Emotions associated with failed searches in a digital library

Maram Barifah and Monica Landoni.

Introduction. This paper discusses causes behind failed searches from the searchers’ perspective and examines associated emotions.

Method. We conducted an online study with real users in their natural settings. Participants were asked to use a digital library and run one specific and one exploratory search task, using their own topics. They also answered pre- and post-questionnaires for both task types.

Analysis. Three types of analysis were conducted: (i) a descriptive analysis of the questionnaire answers, (ii) a textual analysis of users’ failure reports to identify the causes of failure and elicit the associated emotions, and (iii) a behavioural analysis of the interactions recorded in log files.

Results. Users identified lack of coverage and poor usability as the main causes of failed searches. Comparing search behaviour with participants’ declared perceptions of the causes of failure, we found that the digital library functionalities were not fully used. Poor awareness of these functionalities could be an unreported cause of search failures. In general, users had a positive attitude toward the digital library, expressing trust, joy, and anticipation. Anger and sadness were linked specifically to failed searches.

Conclusions. There persists a need to improve digital library systems and simplify their interfaces. Emotions are a significant factor that needs to be considered in user experience studies.

DOI: https://doi.org/10.47989/irisic2027

Introduction

The concept of success or failure as an outcome of information seeking was introduced by Wilson (1999) in his model of information seeking behaviour. Mansourian (2008) defined search failure as ‘the situation in which users attempt to satisfy their information needs, but they fail to do so’ (p. 29). Unsuccessful search experiences force users to refine their searches, ultimately resulting in low expectations, frustration, and less perseverance. Although the cognitive perspective has long dominated the area of information behaviour, affective behaviour, which includes emotion, mood, preference, and evaluation from a non-cognitive perspective (Julien, et al., 2005), is now recognised as an integral part of developing a holistic understanding of an individual’s approach to information seeking and use. Much of our daily experience influences, and is influenced by, the emotions we feel, and our experience with digital libraries is no exception. A large body of research suggests that experiencing failure has marked emotional and psychological consequences across a range of individuals and settings (Johnson, 2017).

Previous research has confirmed the difficulties that searchers face when interacting with OPAC and digital library systems. However, investigations of search failures from the searcher’s perspective are scarce. Understanding search failures and the associated user perceptions might help designers create better user experiences and provide more usable and useful digital library systems.

Because emotions not only regulate our social encounters, but also influence our cognition, perception and decision-making through a series of interactions with our intentions and motivations (Scherer, 2001), the emotions associated with failure situations need further investigation. Thus, this work attempts to answer the following research questions:

RQ1: What are the reasons behind search failures from the searchers’ perspective?

RQ2: What are the primary emotions individuals experience in failed searches?

The following section reviews previous work. Next, the methodology is described and the data analysis and results are presented. The findings of this study are then examined in the discussion section, followed by the limitations and the conclusion.

Literature review

Generally, online search failures occur when systems fail to retrieve the desired information (Drabenstott and Weller, 1996). Searchers might encounter failures when the system returns too many results, too few results, no results (zero hits), or irrelevant or confusing results (Lau and Goh, 2006; Xie and Cool, 2009). In the digital library domain, failed searches have been investigated implicitly, by analysing query behaviour extracted from log files, and explicitly, by conducting user studies. Studies in the former category identified ill-formed queries as the primary cause of failure, including typing and spelling errors (Peters, 1989), poor query formulation (Antell and Huang, 2008), irrelevant keywords, spelling mistakes, and incorrect use of Boolean logic (Debowski, 2001). User studies helped to identify three main factors that could lie behind failed searches: task characteristics, system performance and design, and user attributes. Examples of relevant task characteristics are search type (e.g., known-item and unknown-item searches) (Slone, 2000) and characteristics of the information needed (e.g., vague or unfamiliar) (Tang, 2007; Wildemuth, et al., 2013). System factors include the limited coverage of the digital library (Behnert and Lewandowski, 2017) and the user interface (Fast and Campbell, 2004; Blandford, et al., 2001; Capra, et al., 2007).

A pioneering study by Borgman (1996) identified a gap between the design of online catalogues and users’ search behaviour as a possible reason for failed searches. She showed that interacting with online catalogues requires a rich conceptual framework for information retrieval, whereas most end-users lack this conceptual knowledge compared with expert librarian searchers. More recently, Xie and Cool (2009) identified users’ domain knowledge and search experience as significant user attributes contributing to failed searches; other contributing factors include lack of awareness of the purpose of certain limiters, incorrect selection of the search index, and misuse of facets (Trapido, 2016; Peters, 1989).

A pioneering model that encompasses users’ affective experience as well as cognitive constructs within the information search process is Kuhlthau’s information search process model (Kuhlthau, 1993). More broadly, experiencing failure has marked emotional and psychological consequences across a range of individuals and settings (Johnson, 2017). As an example of the effect of setting, Poddar and Ruthven (2010) found that artificial tasks produce higher uncertainty than genuine search tasks. They also examined the effect of task type, finding that complex search tasks were associated with fewer positive emotions and more uncertainty both before and after searching. Gwizdka and Spence (2007) found that the time spent searching (search duration) is associated with subjective feelings of lostness in Web searches.

In librarianship and information science research, a seminal systematic review was conducted by Julien et al. (2005). The review demonstrated the importance of the affective dimension (e.g., emotion or confidence) in human information behaviour. It also showed that system-oriented research in librarianship and information science pays little attention to affective variables, and it encouraged researchers in the librarianship and information science community to move beyond a focus on system technicalities or the cognitive aspects of searching behaviour to include affective variables.

Emotions, as one of the affective variables, can be defined as ‘an integrated feeling state involving physiological changes, motor-preparedness, cognitions about action, and inner experiences that emerges from an appraisal of the self or situation’ (Mayer, et al., 2014, p. 508). Bilal (2000) investigated children’s information seeking behaviour on fact-based search tasks, including their affective states among other factors. The study concluded that children experienced negative feelings in a few cases, including lack of matches and difficulty in finding the answer. The importance of considering affective variables in information behaviour is further underlined by affective load theory (ALT) (Nahl, 2005). Nahl (2005) reported how an investigation of students’ affective loads revealed that individuals with higher affective coping skills (e.g., self-efficacy, optimism) were better able to function in information seeking situations, even when they had lower cognitive skills. Lopatovska (2014) found direct relationships between primary emotions and search actions. Mckie and Narayan (2019) developed Lib-Bot, a digital library chatbot intended to minimise the effects of library anxiety when searching library databases and using librarian services, and instead to induce a sense of ease while using library resources.

Positive affect has been investigated more intensively than negative affect in librarianship and information science research (Fulton, 2009). Our brief review of studies on failed searches suggests that affective variables have not yet been extensively investigated in the digital library domain. To this end, this work examines the emotions associated with failed searches.

Methodology

The study was conducted online by inviting real users to carry out the experiments in their own natural settings. The RERO Doc digital library was chosen as the experimental platform. RERO Doc offers free access to its contents and services to users internationally. Its collection covers many different domains (e.g., nursing, economics, health, language, computer science, and history). The digital library catalogue lists over 6 million items in various formats, including dissertations, books, articles, periodicals, photographs, maps, digitised press, music scores, and sound recordings. The digital library supports four interface languages: French, English, German, and Italian. The system is designed to support different information discovery activities: searching, browsing, and navigating. Figure 1 shows the experimental interface of the digital library with (1) the information form, (2) the specific task, and (3) the exploratory task.

The participants were encouraged to use the digital library to run two search tasks, one specific and one exploratory, and to focus on topics of their own choosing. We let participants select their own topics for three reasons. Firstly, the purpose and nature of the study design required self-assessment of the search results in relation to the participants’ real information needs. Secondly, this avoided the bias that might result from the task narrative and query construction. Thirdly, the digital library collections cover various knowledge domains, including nursing, economics, health, computer science and history, so it would have been impossible to design topics that covered all these domains and guaranteed the engagement of the online participants.

The tasks were framed as scenarios typical of academic information seeking, using wording adapted from Hoeber, et al. (2019), as follows.

Scenario 1:

Suppose you are writing a paper for your specialized major and you need to look-up or verify a specific fact (e.g., the date of an event, the correct spelling of someone’s name, the details of a specific research paper, the name of a book or author). Kindly use RERO Doc as the information source to accomplish this task.

Scenario 2:

Suppose you are writing a paper on a topic of your interest and you wish to gather more information to enhance your existing knowledge or discover new knowledge. Kindly use RERO Doc as the information source.

When participants started the experiment, an information form was presented containing a description of the study, an introduction to the research team, and the electronic informed consent form. Participants’ demographic data, search skills and system expertise were collected in this form.

The participants were asked to fill out a pre-questionnaire before searching, through which descriptions of their information needs, perceived knowledge, familiarity with the topic and perceived complexity were collected explicitly. After searching, participants completed a post-questionnaire in which they self-assessed their overall search performance. Participants also answered open-ended questions about their experience with the task and with the digital library in general.

Figure 1: The experimental website

Data analysis

This section presents the results of the qualitative and quantitative data analysis. Only unsuccessful searches were considered in the analysis. Table 1 summarises the submitted participations. Of the ninety-six participations in total, thirty-four were incomplete and were eliminated for one or more of the following reasons: one or both questionnaires were missing, participants did not conduct real searches for one or both scenarios, participants completed only one scenario, or participants misunderstood the scenario descriptions (e.g., they conducted both searches with the same task type instead of one specific and one exploratory). Mixed participations are those in which one of a participant’s two tasks was successful and the other failed. Thirty-two participants assessed the outcome of both tasks as failed and provided full questionnaire data. These failed searches resulted in 64 search sessions, all of which were included in the analysis here.

| Total no. of participations | Incomplete participations | Mixed participations | Successful participations | Failed participations |
|---|---|---|---|---|
| 96 | 34 | 10 | 12 | 32 |

Demographic data

The participants are representative of the general users of the library in terms of disciplines, academic status and other user characteristics. Table 2 presents the participants’ characteristics. Of the 32 participants, 18 were female, 13 were male, and one did not specify their sex. Around sixty per cent of the participants were 28–37 years old, 30% were aged 18–27, and three participants were aged 38–44. The participants came primarily from the Faculty of Computer Science (46%) and the Faculty of Communication Sciences and Business (38%). Less than 16% were from the Faculty of Medicine or the Faculty of Architecture and Engineering. Most participants (60%) were doctoral and master’s degree students, followed by bachelor’s degree students (20%), researchers (10%), and professors (10%).

| Sex | Age | Academic status | Discipline |
|---|---|---|---|
| 18 female | 28–37: 19 participants (59%) | Doctoral & master’s students: 19 participants (59%) | Computer science: 15 participants (46%) |
| 13 male | 18–27: 10 participants (31%) | Bachelor’s students: 7 participants (21%) | Communication & business: 12 participants (38%) |
| 1 unspecified | 38–44: 3 participants (10%) | Researchers & professors: 6 participants (20%) | Engineering & medicine: 5 participants (16%) |

System expertise level

The level of system expertise was considered a significant variable, following Borgman (1996), Xie and Cool (2009) and Trapido (2016), who argued that the current design of digital libraries requires adequate knowledge of information retrieval mechanisms. In this study, system expertise was self-assessed by the participants on three aspects: frequency of using the digital library, degree of familiarity with the searching tools in digital libraries (e.g., facets, sort and advanced search), and rating of their own digital library searching skills. The participants showed varying levels of system expertise, which enabled us to categorise them as non-experts (N=14) or experts (N=18). The non-expert participants were not familiar with digital library searching tools, had rarely conducted searches within the digital library, and considered themselves beginner or intermediate searchers. The experts, on the other hand, were quite familiar with the searching tools in the digital library, used the digital library frequently, and regarded themselves as advanced searchers (good at using advanced search functions, e.g., Boolean operators, and at filtering results by facets). Table 3 shows the expertise analysis results.

| Frequency of searching digital libraries | Never | Rarely | Occasionally | Often | Always (daily use) |
|---|---|---|---|---|---|
| How often do you conduct searches in digital libraries? | 0 participants (0%) | 7 participants (23%) | 9 participants (26%) | 9 participants (28%) | 7 participants (23%) |

| Familiarity with the searching tools (facets, sort and advanced search) | Not familiar at all | Slightly familiar | Somewhat familiar | Moderately familiar | Extremely familiar |
|---|---|---|---|---|---|
| Please indicate your degree of familiarity with searching tools in digital library | 2 participants (6%) | 6 participants (20%) | 9 participants (27%) | 9 participants (27%) | 6 participants (20%) |

| Digital library searching skills | Beginner | Intermediate | Advanced | Expert |
|---|---|---|---|---|
| How can you rate your searching skill on the digital libraries? | 7 participants (23%) | 8 participants (24%) | 14 participants (44%) | 3 participants (9%) |

Domain expertise level

Topic characteristics, as a factor influencing information seeking, were also considered in the analysis. Three aspects of topic characteristics were measured: level of domain knowledge, perceived difficulty, and familiarity. All three were self-assessed on a five-point Likert scale, as shown in Table 4.

Specific task: most participants (90%) reported having good or very good knowledge of the topic they searched for, 92% rated their topic as easy or very easy, and 80% rated themselves as familiar or extremely familiar with the topic.

Exploratory task: whereas most participants gave similar ratings of familiarity, knowledge, and ease for the specific tasks, their reactions to the exploratory tasks and their characteristics were more mixed. In terms of domain knowledge, fifty per cent reported having good or very good knowledge, 23% had adequate knowledge and 27% had basic or no knowledge. For perceived difficulty, 41% rated their topic as easy or very easy, 33% as moderately difficult, and 26% as difficult or very difficult. For familiarity, 43% of participants stated that they were familiar or extremely familiar with the topic, 23% were somewhat familiar and 34% were unfamiliar or not familiar at all. Table 4 shows the exploratory task characteristics. Accordingly, the participants were divided into knowledgeable searchers, who had good or very good knowledge of the topic and were therefore familiar or extremely familiar with it (N=15), and non-knowledgeable searchers (N=17).

| Knowledge about the topic | No knowledge | Basic | Adequate | Good | Very good |
|---|---|---|---|---|---|
| How much do you know about the topic you are searching for? | 3 participants (10%) | 5 participants (17%) | 8 participants (23%) | 9 participants (29%) | 7 participants (21%) |

| Easiness of the topic | Very difficult | Difficult | Moderate | Easy | Very easy |
|---|---|---|---|---|---|
| How easy do you think the topic is? | 2 participants (8%) | 6 participants (18%) | 11 participants (33%) | 10 participants (31%) | 3 participants (10%) |

| Familiarity with the topic | Not familiar at all | Unfamiliar | Somewhat familiar | Familiar | Extremely familiar |
|---|---|---|---|---|---|
| How familiar are you with the topic you are searching for? | 2 participants (8%) | 9 participants (26%) | 8 participants (23%) | 10 participants (30%) | 3 participants (13%) |

Search performance

The main objective of this work is to investigate the reasons behind search failures from the searchers’ perspective. Searching for information is a process affected by personal attributes (e.g., knowledge and experience), task attributes (e.g., type and complexity), system design (e.g., interface design and content coverage), and search performance or interaction outcome (Xie and Cool, 2009).

To measure search performance, a user-oriented approach was adopted. Based on previous work, three main criteria were identified. The first is the user-defined relevance of the information found, which depends on users’ knowledge and perceptions and is affected by factors including the search situation, the users’ goals, knowledge level and beliefs, the nature of the information being evaluated, and the time, effort and cost involved in obtaining information (Savolainen and Kari, 2006). Because relevance alone is not sufficient, the second criterion is satisfaction with the information found (Belkin, et al., 2008). Satisfaction is defined as ‘the extent to which users believe the information system available to them meets their information requirements’ (Ives, et al., 1983, p.785). The third criterion is the overall outcome of the search process, evaluated once a person has finished a search episode. The success of the search process is affected by subjective factors including search expertise, prior knowledge of and interest in the topic, and flow experience (Wirth, et al., 2016; Wang, et al., 2017).

The three criteria were assessed by self-rating on a five-point Likert scale: relevance of the information found (highly relevant=1, not relevant=5), satisfaction with the information (completely satisfied=1, not at all satisfied=5), and search success (extremely successful=1, complete failure=5). Only searches rated negatively on all three criteria were considered in this study.
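The selection rule above can be made concrete with a short Python sketch. It assumes, as a hypothetical reading, that a rating of 4 or 5 on each five-point scale counts as negative (3 being neutral); the exact cut-off is not stated in this section, and the names `SearchRating`, `is_failed` and `NEGATIVE_FROM` are illustrative rather than part of the study's tooling.

```python
from dataclasses import dataclass

# Hypothetical cut-off: on these 1-5 scales (1 = best, 5 = worst), a rating of
# 4 or 5 is treated as negative and 3 as neutral. This is an assumption.
NEGATIVE_FROM = 4


@dataclass
class SearchRating:
    relevance: int     # 1 = highly relevant ... 5 = not relevant
    satisfaction: int  # 1 = completely satisfied ... 5 = not at all satisfied
    success: int       # 1 = extremely successful ... 5 = complete failure


def is_failed(rating: SearchRating) -> bool:
    """Return True only if all three self-ratings are negative."""
    values = (rating.relevance, rating.satisfaction, rating.success)
    if any(not 1 <= v <= 5 for v in values):
        raise ValueError(f"ratings must be on the 1-5 scale: {values}")
    return all(v >= NEGATIVE_FROM for v in values)


# Invented ratings, not study data:
print(is_failed(SearchRating(relevance=5, satisfaction=4, success=5)))  # True
print(is_failed(SearchRating(relevance=4, satisfaction=3, success=5)))  # False
```

Requiring all three ratings to be negative, rather than any one of them, mirrors the "only negative responses" criterion and keeps mixed judgements out of the failed set.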

Behavioural analysis

Because search behaviour is recognised as an indicator of search quality (Debowski, 2001), behavioural signals, that is, the interactions recorded in the log files, were quantified and treated as dependent variables. We compared the behavioural signals of experts and non-experts. The main objective of the behavioural analysis was to gain a better understanding of the underlying causes of failed searches, in particular the extent to which the system functionality and support tools (e.g., advanced search and filter functions) were used at different expertise levels. Table 5 shows the metrics used to quantify behaviour. The analysis of the 64 search sessions yielded the following observations.

| Metric | Description |
|---|---|
| Session duration (SD) | Average duration of the session |
| Action variables | |
| Simple search (SS) | Number of searches using simple search |
| Advanced search (AS) | Number of searches using advanced search |
| Facet used (FU) | Number of facets used |
| Result page (RP) | Number of visits to the result page |
| Query behaviour variables | |
| Number of queries (Q) | Number of queries (including reformulations) per session |
| Query length (QL) | Number of terms in each query |
| Search result actions | |
| Clicked result (CR) | Number of results clicked |
| View item (VI) | Number of items viewed |
| Downloaded item (DI) | Number of items downloaded |
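To illustrate how the Table 5 metrics could be derived from interaction logs, the following Python sketch computes them for a single session. The `LogEvent` record and the event type names (`simple_search`, `result_page`, `facet`, and so on) are hypothetical, since the RERO Doc log format is not described here; query length is averaged over the session's queries, which is one possible reading of QL.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


# Hypothetical log record; the real RERO Doc log schema is not given here.
@dataclass
class LogEvent:
    timestamp: datetime
    kind: str        # hypothetical event type, e.g. "simple_search", "facet"
    query: str = ""  # only set for search events


SEARCH_KINDS = {"simple_search", "advanced_search"}


def session_metrics(events: list[LogEvent]) -> dict[str, float]:
    """Compute Table 5-style metrics for one session's events."""
    events = sorted(events, key=lambda e: e.timestamp)

    def count(kind: str) -> int:
        return sum(1 for e in events if e.kind == kind)

    queries = [e.query for e in events if e.kind in SEARCH_KINDS]
    duration = 0.0
    if events:
        duration = (events[-1].timestamp - events[0].timestamp).total_seconds()
    return {
        "SD": duration,                 # session duration in seconds
        "SS": count("simple_search"),
        "AS": count("advanced_search"),
        "FU": count("facet"),
        "RP": count("result_page"),
        "Q": len(queries),              # queries issued, incl. reformulations
        "QL": (sum(len(q.split()) for q in queries) / len(queries)) if queries else 0.0,
        "CR": count("click_result"),
        "VI": count("view_item"),
        "DI": count("download_item"),
    }


# Usage with an invented session (not study data):
t0 = datetime(2020, 1, 1, 10, 0, 0)
session = [
    LogEvent(t0, "simple_search", "nursing ethics"),
    LogEvent(t0 + timedelta(seconds=20), "result_page"),
    LogEvent(t0 + timedelta(seconds=45), "click_result"),
    LogEvent(t0 + timedelta(seconds=50), "view_item"),
    LogEvent(t0 + timedelta(seconds=90), "simple_search", "nursing ethics dementia care"),
    LogEvent(t0 + timedelta(seconds=120), "result_page"),
]
metrics = session_metrics(session)
print(metrics["SD"], metrics["Q"], metrics["QL"])  # 120.0 2 3.0
```

Per-group averages (experts versus non-experts) would then be taken over the per-session dictionaries.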

Generally, the mean session duration for the specific task (two minutes for both groups) was shorter than for the exploratory task (eight minutes for experts and five for non-experts). In the specific searches, the behavioural analysis showed that advanced search functions and filters (e.g., facets and sort functions) were never used by members of either group. The results also indicate that participants did not go beyond the initial search results. Expert searchers showed more complex and dynamic interactions on the exploratory task than on the specific task. Their sessions lasted around eight minutes on average, went through several iterations, made light use of facets, and returned to the search results page very frequently. Both experts and non-experts tended to click on and view items rather than download them, which might be an indication of unsuccessful searches. Like experts, non-experts spent more time on the exploratory task than on the specific task (five minutes on average), but less time than the experts. They also visited the search result page less often and rarely used advanced search functions or facets. The Mann-Whitney U test results in Table 6 indicate that, for the exploratory task, experts and non-experts differed significantly in only two respects: session duration (Sig. = 0.00 < 0.05) and the number of visits to the result page (Sig. = 0.00 < 0.05). Expert searchers had longer sessions than non-experts and returned to the search result page more frequently while conducting exploratory searches. For the specific task, the two groups did not differ significantly on any behavioural signal.

| Behavioural signal | Specific task U | Specific task Z | Specific task Sig. | Exploratory task U | Exploratory task Z | Exploratory task Sig. |
|---|---|---|---|---|---|---|
| Session duration | 83 | 1.6 | 0.09 | 8 | 4.5 | 0.00 |
| Simple search | 98 | 1.1 | 0.23 | 81 | 1.5 | 0.06 |
| Advanced search | 119 | 0.88 | 0.37 | 105 | 1.5 | 0.11 |
| Facet used | 114 | 0.79 | 0.42 | 112 | 1.2 | 0.20 |
| Number of queries | 92 | 1.54 | 0.12 | 110 | 0.66 | 0.50 |
| Query length | 104 | 0.92 | 0.35 | 115 | 0.42 | 0.67 |
| Result page | 124 | 0.08 | 0.93 | 49 | 3.0 | 0.00 |
| Clicked result | 124 | 0.08 | 0.93 | 99 | 1.0 | 0.28 |
| View item | 122 | 0.18 | 0.85 | 120 | 0.25 | 0.79 |
| Downloaded item | 126 | 0.00 | 1.0 | 99 | 1.2 | 0.22 |
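The group comparison in Table 6 can be sketched in Python as a Mann-Whitney U test with the usual normal approximation. This is a minimal illustration, not the statistics package used in the study: it omits the tie correction to the variance, so its Z and Sig. values may differ slightly from a full implementation when many values are tied. With 18 experts and 14 non-experts, U ranges from 0 to 252 and has an expected value of 126 under the null hypothesis, which is why a U of 126 corresponds to Z = 0 and Sig. = 1.0 for downloaded items.

```python
import math


def rank_with_ties(values):
    """Return 1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1  # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test via the normal approximation.

    Returns (U, |Z|, p). No tie correction is applied to the variance.
    """
    if not x or not y:
        raise ValueError("both groups must be non-empty")
    n1, n2 = len(x), len(y)
    ranks = rank_with_ties(list(x) + list(y))
    rank_sum_x = sum(ranks[:n1])
    u_x = rank_sum_x - n1 * (n1 + 1) / 2
    u = min(u_x, n1 * n2 - u_x)  # report the smaller of U_x and U_y
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = abs(u - mean_u) / sd_u
    p = math.erfc(z / math.sqrt(2))  # two-sided p from the standard normal
    return u, z, p


# Invented data, not study data:
u, z, p = mann_whitney_u([1, 2, 3], [4, 5, 6])
print(u, round(z, 2))  # 0.0 1.96
```

Applying the same formulas to U = 8 with groups of 18 and 14 gives |Z| of about 4.5, in line with the session duration row for the exploratory task.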

Causes for failure

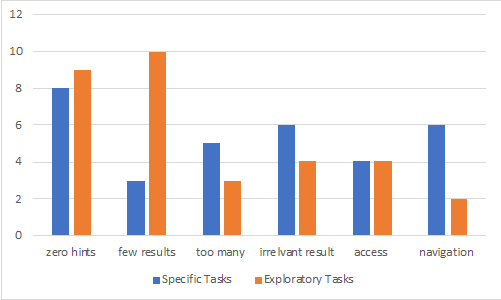

To address RQ1, participants were asked to explain why they thought their searches had failed. A coding scheme was developed to analyse the answers (Table 7 shows examples). The reasons were identified and classified into two main categories: digital library coverage (e.g., zero hits, irrelevant results, and too many or too few results) and usability problems, including accessibility and navigation issues. Figure 2 shows the frequency of the problems across both task types: specific tasks (ST) and exploratory tasks (ET).

| Category | Sub-category | Number of participants per task | Examples |
|---|---|---|---|
| Coverage of the digital library | Too many results | 5 ST, 3 ET | "I was expecting very precise responses." "I would prefer to find more shorter documents." "I wanted to know very basic things and the results returned were too many." |
| | Too few results | 3 ST, 10 ET | "The collection of books and research papers is very limited." "Not all the publications of the author were found." "I found only some articles related to my search topic." |
| | Zero hits | 8 ST, 9 ET | "I did not get any result back." "There were no such books." "I couldn’t find any information about my search." |
| | Irrelevant results | 6 ST, 4 ET | "The papers found were not exactly what I was looking for." "The documents provided to the query were not relevant." "There were not major key sources." |
| Usability | Accessibility | 4 ST, 4 ET | "I did not find it easy to find how to go to the full text of the article." "I would prefer to be able to download the research papers rather than viewing it online with the viewer software." "I could not access the digitalized version of old research papers." |
| | Navigation | 6 ST, 2 ET | "It will be good if there is an icon to go back to search results page." "There is no date facet." "The result page include documents in other languages." |

Figure 2: The frequency of the problems for both tasks
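The category totals behind Figure 2 can be recomputed from the counts in Table 7. The Python sketch below sums the sub-category counts per category and task type; the `TABLE_7` dictionary simply transcribes Table 7, and the helper name `totals_by_category` is illustrative.

```python
# Counts transcribed from Table 7: (category, sub-category) -> (ST, ET)
TABLE_7 = {
    ("Coverage", "Too many results"): (5, 3),
    ("Coverage", "Too few results"): (3, 10),
    ("Coverage", "Zero hits"): (8, 9),
    ("Coverage", "Irrelevant results"): (6, 4),
    ("Usability", "Accessibility"): (4, 4),
    ("Usability", "Navigation"): (6, 2),
}


def totals_by_category(table):
    """Sum specific-task (ST) and exploratory-task (ET) counts per category."""
    totals = {}
    for (category, _subcategory), (st, et) in table.items():
        prev_st, prev_et = totals.get(category, (0, 0))
        totals[category] = (prev_st + st, prev_et + et)
    return totals


totals = totals_by_category(TABLE_7)
for category, (st, et) in totals.items():
    print(f"{category}: ST={st}, ET={et}, total={st + et}")
```

The totals come to 22 ST and 26 ET for coverage and 10 ST and 6 ET for usability, so coverage problems account for 48 of the 64 failed sessions. Each task type sums to 32, matching the 32 participants with failed searches, which is consistent with one coded cause per failed session.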

Emotions analysis

To answer the second research question, a textual analysis was conducted using the Linguistic Inquiry and Word Count (LIWC) software. LIWC is a psycholinguistic lexicon tool created by psychologists to help non-specialists measure psychological categories in text. It consists of dictionaries in which words are associated with the categories, including emotions, that they evoke, thereby capturing word-emotion connotations. The software can detect different psychological categories embodied in individuals’ spoken and written language samples, including emotional, cognitive and structural components (Santos and Vieira, 2017).

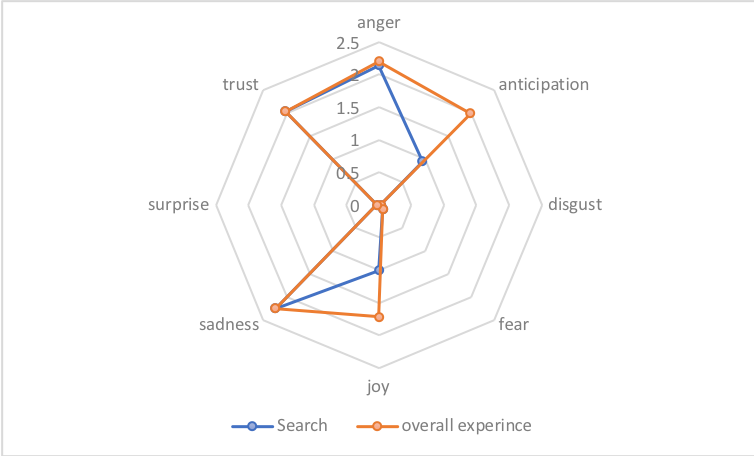

We analysed sixty-four excerpts reporting search failures, which the participants provided in the open-ended questions of the post-questionnaires. We asked the participants to reflect on their failure experiences by answering the following open-ended questions: (1) If you are not satisfied with your searching, can you please explain why? and (2) What do you think of your overall experience with the digital library? The proportion of words that scored positively on LIWC categories was then measured. Table 8 shows the average number of excerpts that contain at least one word associated with a particular emotion (based on Plutchik’s Wheel of Emotions). The radar chart in Figure 3 shows that users had a positive attitude toward the digital library in general, including emotions such as trust, joy, and anticipation. Although joy and anticipation dropped when participants reflected on their search experiences, they still expressed the same level of trust in the digital library as an information source. The two most frequent emotions in the failed searches, both linked to negative feelings, were anger and sadness. Such unpleasant emotions might prevent users from persevering towards their information seeking goals. Being able to detect such emotions might help system designers improve the design of, and user experience with, digital libraries.

Figure 3: The distribution of the emotions

| Emotion | Search | Overall experience |
|---|---|---|
| Anger | 2.1 | 2.2 |
| Anticipation | 0.9 | 1.9 |
| Disgust | 0.0 | 0.0 |
| Fear | 0.0 | 0.0 |
| Joy | 1.0 | 1.7 |
| Sadness | 2.2 | 2.2 |
| Surprise | 0.0 | 0.0 |
| Trust | 2.0 | 2.0 |
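The counting step described above (excerpts containing at least one emotion-associated word) can be sketched in Python as follows. The lexicon here is a tiny hypothetical stand-in, not LIWC's proprietary dictionaries or any Plutchik-based lexicon, and the example excerpts are invented, not participant data. Real lexicon tools also handle stemming and multi-word entries, which this sketch ignores, and it is not a reconstruction of how the Table 8 values were computed.

```python
import re

# Hypothetical toy lexicon, for illustration only.
TOY_LEXICON = {
    "anger": {"angry", "annoying", "frustrating"},
    "sadness": {"sad", "disappointed", "unfortunately"},
    "trust": {"trust", "reliable", "useful"},
    "joy": {"enjoyed", "good", "great"},
}


def excerpts_per_emotion(excerpts, lexicon):
    """Count, for each emotion, the excerpts with at least one matching word."""
    counts = {emotion: 0 for emotion in lexicon}
    for text in excerpts:
        tokens = set(re.findall(r"[a-z']+", text.lower()))  # crude tokeniser
        for emotion, words in lexicon.items():
            if tokens & words:  # any overlap counts once per excerpt
                counts[emotion] += 1
    return counts


# Invented excerpts, not participant data:
excerpts = [
    "Unfortunately the search returned nothing.",
    "The collection is useful but the viewer is frustrating.",
    "A good and reliable source.",
]
counts = excerpts_per_emotion(excerpts, TOY_LEXICON)
print(counts)  # {'anger': 1, 'sadness': 1, 'trust': 2, 'joy': 1}
```

Dividing each count by the number of excerpts turns it into the share of excerpts expressing that emotion, which makes groups of different sizes comparable.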

Discussion

Investigating search failures is crucial to enriching our understanding of user experiences and identifying how to improve system functionality. Log file analysis is the preferred source for measuring success and failure rates because of its availability and objectivity; however, studying search failures alongside users’ attributes provides a better understanding of the barriers to successful searching. Whether a search is identified as unsuccessful depends on the searchers’ definition of an unsuccessful search (Trapido, 2016), on users’ personal and cognitive characteristics, and on the nature of the task (Xie and Cool, 2009).

We can now answer the first research question: what are the reasons behind search failures from the searchers’ perspective? Our participants’ explanations pointed to two main reasons: the lack of coverage of the digital library and usability problems. This is in line with previous work: Antelman, et al. (2006) and Chan and O’Neill (2010) found that unclear coverage, vague representation of information objects, and ineffective search mechanisms result in unsuccessful searches. Similarly, Trapido (2016) discussed the effect of the size of the underlying database, the system’s search capabilities, and interface design on unsuccessful searches.

However, our analysis of the usage patterns extracted from the log files also examined the actual use of support tools (e.g., advanced search and filter functions) across expertise levels, to better understand the underlying causes of failed searches. We found that neither expert nor non-expert searchers fully used the digital library functionalities. The behavioural analysis suggested that several searches might have succeeded had the searchers used functions that the system offered. Searchers might not understand how to craft an appropriate search strategy to meet their information needs. For example, one participant complained about being unable to find old references quickly but did not use the sort function, which would have displayed the results chronologically. Another frequent comment concerned the difficulty of distinguishing English from non-English documents in the results. RERO Doc offers a results-filtering function with different facet types, including language, but the usage pattern analysis showed little use of these discovery tools. Using facets could have helped turn these failed searches into successful ones; Sadeh (2007) argued that facets can reduce a large result set to a manageable size. Our observations are also in line with previous work, including Antelman, et al. (2006) and Blumer, et al. (2014), which confirmed the importance of facets as a discovery tool for reducing frustrating experiences with unhelpful result sets. However, Capra, et al. (2007) reported problems associated with facet implementation and highlighted the need to train users to make better use of facets, given that not all searchers find facets beneficial.

The inadequate use of these tools could be due to a lack of awareness of their importance or a poor conceptual understanding of how they work. Both should be explored further as unreported causes of search failures. We conclude that there is a mismatch between the searchers’ awareness, their actual interactions and the functionality of the digital library. Accordingly, there is still a need to bridge the gap between digital library design, usability issues and user awareness.

More than twenty years ago, Borgman (1996) called for bridging the gap between search behaviour and the design of online catalogues. Users are still experiencing search failures because of poor query formulation and difficulty in identifying the appropriate access points. As solutions, Borgman suggested providing training to users and, more importantly, designing better systems informed by research results.

Today, there persists a need to improve digital library systems and simplify their interfaces. Query suggestion or autocompletion functions could better support the search experience and reduce the time taken by struggling searchers, as noted by Barifah and Landoni (2019). Designing simple, fast, and easy-to-use search systems and interfaces could be the way forward. In particular, an interface that increases users’ awareness of facets and of how to apply them correctly remains a worthwhile goal (Trapido, 2016).
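Query autocompletion can be built in many ways. As a hedged illustration only, the sketch below assumes the simplest approach: prefix matching over a hypothetical log of past queries, ranked by how often each query was issued. The function names and the query log are invented for this example; it does not describe any existing digital library’s implementation.

```python
import bisect
from collections import Counter


def build_index(query_log):
    """Normalise logged queries and return (sorted unique queries, frequencies)."""
    counts = Counter(q.strip().lower() for q in query_log)
    return sorted(counts), counts


def suggest(prefix, index, counts, k=3):
    """Return up to k logged queries starting with prefix, most frequent first."""
    prefix = prefix.strip().lower()
    # In a sorted list, all strings sharing a prefix form one contiguous run
    # that begins at the insertion point of the prefix itself.
    start = bisect.bisect_left(index, prefix)
    matches = []
    for query in index[start:]:
        if not query.startswith(prefix):
            break
        matches.append(query)
    # Rank by popularity, breaking ties alphabetically.
    matches.sort(key=lambda q: (-counts[q], q))
    return matches[:k]


# Hypothetical query log.
log = [
    "digital library",
    "Digital library",
    "digital libraries",
    "digital humanities",
    "data mining",
    "digital library usability",
]
index, counts = build_index(log)
print(suggest("digital li", index, counts))
# ['digital library', 'digital libraries', 'digital library usability']
```

Ranking by frequency is only one design choice; a real system might instead weight suggestions by collection metadata so that they steer users towards terms that actually retrieve documents.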

Turning to the emotional analysis and the second research question (what are the primary emotions individuals experience in failed searches?), researchers have confirmed that investigating affective aspects together with other information searching factors helps us understand information behaviour more holistically, as emotional responses may influence the information search process and searchers’ actions (Kuhlthau, 1993; Fulton, 2009). It is important to consider not only how an individual navigates information but also what emotions they experience. Emotions play a significant role in determining how successful search sessions might be and to what extent users persevere in accomplishing a task (Gwizdka and Lopatovska, 2009). Prior work confirmed that emotion is a ubiquitous element in user interactions (Brave, et al., 2005); therefore, system designers should consider it when designing usable and intelligent systems (Karat, 2003).

As the literature points out, people’s emotions, feelings, and attitudes can be conveyed through the words they use; in this work, we therefore analysed 64 textual excerpts from the post-search questionnaire. The findings indicate that sadness and anger were the primary emotions participants experienced when their searches failed. This finding is in line with Johnson, et al. (2011), who confirmed that as failure experiences deepened, feelings of sadness, defeat and frustration increased. This, in turn, has a detrimental impact on cognitive functioning, as it reduces the accuracy of memory recall (Johnson, et al., 2017).
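The idea that emotions can be read from word choice is often put into practice with an emotion lexicon that maps words to emotion categories. The sketch below assumes a tiny, hypothetical lexicon and plain word matching, only to show the mechanics of counting emotion categories across excerpts. It is not the procedure used in this study, and both the lexicon entries and the excerpts are invented for illustration.

```python
import re
from collections import Counter

# Hypothetical toy lexicon: each word maps to one or more emotion categories.
EMOTION_LEXICON = {
    "frustrated": ("anger",),
    "annoying": ("anger",),
    "angry": ("anger",),
    "disappointed": ("sadness",),
    "useless": ("sadness",),
    "sad": ("sadness",),
    "reliable": ("trust",),
    "helpful": ("trust", "joy"),
    "hope": ("anticipation",),
}


def tag_emotions(excerpt):
    """Count lexicon emotion categories for the words in one excerpt."""
    tokens = re.findall(r"[a-z']+", excerpt.lower())
    counts = Counter()
    for token in tokens:
        for emotion in EMOTION_LEXICON.get(token, ()):
            counts[emotion] += 1
    return counts


def aggregate(excerpts):
    """Sum emotion counts over a collection of excerpts."""
    total = Counter()
    for excerpt in excerpts:
        total.update(tag_emotions(excerpt))
    return total


# Invented excerpts for illustration only.
excerpts = [
    "I was frustrated that the results were useless.",
    "Really annoying, I am disappointed.",
    "The library is reliable and I hope to find more next time.",
]
print(aggregate(excerpts))
```

Simple word matching of this kind ignores negation ("not helpful") and context, which is one reason why lexicon counts are usually read alongside the excerpts themselves.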

Together with raising people’s awareness of how to use the system, we need systems that are better tailored to users’ needs. The results of this study have the following implications for system design. The behaviour analysis showed that search tools (e.g., facets and filters) were rarely used. One way to help users search more successfully is to replace the current facet-based digital library interface with a visualised interface. Visualisation is not new but has rarely been used in the digital library context. Ruotsalo, et al. (2013) designed a scholarly search system based on modelling interactive intent, which allows users to provide relevance feedback to the system through an interactive user interface. The interaction process is supported by a radar-based approach that enables users to find relevant results easily. As future work, we plan to design a visualised interface and evaluate the user experience.

Another supporting solution is a chatbot that could help users redirect their interaction with the digital library. Recently, Mckie and Narayan (2019) developed Lib-Bot, a digital library chatbot aimed at minimising the anxiety related to using library databases and librarian services and at producing a sense of ease when using library resources. Their ultimate goal in developing such a chatbot was to provide a successful and positive experience for the user.

Limitations

We acknowledge that one of the limitations of this study is the relatively small sample of 32 participants, which potentially threatens the validity of our results. However, the sample still covers most relevant types of searchers in terms of demographic attributes (sex, age, academic status, and discipline), system expertise (expert vs novice) and domain knowledge. Another limitation is the dependence on self-reporting, a popular method for eliciting emotions. In the near future, we will conduct a controlled laboratory study to validate the results of the online study reported here and explore a different method of collecting affective data.

Conclusion

This paper is an attempt to shed light on the causes behind failed searches and identify the associated emotions. The nature of digital library search failures was examined from the searchers’ perspective and compared to the actual interactions recorded in the log files. Based on feedback from participants, we identified two main reasons behind unsuccessful searches: limited coverage of the digital library and usability issues. The behaviour analysis revealed a mismatch between the searchers’ awareness, their actual interactions, and the functionality of the digital library. This paper also investigated the emotions associated with failed searches and identified them as anger and sadness. We conclude that digital library users are encountering the same search failure problems as those reported in the literature more than 20 years ago. Thus, there is a need to improve digital library systems and simplify their interfaces.

Acknowledgements

Special thanks to Saeed Rahmani for helping to set up the experimental website.

About the authors

Maram Barifah is a PhD candidate at the Faculty of Informatics at the Università della Svizzera italiana, USI, via G. Buffi 13, CH-6904 Lugano, Switzerland. She is interested in digital libraries’ design and evaluation. She can be contacted at maram.barifah@usi.ch

Monica Landoni holds a PhD in Information and Computer Sciences from University of Strathclyde. Her research interests lie mainly in the fields of Interactive Information Retrieval and Electronic Publishing, particularly in the area of design and evaluation of Child-Computer Interactions. She is a senior researcher at the Faculty of Informatics at the Università della Svizzera italiana, USI, via G. Buffi 13, CH-6904 Lugano, Switzerland. She can be contacted at monica.landoni@usi.ch.

References

- Antell, K. & Huang, J. (2008). Subject searching success: transaction logs, patron perceptions, and implications for library instruction. Reference & User Services Quarterly, 48(1), 68-76. http://dx.doi.org/10.5860/rusq.48n1.68

- Antelman, K., Lynema, E. & Pace, A.K. (2006). Toward a 21st century catalog. Information Technology and Libraries, 25(3), 128-139. http://dx.doi.org/10.6017/ital.v25i3.3342

- Barifah, M. & Landoni, M. (2019). Exploring usage patterns of a large-scale digital library. In Proceedings of the ACM/IEEE Joint Conference on Digital Libraries (JCDL) (pp. 67-76). IEEE. http://dx.doi.org/10.1109/JCDL.2019.00020

- Behnert, C. & Lewandowski, D. (2017). Known-item searches resulting in zero hits: considerations for discovery systems. The Journal of Academic Librarianship, 43(2), 128-134. http://dx.doi.org/10.1016/j.acalib.2016.12.002

- Belkin, N.J., Cole, M. & Bierig, R. (2008). Is relevance the right criterion for evaluating interactive information retrieval? In B. Carterette, P.N. Bennett, O. Chapelle & T. Joachims (Eds.), Proceedings of the ACM SIGIR 2008 Workshop on Beyond Binary Relevance: Preferences, Diversity, and Set-Level Judgments. http://research.microsoft.com/~pauben/bbr-workshop (Archived by the Internet Archive at https://web.archive.org/web/20190330110412/https://www.microsoft.com/en-us/research/wp-content/uploads/2017/01/bbr-workshop-2008.pdf)

- Bilal, D. (2000). Children's use of the Yahooligans! Web search engine: I. Cognitive, physical, and affective behaviors on fact‐based search tasks. Journal of the American Society for Information Science, 51(7), 646-665. http://dx.doi.org/10.1002/(SICI)1097-4571(2000)51:7%3C646::AID-ASI7%3E3.0.CO;2-A

- Blandford, A., Stelmaszewska, H. & Bryan-Kinns, N. (2001). Use of multiple digital libraries: a case study. In Proceedings of the 1st ACM/IEEE-CS joint conference on Digital libraries (pp. 179-188). ACM Digital Library. http://dx.doi.org/10.1145/379437.379479

- Blumer, E., Hügi, J. & Schneider, R. (2014). The usability issues of faceted navigation in digital libraries. it, 5(2), 85-100.

- Borgman, C.L. (1986). Why are online catalogs hard to use? Lessons learned from information‐retrieval studies. Journal of the American Society for Information Science, 37(6), 387-400. http://dx.doi.org/10.1002/(SICI)1097-4571(198611)37:6%3C387::AID-ASI3%3E3.0.CO;2-8

- Brave, S., Nass, C. & Hutchinson, K. (2005). Computers that care: investigating the effects of orientation of emotion exhibited by an embodied computer agent. International Journal of Human-Computer Studies, 62(2), 161-178. http://dx.doi.org/10.1016/j.ijhcs.2004.11.002

- Capra, R., Marchionini, G., Oh, J.S., Stutzman, F. & Zhang, Y. (2007). Effects of structure and interaction style on distinct search tasks. In Proceedings of the 7th ACM/IEEE-CS joint conference on Digital libraries (pp. 442-451). ACM Digital Library. http://dx.doi.org/10.1145/1255175.1255267

- Chan, L.M. & O'Neill, E.T. (2010). FAST: Faceted Application of Subject Terminology: principles and applications (1st ed.). Libraries Unlimited.

- Debowski, S. (2001). Wrong way: go back! An exploration of novice search behaviours while conducting an information search. The Electronic Library, 19(6), 371-382. http://dx.doi.org/10.1108/02640470110411991

- Drabenstott, K.M. & Weller, M.S. (1996). Failure analysis of subject searches in a test of a new design for subject access to online catalogs. Journal of the American Society for Information Science, 47(7), 519-537. http://dx.doi.org/10.1002/(SICI)1097-4571(199607)47:7%3C519::AID-ASI5%3E3.0.CO;2-X

- Fast, K.V. & Campbell, D.G. (2004). “I still like Google”: university student perceptions of searching OPACs and the web. Proceedings of the American Society for Information Science and Technology, 41(1), 138-146. http://dx.doi.org/10.1002/meet.1450410116

- Fulton, C. (2009). The pleasure principle: the power of positive affect in information seeking. Aslib Proceedings, 61(3), 245-261. http://dx.doi.org/10.1108/00012530910959808

- Gwizdka, J. & Spence, I. (2007). Implicit measures of lostness and success in web navigation. Interacting with Computers, 19(3), 357-369. http://dx.doi.org/10.1016/j.intcom.2007.01.001

- Gwizdka, J. & Lopatovska, I. (2009). The role of subjective factors in the information search process. Journal of the American Society for Information Science and Technology, 60(12), 2452-2464. http://dx.doi.org/10.1002/asi.21183

- Hoeber, O., Patel, D. & Storie, D. (2019). A Study of Academic Search Scenarios and Information Seeking Behaviour. In Proceedings of the 2019 Conference on Human Information Interaction and Retrieval (pp. 231-235). ACM Digital Library. http://dx.doi.org/10.1145/3295750.3298943

- Ives, B., Olson, M.H. & Baroudi, J.J. (1983). The measurement of user information satisfaction. Communications of the ACM, 26(10), 785-793. http://dx.doi.org/10.1145/358413.358430

- Johnson, J., Gooding, P.A., Wood, A.M., Taylor, P.J. & Tarrier, N. (2011). Trait reappraisal amplifies subjective defeat, sadness, and negative affect in response to failure versus success in nonclinical and psychosis populations. Journal of Abnormal Psychology, 120(4), 922-934. http://dx.doi.org/10.1037/a0023737

- Johnson, J., Panagioti, M., Bass, J., Ramsey, L. & Harrison, R. (2017). Resilience to emotional distress in response to failure, error or mistakes: a systematic review. Clinical Psychology Review, 52, 19-42. http://dx.doi.org/10.1016/j.cpr.2016.11.007

- Julien, H., McKechnie, L.E. & Hart, S. (2005). Affective issues in library and information science systems work: a content analysis. Library & Information Science Research, 27(4), 453-466.

- Karat, J. (2002). Beyond task completion: evaluation of affective components of use. In J.A. Jacko & A. Sears (Eds.), The human-computer interaction handbook: fundamentals, evolving technologies and emerging applications (pp. 1152-1164). L. Erlbaum Associates Inc.

- Kuhlthau, C.C. (1993). A principle of uncertainty for information seeking. Journal of Documentation, 49(4), 339-355. http://dx.doi.org/10.1108/eb026918

- Lanclos, D. (2016). Embracing an ethnographic agenda: context, collaboration, and complexity. In A. Priestner & M. Borg (Eds.), User experience in libraries: applying ethnography and human-centred design (pp. 21-37).

- Lau, E.P. & Goh, D.H.L. (2006). In search of query patterns: a case study of a university OPAC. Information Processing & Management, 42(5), 1316-1329. http://dx.doi.org/10.1016/j.ipm.2006.02.003

- Lopatovska, I. & Arapakis, I. (2011). Theories, methods and current research on emotions in library and information science, information retrieval and human-computer interaction. Information Processing & Management, 47(4), 575-592. http://dx.doi.org/10.1016/j.ipm.2010.09.001

- Lopatovska, I. (2014). Toward a model of emotions and mood in the online information search process. Journal of the Association for Information Science and Technology, 65(9), 1775-1793. http://dx.doi.org/10.1002/asi.23078

- MacDonald, C.M. (2017). "It takes a village": on UX librarianship and building UX capacity in libraries. Journal of Library Administration, 57(2), 194-214. http://dx.doi.org/10.1080/01930826.2016.1232942

- Mansourian, Y. (2008). Coping strategies in web searching. Electronic Library and Information Systems, 42(1), 28-39.

- Mayer, J.D., Roberts, R.D. & Barsade, S.G. (2008). Human abilities: emotional intelligence. Annual Review of Psychology, 59, 507-536.

- Mckie, I.A.S. & Narayan, B. (2019). Enhancing the academic library experience with chatbots: an exploration of research and implications for practice. Journal of the Australian Library and Information Association, 68(3), 268-277. http://dx.doi.org/10.1080/24750158.2019.1611694

- Nahl, D. (2005). Affective and cognitive information behaviour: interaction effects in internet use. Proceedings of the American Society for Information Science and Technology, 42(1). https://doi.org/10.1002/meet.1450420196

- Peters, T.A. (1989). When smart people fail: an analysis of the transaction log of an online public access catalog. Journal of Academic Librarianship, 15(5), 267-273.

- Poddar, A. & Ruthven, I. (2010). The emotional impact of search tasks. In Proceedings of the third symposium on Information interaction in context (pp. 35-44). ACM Digital Library. http://dx.doi.org/10.1145/1840784.1840792

- Ruotsalo, T., Athukorala, K., Głowacka, D., Konyushkova, K., Oulasvirta, A., Kaipiainen, S. & Jacucci, G. (2013). Supporting exploratory search tasks with interactive user modeling. Proceedings of the American Society for Information Science and Technology, 50(1), 1-10. http://dx.doi.org/10.1002/meet.14505001040

- Sadeh, T. (2007). Time for a change: new approaches for a new generation of library users. New Library World, 108(7/8), 307-316. http://dx.doi.org/10.1108/03074800710763608

- Santos, H. & Vieira, R. (2017). PLN-PUCRS at EmoInt-2017: Psycholinguistic features for emotion intensity prediction in tweets. In A. Balahur, S.M. Mohammad & E. van der Goot (Eds.), Proceedings of the 8th Workshop on Computational Approaches to Subjectivity, Sentiment and Social Media Analysis (pp. 189-192). Association for Computational Linguistics. http://dx.doi.org/10.18653/v1/W17-5225

- Savolainen, R. & Kari, J. (2006). User‐defined relevance criteria in web searching. Journal of Documentation, 62(6), 685-707. http://dx.doi.org/10.1108/00220410610714921

- Scherer, K.R. (2001). Appraisal considered as a process of multilevel sequential checking. In K.R. Scherer, A. Schorr & T. Johnstone (Eds.), Appraisal processes in emotion: theory, methods, research (pp. 92-120). Oxford University Press.

- Slone, D.J. (2000). Encounters with the OPAC: on‐line searching in public libraries. Journal of the American Society for Information Science, 51(8), 757-773. http://dx.doi.org/10.1002/(SICI)1097-4571(2000)51:8%3C757::AID-ASI80%3E3.0.CO;2-T

- Tang, M.C. (2007). Browsing and searching in a faceted information space: a naturalistic study of PubMed users' interaction with a display tool. Journal of the American Society for Information Science and Technology, 58(13), 1998-2006. http://dx.doi.org/10.1002/asi.20689

- Trapido, I. (2016). Library discovery products: discovering user expectations through failure analysis. Information Technology and Libraries, 35(3), 9-26. http://dx.doi.org/10.6017/ital.v35i3.9190

- Wang, Y., Liu, J., Mandal, S. & Shah, C. (2017). Search successes and failures in query segments and search tasks: a field study. Proceedings of the Association for Information Science and Technology, 54(1), 436-445. http://dx.doi.org/10.1002/pra2.2017.14505401047

- Wildemuth, B., Freund, L. & Toms, E. (2013). Designing known-item and fact-finding search tasks for studies of interactive information retrieval. In I. Huvila (Ed.), Proceedings of the second association for information science and technology ASIS&T (European Workshop) (pp. 131-162). Åbo Akademi University.

- Wilson, T.D. (1999). Models in information behaviour research. Journal of Documentation, 55(3), 249-270. http://dx.doi.org/10.1108/EUM0000000007145

- Wirth, W., Sommer, K., Von Pape, T. & Karnowski, V. (2016). Success in online searches: differences between evaluation and finding tasks. Journal of the Association for Information Science and Technology, 67(12), 2897-2908. http://dx.doi.org/10.1002/asi.23389

- Xie, I. & Cool, C. (2009). Understanding help seeking within the context of searching digital libraries. Journal of the American Society for Information Science and Technology, 60(3), 477-494. http://dx.doi.org/10.1002/asi.20988