Proceedings of the Tenth International Conference on Conceptions of Library and Information Science, Ljubljana, Slovenia, June 16-19, 2019

From metrics to representation: the flattened self in citation databases

Lai Ma

Introduction. Citation data are used in bibliometric analyses to describe scholarly communication networks, while citation-based metrics are widely used in the evaluation of research performance at the individual, institutional, and national levels. The meaning of metrics changes when they are used in different contexts and for different purposes. In everyday academic discourse, citation-based metrics have become representations of persons and work.

Method. This conceptual paper examines the nature of metrics and of representation in citation databases and scholarly communication networks, using concepts developed in sociology and in science and technology studies.

Analysis. The analysis consists of four parts: (a) the necessity of representation in databases and the nature of metrics as representation in scholarly communication networks and evaluative practices; (b) metrics as ‘numbers that commensurate’; (c) decontextualisation in citation databases; and (d) quantified control facilitated by information infrastructure.

Conclusions. There is a danger of metrics exerting strategic influence on decisions about academic work. Although most of us cannot change how these metrics are accessed, manipulated, ranked, added or removed in citation databases, we can argue, defend, explain, justify, and protest in debates about their validity and legitimacy in evaluative practices.

Introduction

In 1955, Eugene Garfield published an article entitled ‘Citation Indexes for Science: A New Dimension in Documentation through Association of Ideas’ (1955), in which he proposed ‘a bibliographic system for science literature that can eliminate the uncritical citation of fraudulent, incomplete, or obsolete data by making it possible for the conscientious scholar to be aware of criticisms of earlier papers’. The citation index was designed to facilitate information retrieval and scientific progress. Twelve years after founding the Institute for Scientific Information, Garfield (1972) published ‘Citation analysis as a tool in journal evaluation’, in which he proposed using citation analysis to assist journal selection, especially in academic libraries. Although Garfield pioneered citation analysis, he was always aware of its limitations for evaluating journals and research performance in general; he advised that metrics such as the Journal Impact Factor (JIF) should be used responsibly (Garfield, 1963).

Nevertheless, different citation-based metrics have been developed over the last few decades for estimating and measuring the quality and impact of research articles, journals, and researchers. They are currently widely used in the evaluation of research performance at the individual, institutional, and national levels. That is to say, metrics not only measure; they have also become representations of persons and work. These metrics are collocated, manipulated, ranked, added or removed in citation databases, where one cannot argue, defend, explain, justify, or protest. Persons and work are flattened and objectified in citation databases, and metrics are embedded in departmental reports, strategic plans, grant applications, and university rankings. An automated system does not distinguish between good and bad, or canonical and mediocre, work; it simply ranks by metrics.

In what follows, we consider (a) the necessity of representation in databases and the nature of metrics as representation in scholarly communication networks and evaluative practices; (b) metrics as ‘numbers that commensurate’; (c) decontextualisation and the flattened self in citation databases; and (d) quantified control facilitated by information infrastructure. The paper concludes with a call for reflection and discourse in shaping the social norms and practices pertaining to the use of metrics. It should be noted that the terms ‘metrics’, ‘citation-based metrics’, and ‘indicators’ are used interchangeably in this short paper.

Representation, Citation, and Metrics

In our day-to-day lives, documents representing us abound, most notably passports, driver’s licences, and birth certificates. Sometimes these documents are more real than the physical person, in the sense that one cannot prove one’s identity without them. Passport control does not care how you justify your identity: no matter where you are from and how good a person you are, the documents and the records they hold about you (even though they are only a representation of you) are accepted as a form of validation, and you as a person are not.

Representation plays a very important role on the digital platforms we use every day, including search engines, social media, and enterprise systems in corporations, governments, and universities. This is because databases use data points to construct our digital identities and to build relationships with other entities. In other words, things, events, and persons are all represented by data points in big and small databases. When we create a profile online, or indeed fill in any information online, we provide data points that create our digital selves and records; these are also digital traces of our past and present that can be used to predict our consumer needs and even our eligibility for health insurance and mortgages.

Using data points to construct profiles is not new. For decades, representation has been an important aspect of library and information work. Librarians have been creating catalogues not only of books and other library materials, but also of authors and their bibliographic identities. The practice is traditionally described as authority control, which has become more widely understood with the adoption of ORCID. Library catalogue or web searches would not be possible or efficient without these data processing procedures. Put another way, searching online, including in library catalogues and databases, is not possible without first creating representations.

A citation index is a form of representation and was initially designed as a reference tool for searching related literature. The index allows for bibliometric analysis by establishing relationships between authors and works that reveal the networks of scholars and topics, their strong and weak ties, and even how ideas travel from one person or discipline to another. The nodes in these networks (articles and other publications, scholars, researchers, research centres, institutions, countries) are representations in scholarly communication networks. These analyses do not assess or evaluate the inherent value or quality of persons and work, which are treated as variables. For example, if you were to calculate the distances between European cities and describe the strong and weak ties between them, say, in terms of migration and trade, there would be no qualitative judgement as to which city is more welcoming or offers a better lifestyle. Similarly, there is no qualitative evaluation of persons and work in scholarly communication networks.

Citation-based metrics used for research assessment and ranking, however, constitute a different kind of representation in citation databases and analyses, particularly when they inform decisions about promotion and grant applications, as these social processes involve value judgements about research quality. Strictly speaking, evaluative metrics are quantitative measures of different aspects of publications or individuals. For example, the Journal Impact Factor (JIF) of a journal for a given Journal Citation Reports (Web of Science) year is calculated by dividing the number of citations received in that year by items the journal published in the two previous years by the number of citable items it published in those two years. It is useful for indicating the relatively immediate impact of a journal based on citations. The h-index purportedly indicates the productivity and impact (in terms of citations) of an individual researcher: a researcher has an h-index of h if h of their publications have each been cited at least h times. However, the index has been criticised as flawed (Arnold and Fowler, 2011; Gingras, 2014). Every indicator has its functions and limitations, and different metrics measure different things. But the measures turn into values when they are taken as representations of persons and work: when the metrics are displayed on an academic profile and when they are embedded in annual reports, strategic plans, and so forth.
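To make the two definitions concrete, the following Python sketch computes a two-year JIF and an h-index from raw counts. It is an illustrative assumption, not how Clarivate, Scopus, or Google Scholar actually implement these indicators: the function names are hypothetical, the input figures are invented, and practical questions such as which items count as ‘citable’ are deliberately left out.

```python
from typing import Sequence


def journal_impact_factor(citations_in_year: int, citable_items: int) -> float:
    """Two-year JIF for year Y (illustrative sketch, hypothetical helper).

    citations_in_year: citations received in year Y by items the journal
        published in years Y-1 and Y-2.
    citable_items: number of citable items the journal published in Y-1 and Y-2.
    """
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations_in_year / citable_items


def h_index(citation_counts: Sequence[int]) -> int:
    """Largest h such that h publications have at least h citations each."""
    ranked = sorted(citation_counts, reverse=True)  # most-cited first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites < rank:
            break  # every later paper has even fewer citations
        h = rank
    return h


if __name__ == "__main__":
    # Hypothetical journal: 600 citations in Y to 200 citable items from Y-1 and Y-2.
    print(journal_impact_factor(600, 200))  # 3.0

    # Two hypothetical citation records that look nothing alike...
    researcher_a = [120, 95, 4, 3, 1]  # two heavily cited works
    researcher_b = [3, 3, 3]           # three modestly cited works
    # ...collapse to the same single number.
    print(h_index(researcher_a), h_index(researcher_b))  # 3 3
```

Note that `h_index` receives nothing but a list of integers: publication type, discipline, length, and audience never enter the calculation, which is one concrete sense in which such numbers are decontextualised.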

There is a flawed translation, as Wouters (2014) aptly points out, “from the communication regime into the accountability regime” (p. 60) when we move from representations in bibliometric analyses to representations in research assessment and related uses. Nodes and data points in scholarly communication networks are unique identifiers, whereas the JIF or the h-index has become a representation of persons and work in academic recruitment, promotion, and grant funding, even though these indicators are calculated on different assumptions and are limited in what they can tell us about productivity or quality. The meaning of metrics changes as they travel from citation databases, as quantitative measures, into social space, as indicators of quality.

‘Numbers that commensurate’

The wide use of evaluative metrics for research assessment and for ranking subjects and universities can be examined through the sociology of quantification, in which Espeland and Stevens (2008) conceptualise two kinds of numbers: ‘numbers that mark’ and ‘numbers that commensurate’. On the one hand, ‘numbers that mark’ are like the nodes in scholarly communication networks, social security numbers, and bus route numbers: they are unique identifiers. These numbers do not indicate value or quality; they cannot be ranked for decision making. ‘Numbers that commensurate’, on the other hand, create categories of classification and require considerable social and intellectual development, including infrastructure, because

Commensuration creates a specific type of relationship among objects. It transforms all difference into quantity. In doing so it unites objects by encompassing them under a shared cognitive system. At the same time, it also distinguishes objects by assigning to each one a precise amount of something that is measurably different from, or equal to, all others. Difference or similarity is expressed as magnitude, as an interval on a metric, a precise matter of more or less. (p. 408)

Further, Espeland and Stevens (2008) maintain that systems of numbers require ‘expertise, discipline, coordination and many kinds of resources, including time, money, and political muscle’ (p. 411) and would not be possible without substantial, supportive infrastructures. The authority of numbers can become self-evident when their accuracy or validity rests on a combination of ‘metrological realism’, ‘pragmatism in accounting’, and ‘proof in use’, all of which ‘depends on documenting the consistency of a form of perception and establishing the independence of what is measured from the act of measuring’ (p. 418). In sum, Espeland and Stevens (2008) assert that quantification offers a shared language and discipline that transcends differences.

Evaluative metrics are used in different contexts. In bibliometric analyses, they provide insights into the influence and dissemination of an article: the traces and paths a piece of writing travels. In universities and funding agencies, evaluative metrics can be used to rank applicants; increasingly, the indicators are perceived as objective measures for determining the quality or potential of persons and work. They come to constitute the prestige and quality of an article and its author(s), as it is often presumed that the more citations an article receives, the more impactful it is. In wider social contexts, evaluative metrics are embedded in the methodology of university rankings (which affect student recruitment) and even in assessments of value for money in public spending on universities. Put simply, indicators are used in bureaucratic procedures in place of in-depth analyses or reviews. The metrication of research quality provides a shared language, and its authority has become increasingly self-evident through use because of its presumed accuracy and objectivity, the ease with which it allows comparison and ranking, and the efficiency it offers by minimising argumentation and justification.

Evaluative metrics are ‘numbers that commensurate’. Their objectivity and validity become self-evident because quantitative measures are regarded as accurate representations of the world and, in the context of research assessment, of research quality. The status of the JIF or the h-index as a measure of quality is taken for granted as these indicators are embedded in evaluation practices for decision making, partly because they provide a basis for comparison in a presumably fair manner. The self-evident objectivity and validity of metrics is a reality defined by databases and the analyses they support (Espeland and Stevens, 2008). They are convenient tools for accounting and management. Why so few objections were voiced by academics and researchers until recent publications such as The Metric Tide (Wilsdon, et al., 2015) and the Leiden Manifesto (Hicks, et al., 2015), however, deserves socio-historical examination.

Decontextualization in citation databases

If you ask academics, ‘What is the journal impact factor?’ or ‘What is the h-index?’, many do not know how these indices are calculated or what they actually measure, although the numbers carry meaning for them (a higher number means better quality and/or higher impact of one’s publications), at least for hiring and promotion committees and grant funding panels. Increasingly, they are also data points in academic profiles. Biagioli (2018) describes the use of citation-based metrics elegantly:

Citations have thus become reified as numerical icons of an unspecific, nonpresent value. Because value is left undefined, the citations have moved from being the units of measurement of value to become valuable tokens in and of themselves; that is, the citation has become the value. (p. 258)

He further argues that metrics are used in two incommensurable regimes of evaluation: one, cast as external audit, that is deemed necessary for accountability, and another concerned with internal judgement, broadly understood as peer review, that is, the ‘qualitative, reading-based, “craft” form of evaluation’ (p. 255).

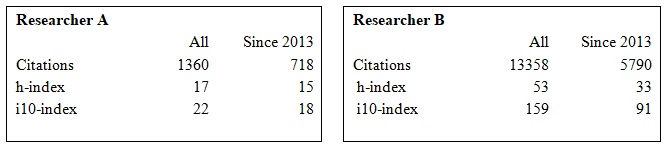

Let’s consider the Google Scholar profiles of two researchers in information science (Figure 1). What can one tell about the quality of their work and its contributions? Is one researcher better than the other? If we cannot answer these questions by comparing the numbers, it is because the metrics are decontextualized representations of scholarly outputs. These representations do not differentiate publication types, disciplines, or even the length of a piece of writing, and they do not consider its context. Who is the audience for the work of these two researchers? What kind of dissemination would be justified as ‘good’? Were certain pieces written in a socio-political era that would incite more responses and citations? How can we compare works that are timely with those that are timeless? In citation databases, a magnum opus in philosophy counts the same as a four-page conference paper. Metrics are decontextualized information in databases and the larger information infrastructure. No matter how much blood, sweat, and tears one has put into one’s publications, persons and work are represented and recognised through these metrics. If we cannot tell much about a researcher’s curiosity, originality, and breadth and depth of knowledge from an ORCID profile, we can tell even less from metrics, as they are a more abstract form of representation.

Few would agree that there is a one-to-one relationship between one’s metrics and oneself. Nevertheless, metrics represent decontextualized information about persons and work, not to mention that they are measures designed for different purposes, each with its own limitations. Persons and work are flattened when these metrics are taken from citation databases and used in research assessment of all forms. One cannot argue, defend, explain, justify, or protest the representations in citation databases; one can only hope that these metrics are used responsibly.

When the legitimacy of metrics becomes normalised in academic institutions and larger social systems, however, there is a danger that one also comes to identify oneself with metrics. ‘The Data—It Is Me!’ (Day, 2014a) is not an overstatement of the status of metrics in the academic lifeworld, for it ‘becomes necessary within such a political economy for an academic to act like the entrepreneur that the neoliberal university desires—advancing his or her career by attempting to grow and tend various forms of ‘evidence’ for impact’ (Day, 2014a, p. 73). There is a growing literature showing how metrics or indicators have become embedded in the everyday research practices of academics and how academics use metrics to compare themselves with their own past records and with others (see, for example, Ma and Ladisch, in press; Müller and de Rijcke, 2017). Yet metrics are aggregates of effects; citations are not representations of impact but its traces, although they have become value in and of themselves (Biagioli, 2018). It is absurd to see metrics used uncritically; it is more absurd still to find academics using metrics to assess their own worth.

Quantified Control and Information Infrastructure

In his investigation of literacy and written records, Clanchy (1993) considers how writing things down, and the privilege of being able to write, can affect us and our relationships with one another. He contends that ‘lay literacy grew out of bureaucracy, rather than from any abstract desire for education or literature’ (p. 19). He suggests that literacy was a technology in and of itself, not least one used to produce an elite class, which eventually also created a class of the illiterate, or laymen, who could not use or understand the words (the jargon) of formal documents in commercial and legal settings. Written records not only detached events from people; they also affected the perception of time: ‘before documentation was properly understood, the measurement of time was related to a variety of persons and events and not to an external standard’ (p. 303). With reference to Shakespeare, Clanchy (1993) observes: ‘The ability to write is a tool of authorities, a method of domination, whether by law or magic, employed by the strong against the weak, hence the sign of a rejection of communal equality’ (p. 124).

On the one hand, Clanchy (1993) raises questions as to how writing affects social dynamics through the emergence of an elite class. On the other hand, he raises the question of whether record keeping, given its purposes, should be considered a tool of authorities and a method of domination. These questions are important when we consider the nature of metrics, as metrics are central to the construction of citation databases and information infrastructure.

If writing is a form of representation, quantification of quality is a further level of abstraction. And if writing can be a tool of authorities and a method of domination, quantification is a subtler and more ideological form of power, especially as the relations between numbers and values are increasingly enacted via algorithms (Burrows, 2012). Authorship and publications have been considered ‘symbolic capital’ in the reward systems of academia (Biagioli, 2006; Cronin, 2005), whereas citations are ‘symbols of the political economies of citation behaviour in academic and scientific research’ (Day, 2014b). There is little doubt that much opportunity, rank, and power are mediated through metrics in academia.

Biagioli (2018) asserts that metrics are a ‘doubly alien’ form of knowledge, for they are ‘both produced and used by people who are not practitioners of the field to which the publications belong’ (p. 252), and, not least, they claim the rhetorical moral upper hand. Burrows (2012), likewise, considers metrics a rhetorical device ‘with which the neoliberal academy has come to enact academic value’ (p. 361). For Espeland and Stevens (2008), ‘Measures create and reproduce social boundaries, replacing murky variation with clear distinctions between categories of people and things’ (p. 414). In the context of citation databases, metrics have become representations of categories such as research-active and inactive, or productive and unproductive, researchers, as well as of the impact and quality of academic writing. For some university managers and funding agencies, a low h-index can be a reason to turn down a promotion or grant application. Metrics are used to justify decision making and management practices without the need to understand the nuances of arguments or the context of a piece of writing. They are representations of representations.

When metrics travel from citation databases to documents such as grant applications, their meanings and uses adapt to different contexts and purposes. In other words, metrics are recontextualised in different settings. As representations, metrics in a scholarly communication network and in a grant application signify different values. Their uses in various settings require the support of infrastructure, from indexing in citation databases to the templates of application forms (Brenneis, 2006), as well as bureaucratic procedures and the socio-political fabric of the wider society. Following the conception of infrastructure developed by Star and Ruhleder (1996), Wouters (2014) argues that infrastructures ‘are shaped by standards and conventions of particular communities and are embodiment of these standards, in turn shaping the conventions of particular communities’, and describes the citation network as ‘the assemblage of databases, publishers, consultancies, bibliometric centers, and users of citation indexes in their various forms’ (p. 61).

Metrics are standards of measurement; metrics are also standards of evaluation (i.e., of whether an article or a researcher is of good quality). The same number, such as an h-index, means different things in different contexts. Researcher A’s h-index of 17 (Figure 1) is a measure, but it also represents the researcher himself. When it is used for ranking, the h-index is him.

Ideally, normative standards (what is good or bad, right or wrong) should be constructed from the bottom up. They should represent the norms and conventions agreed upon by members of a community. The use of metrics as standards of evaluation falls into this category. Whether an h-index represents the productivity and impact of an individual researcher is not an objective but a normative judgement. If the h-index is widely rejected as a good measure (see, for example, Arnold and Fowler, 2011; Gingras, 2014), that consensus should lead to its abandonment in research assessment. The reasons for its deployment by funding agencies and universities as a de facto criterion for research assessment are multi-faceted. Yet such uses can be seen as a top-down approach to exerting strategic control over research and publication practices, particularly when the authority and legitimacy, as well as the techniques and technologies, of citation-based metrics are held by a few rather than by the authors themselves.

In the last few decades, there have been critiques of quantification (and of new public management) and questions concerning the value of universities (see, for example, Collini, 2012; Power, 1997; Readings, 1996; Shore, 2008). There is also a trend towards understanding the use of metrics as quantified control. In many of these discussions, the audit culture is considered an organisational and systemic issue. The social norms and practices that shape the audit culture (or regime of evaluation, or regime of quantification) in academia, however, have not been examined carefully. That is to say, the voices of the authors who produce academic writing and who are being evaluated have rarely been heard.

Conclusion

Once a person is represented by a bundle of metrics, and that bundle speaks for her impact, quality, novelty, originality, and productivity in a system of evaluation, she and her work are flattened in citation databases and information infrastructure. Yet social norms and practices are produced and reproduced not only through cultural knowledge and personal experience in social interactions, but also through the social values embedded in information infrastructures and the types of information that have been collected, stored, and made accessible. The more receptive we are to the values embedded in metrics, and the more we identify with metrics as representations of us, our work, and our worth, the more we reinforce the authority and power of those who maintain the infrastructures (for example, Scopus, Web of Science, and Google Scholar, among others).

No representation in an information infrastructure is perfect. No profile on Scopus, SciVal, or ResearchGate captures the true sense of a real person. As designers and owners of information infrastructure, we ought to consider whether different platforms, including citation databases, are constructed for public good or private gain, or as tools of authority and domination. Furthermore, we ought to be cautious about using metrics as representations of persons and work. Although most of us cannot change how these metrics are collocated, manipulated, ranked, added or removed in citation databases, we can argue, defend, explain, justify, and protest in debates about their validity and legitimacy. It would be a dangerous sign if the information infrastructures producing these metrics were to exert strategic influence on decisions about academic work while time and space for critical discourse and reflection become scarce.

About the author

Lai Ma is an Assistant Professor at the School of Information and Communication Studies, University College Dublin, Ireland. She serves as Director of the Master of Library and Information Studies programme and is a Council member of the Library Association of Ireland. She can be contacted at lai.ma@ucd.ie.

References

- Arnold, D. N. and Fowler, K. K. (2011). Nefarious numbers. Notices of the AMS, 58 (3), 434–437.

- Biagioli, M. (2006). Documents of documents: Scientists’ names and scientific claims. In A. Riles (Ed.), Documents: Artifacts of Modern Knowledge (pp. 127-157). Ann Arbor: The University of Michigan Press.

- Biagioli, M. (2018). Quality to impact, text to metadata, publication and evaluation in the age of metrics. KNOW, 2 (2), 249-275.

- Brenneis, D. (2006). Reforming promises. In A. Riles (Ed.), Documents: Artifacts of Modern Knowledge (pp. 41-70). Ann Arbor: The University of Michigan Press.

- Burrows, R. (2012). Living with the h-index? Metric assemblages in the contemporary academy. The Sociological Review, 60 (2), 355-372.

- Clanchy, M. T. (1993). From memory to written record: England 1066-1307. Blackwell.

- Collini, S. (2012). What Are Universities For? London: Penguin.

- Cronin, B. (2005). The Hand of Science: Academic Writing and Its Rewards. Lanham, Maryland: The Scarecrow Press.

- Day, R. (2014a). “The Data—It Is Me!” (“Les données—c’est Moi!”). In B. Cronin and C. Sugimoto (Eds), Beyond Bibliometrics: Harnessing Multidimensional Indicators of Scholarly Impact (pp. 67-84). The MIT Press.

- Day, R. (2014b). Indexing It All: The Subject in the Age of Documentation, Information, and Data. The MIT Press.

- Espeland, W. N., & Stevens, M. L. (2008). A sociology of quantification. European Journal of Sociology, 49 (3), 401-436.

- Garfield, E. (1955). Citation indexes for science: A new dimension in documentation through association of ideas. Science, 122, 108-111.

- Garfield, E. (1963). Citation indexes in sociological and historical research. American Documentation, 14 (4), 289-291.

- Garfield, E. (1972). Citation analysis as a tool in journal evaluation. Science, 178, 471-479.

- Gingras, Y. (2014). Bibliometrics and Research Evaluation: Uses and Abuses. Cambridge, Massachusetts: The MIT Press.

- Hicks, D., et al. (2015). The Leiden Manifesto for research metrics. Nature, 520, 429-431.

- Ma, L., & Ladisch, M. (in press). Evaluation complacency or evaluation inertia? A study of evaluative metrics and research practices in Irish universities. Research Evaluation.

- Müller, R. and de Rijcke, S. (2017). Exploring the epistemic impacts of academic performance indicators in the life sciences. Research Evaluation, 26 (3), 157-168.

- Power, M. (1997). The Audit Society: Rituals of Verification. Oxford: Oxford University Press.

- Readings, B. (1996). The University in Ruins. Cambridge, Massachusetts: Harvard University Press.

- Shore, C. (2008). Audit culture and illiberal governance: Universities and the politics of accountability. Anthropological Theory, 8 (3), 278-298.

- Star, S. L., & Ruhleder, K. (1996). Steps toward an ecology of infrastructure: Design and access for large information spaces. Information Systems Research, 7 (1), 111-134.

- Wilsdon, J., et al. (2015). The Metric Tide: Report of the Independent Review of the Role of Metrics in Research Assessment and Management. DOI: 10.13140/RG.2.1.4929.1363

- Wouters, P. (2014). The citation: From culture to infrastructure. In B. Cronin and C. Sugimoto (Eds), Beyond Bibliometrics: Harnessing Multidimensional Indicators of Scholarly Impact (pp. 47-66). The MIT Press.