Improving university students’ assessment-related online work

M. Asim Qayyum and David Smith

Introduction. The purpose of this study was to examine how university students read their online assessment tasks and associated readings, with a focus on the essential elements of the assessment task and the online readings. The study scrutinised students’ use of retrieval tools, their manipulation of search terms and results, and their reading patterns in order to investigate assessment support structures.

Method. This mixed methods study involved senior undergraduate students. Students’ behaviour in response to an online assessment task was monitored and digitally recorded, and follow-up interviews were conducted.

Analysis. The observations and interviews were analysed to develop categories and themes describing students’ assessment-related reading behaviour and information tool usage.

Results. Findings suggest that even experienced university students need support when undertaking online assessment because they often miss key elements in assessment task descriptions, resulting in the formation of ineffectual search terms. Online reading was often unfocused as students searched for perceived keywords in-text, and the selection of subsequent search terms was typically derived from recently browsed materials.

Conclusion. Additional scaffolding of assessments and online readings may improve student understanding of the assessment and reading tasks, and result in effective information searching practices leading to better research outcomes.

Introduction

The use of technology in higher education teaching and learning is increasing, and students and academics are now required to engage with the Internet for a range of study-related purposes. Students use the Internet to find information, access class materials, engage with fellow students and instructors, create online content and complete online assessment items. Familiarity with the Internet is, however, often confused with expertise in using it, and many students still experience difficulty and show varying degrees of competence in information literacy (Jones, Ramanau, Cross and Healing, 2010).

Given this shift in higher education learning patterns, the purpose of this research project was to explore how university students read their online assessments and readings, with a focus on the essential elements of the assessment task and the online readings. In particular, the study investigated assessment support structures by examining students’ search behaviour: the information tools they used, the search terms they formed to discover information, how they identified relevant search results and chose resources, and their subsequent online reading patterns.

The definition of information tools used in this research is modified from Qayyum and Smith’s (2015) list. Information tools are computer-based applications that students may use to find information on the Web. Examples include library catalogues and databases, search engines such as Google and Google Scholar, video podcasts such as those found on YouTube, and blogs and wikis in social media. Other examples are Web portals such as Yahoo and MSN.com, or single-organisation portals that package relevant information according to the needs of their clients. These information tools package relevant material from various sources and present it to users in an easy-to-use browse and search format. This study observes students as they carry out research for their assessment tasks, use information tools and read online resources for learning purposes. The findings are therefore expected to enhance our understanding of how the support structure and scaffolding of online assessments and learning materials can be improved.

The significance of this study relates to the way online learning environments have changed study contexts, a change that requires a rethink of teaching practices in higher education. For example, Holman (2011) suggests that millennials search for information differently from older generations, often opting for simplistic techniques influenced by their experience with online search engines. Students have also been found to be heavy users of Google and Wikipedia (Judd and Kennedy, 2010), and they tend to use a restricted number of information searching platforms and search engines (Qayyum and Smith, 2015). It is therefore important that educators gain a better understanding of the online learning behaviour of university students in order to plan and create improved online assessments and associated materials.

Creating online learning exercises appropriate to the academic stage of degree study is important because it provides a scaffold for students whose tertiary study settings and requirements vary. Scaffolding is integral to student learning because it furnishes elements such as regulated and staged support as well as progressive diagnosis (Puntambekar and Hübscher, 2005). In its simplest form, a scaffolded assessment is one that contains hints and prompts for the students. For today’s increasingly complex learning environments, Puntambekar and Hübscher describe scaffolding as support provided in the form of technological tools, peer interactions and classroom discussions. A key component of this study is its focus on the technology used in distance learning environments. In such environments, learning from learners’ online behaviour through observation, interviews and eye tracking devices is a valuable way to optimise material and design it to suit students’ needs.

Review of the literature

A range of factors influence students’ information search behaviour, beginning with the information tool itself. Cothran’s (2011) study of Google Scholar found that graduate students rated its usefulness very highly and showed strong loyalty to it. Other, more complex factors affect how students comprehend and analyse the assessment task. For example, in her study of the search patterns of undergraduate students, Georgas (2014, p. 511) found that ‘the search terms the undergraduates used almost completely mirrored the language of the research topics presented to them’. She also noted that students rarely used synonyms or related terms in their searches. This process can be compared to the initiation or selection stage of Kuhlthau’s (1993) model of the information search process, in which uncertainty exists in the user’s mind amid cautious optimism. Students at this stage of research have been found to favour natural language and keyword queries over advanced or Boolean searches (Georgas, 2014; Lau and Goh, 2006). Rather than focusing on locating the best materials, they tend to rely on the search tool to determine relevance and to use whichever tools are convenient and easy to access (De Rosa, Cantrell, Hawk and Wilson, 2006). This behaviour is also referred to as satisficing (Prabha, Connaway, Olszewski and Jenkins, 2007). Georgas (2014) therefore highlighted the need to include support structures such as topic analysis and terminology development within the assignment task itself in order to improve student search and evaluation behaviour. This recommendation is supported by Thompson (2013, p. 21), who also analysed student technology use patterns and concluded that ‘educators should not assume that students are fully exploiting the affordances of the technology or using it in the most productive way for learning’. Thompson then called on teachers not to rely on students’ familiarity with technology, but to provide explicit instruction in search term development and link evaluation.

The next stage in student research begins when students start reading the discovered material. This stage can be compared to Kuhlthau’s (1993) exploration stage, in which readers try to reduce uncertainty and make sense of the information presented through the technology available to them. At this stage, online search behaviour is shaped by on-screen reading habits, which tend to be mostly nonlinear (Liu, 2005). Online readers prefer to skim material rather than read it in depth (Stoop, Kreutzer and Kircz, 2013; Williams, 1999).

Rather than blaming the online environment itself, some authors have argued that online distractions have a bigger effect on a reader’s focus (Coiro, 2011; Konnikova, 2014). When distractions such as flash banners and images are removed, reading outcomes comparable to those on paper can be achieved, especially if notes are taken simultaneously (Subrahmanyam et al., 2013). The vast amount of information at hand also has the potential to overwhelm students and possibly inhibit in-depth reading (Thompson, 2013; Weinreich, Obendorf, Herder and Mayer, 2008). Electronic resources offer clear advantages, and reliance on them is increasing. Two abilities therefore appear to be critical elements of digital literacy: developing and implementing search strategies to navigate the abundance of information online, and evaluating information sources effectively (Greene, Seung and Copeland, 2014).

Despite popular notions of digital natives (Prensky, 2001), students still fail to realise the full learning potential of the technology available to them. However, in her study of digital natives, Ng (2012) found that students could be encouraged to use such technology more effectively. Ng’s finding implied that teaching staff need to understand the affordances of the respective tools, know how to use them, and model their effective use or teach it explicitly. Others have similarly advocated additional training for university students (Georgas, 2014; Qayyum and Smith, 2015; Thompson, 2013). Students themselves have indicated that they would like additional instruction in the use of technology for university learning, despite their relative familiarity with its use more generally (Kennedy, Judd, Churchward, Gray and Krause, 2008). Furthermore, in a study involving 264 students, Lai, Wang and Lei (2012) found that students’ technology use was overwhelmingly influenced by the conditions created by their lecturers. Margaryan, Littlejohn and Vojt (2011) support this notion, noting that students’ technology-related learning expectations were influenced by their lecturers’ teaching approaches.

Debate exists over the best way forward (Holman, 2011). One option is to mould educational search tools to reflect student behaviour. The other is to improve students’ information literacies so that they make better use of existing tools. Modelling effective search behaviour, providing specialised training and improving the structuring and design of online learning tasks (Goodyear and Ellis, 2008) are also pertinent approaches. Within the context of information searching for assessments, this research observed students’ online search behaviour in order to inform the selection of a technique or intervention aimed at improving their search habits. This study therefore does not seek to fit a single technology to the problem. Rather, it seeks to understand the problem so that a solution can be devised that is independent of any particular technological platform.

This study builds on an earlier phase of the research, which examined the search behaviour of first-year university students enrolled in a transition-to-university subject (Qayyum and Smith, 2015). Lessons from that first phase informed the design of the second phase reported here. This phase is distinctive because most online information search literature focuses on new or first-year students (Holman, 2011) or groups students of different year levels together (Georgas, 2014; Kirkwood, 2008; Prabha et al., 2007). The study reported here instead focused on senior undergraduate students. It sought to understand their online reading behaviour, and the assessment support and scaffolding they need, as they selected and used information tools, formed search terms, analysed search results and read discovered resources related to their assessments.

Methodology

This research was undertaken at Charles Sturt University in Australia with students enrolled in a subject within the Faculty of Arts and Education. To maintain continuity, it adopts a mixed methods approach similar to that used in the preceding phase of the research (see Qayyum and Smith, 2015). This approach was adopted because it allows researchers to investigate in depth the problems that students experience with online assessments and readings in a relatively authentic context, as recommended by George and Bennett (2005). The mixed methods approach also gave the researchers the opportunity to observe university students using computers to search for information relevant to an assessment task (Runeson and Höst, 2009). Each participating student undertook an individual, forty-five-minute information searching session, which was digitally recorded. This session was immediately followed by a retrospective interview of up to ten minutes with each participant. The interview gathered more information on the searching, reading and browsing activities observed during the session. Participants were asked about their usual information searching practices, and any disparities between observed and reported behaviour were queried.

The participants were five volunteer students in their senior (third and fourth) years of an undergraduate programme. They responded to an invitation sent to all students enrolled in an online subject. This subject was selected because some of its enrolled students lived near the university and could easily reach the campus to participate in the research. Five participants were deemed adequate, as Nielsen (2012a; 2012b, chap. 1) recommends testing with only a handful of users and considers even a small sample sufficient to identify the key usability issues. All students were enrolled in the same online subject in the Bachelor of Teaching. As part of the subject’s assessment, they were required to complete an essay assessed against a rubric.

This study was conducted under the university’s ethics guidelines, approximately one to two weeks before the assessment’s due date. Its aim was to study the information behaviour of students as they searched, browsed and read online materials for the assessment. Briefly, the assessment required students to identify and describe some common teaching issues in a context familiar to them. These issues guided the creation of a personal philosophy addressing the key beliefs required by the assessment, which also had to incorporate six main preventative areas. Students were then required to explain how their philosophy addressed the issues identified earlier and to justify their philosophical approach. Data collection took place over a one-week period. During that week, the researchers met individually with each of the five participants at a mutually convenient time, as the participants began the background investigations required for their assessment task. Participants were asked to carry out some of these assessment-focused investigations in a computer laboratory session.

The observation sessions were held in an on-campus computer laboratory, where the participants’ online study techniques could be monitored by recording all screen activity. This included eye movements (indicated by the red dot generated by an eye tracker), the information tools used, the search terms formed, Web resources visited, documents viewed, and mouse and keyboard actions. The computer laboratory is housed in the library, where information searching is one of the main activities. Following Tullis, Fleischman, McNulty, Cianchette and Bergel (2002), a laboratory setting was deemed adequate for this small-scale study because most issues can be identified in this manner. Moreover, a review of the usability literature (Bastien, 2010) supports the notion that laboratory-based studies yield results on a par with remote, field-based testing. All audio in the room was also captured during these sessions, along with the screen recordings.

The recorded observations and interview transcripts were analysed using a constant comparative audit of the data to discover emerging themes, following the data analysis approach of grounded theory (Strauss and Corbin, 1998). Grounded theory was used only to guide the data analysis, as this study did not start with a hypothesis and focused on discovering key emergent themes to inform assessment support structures in higher education. Each new piece of data was checked against the data already analysed in order to form or support an emerging theme. All three researchers individually carried out open coding, examining the data, developing coding rules and assigning their own codes as descriptors of data elements. Themes were then easier to identify when the three analyses were combined, and that process led into axial coding to link the emerging themes.

The three researchers met as a group several times to compare their codes and coding rules, and to refine and agree on terminology. Emerging categories or themes were discussed at length among the three coders as codes continued to cluster around them. Finally, selective coding allowed a consensus to be reached on the following four core themes:

- Information tool selection and use

- Selection and evaluation of search terms

- Selection and review of search results

- Reading, evaluation, and analysis of information resources.

The findings below are reported according to these four themes. Limitations of this study include the small sample size in one particular academic discipline. A further limitation is the use of a concise assessment task, completed under time pressure in a laboratory setting.

Findings of the study

As stated before, the preceding phase of the study investigated the reading and search habits of novice students. That phase found that very few students carefully read the required task. In all but one instance the search engine used was Google, even though some students asserted that they used only Google Scholar or the library database search facility for academic research. The phase one findings also suggested that the few students who re-read, compared sources and read slowly achieved better results in the task. Findings from phase two of this study now follow.

1. Information tool selection and use

Screen recordings indicated that all participating students were familiar with the popular information tools, typically using a library catalogue and its databases as well as Google Scholar. Note that in this study information tools have been defined as computer-based applications that students use to find information on the Web. Recordings show that three of the five students started their search with the library catalogue, and three used some of the catalogue’s advanced search features to narrow their searches. All but one participant used Google Scholar at some point during their research.

During the follow-up interviews, participants described the sequence in which they usually used tools when conducting academic research (see Table 1 below, where P01 represents participant No. 1, P02 participant No. 2, and so on). Four of the five participants indicated that subject guides were one of their most preferred tools because, as P01 stated, ‘[I] start with the subject guide first, because that has recommended texts and sources’. This stated preference, however, differed from their observed behaviour, as no participant consulted their subject guide during the research session. The participants may have been reluctant to access a password-protected website during the recorded session, or they may have been short of time. Nevertheless, this non-usage was the only major discrepancy between the observed information-seeking behaviour and the interview responses of all five participants.

Table 1: Participants’ usual order of information tool use in academic research

| Usual order of use | P01 | P02 | P03 | P04 | P05 |
|---|---|---|---|---|---|
| Tools preferred in academic research | | | | | |
| 1 | Subject guides | Library catalogue and databases | Google Scholar | Subject guides | |
| 2 | Library catalogue | Subject guides | Subject guides | Library catalogue and databases | Google Scholar |
| 3 | Google Scholar | Google Scholar | Sometimes library catalogue & databases | Sometimes Google | Library catalogue |
| 4 | YouTube | Rarely YouTube | Subject guides | | |
| 5 | YouTube | Other people | | | |
| Tools not preferred in academic research | | | | | |
| Social media | YouTube | Social media | Social media | YouTube | |
| Social media | Google Scholar | | | | |

The screen recordings and eye-tracking data showed that Google Scholar was highly favoured by two of the five participants. As one of them reasoned, ‘I find Google Scholar a little bit easier to use, it tends to be a little bit more intuitive and it tends to have a bit more smarts behind the interpretation of keywords’. Another chose to start with Google in order to understand the concepts better, saying ‘if you just go to standard Google it’s more in layman’s terms and it’s really easy to understand and then you can get your ideas, get your concepts and then build off it with the Google Scholar’.

In the interviews, three of the five participants stated that they preferred library databases when a targeted search was required, or when they had a good understanding of what they were looking for. Three participants also said that the library would be their preferred place to go if they were looking for a book.

For all five participants, social media was still, as the name suggests, for social purposes, and they stated that they would use it only if required to in coursework. However, two participants qualified this statement, noting that YouTube may be of some academic use in certain circumstances, such as tool training.

2. Selection and evaluation of search terms

Participants’ formulation and manipulation of search terms varied. For example, one participant formed a search term after reading an entry in an article’s reference list, while another drew on the references provided in the learning materials. Most of the time, however, participants simply created search terms after reading the assessment requirements. After forming an initial search term, participants rephrased their terms four to five times on average as they expanded their searches for relevant materials. Individual behaviour differed considerably. One participant revised the search terms as many as seven times, while another revised them only once.

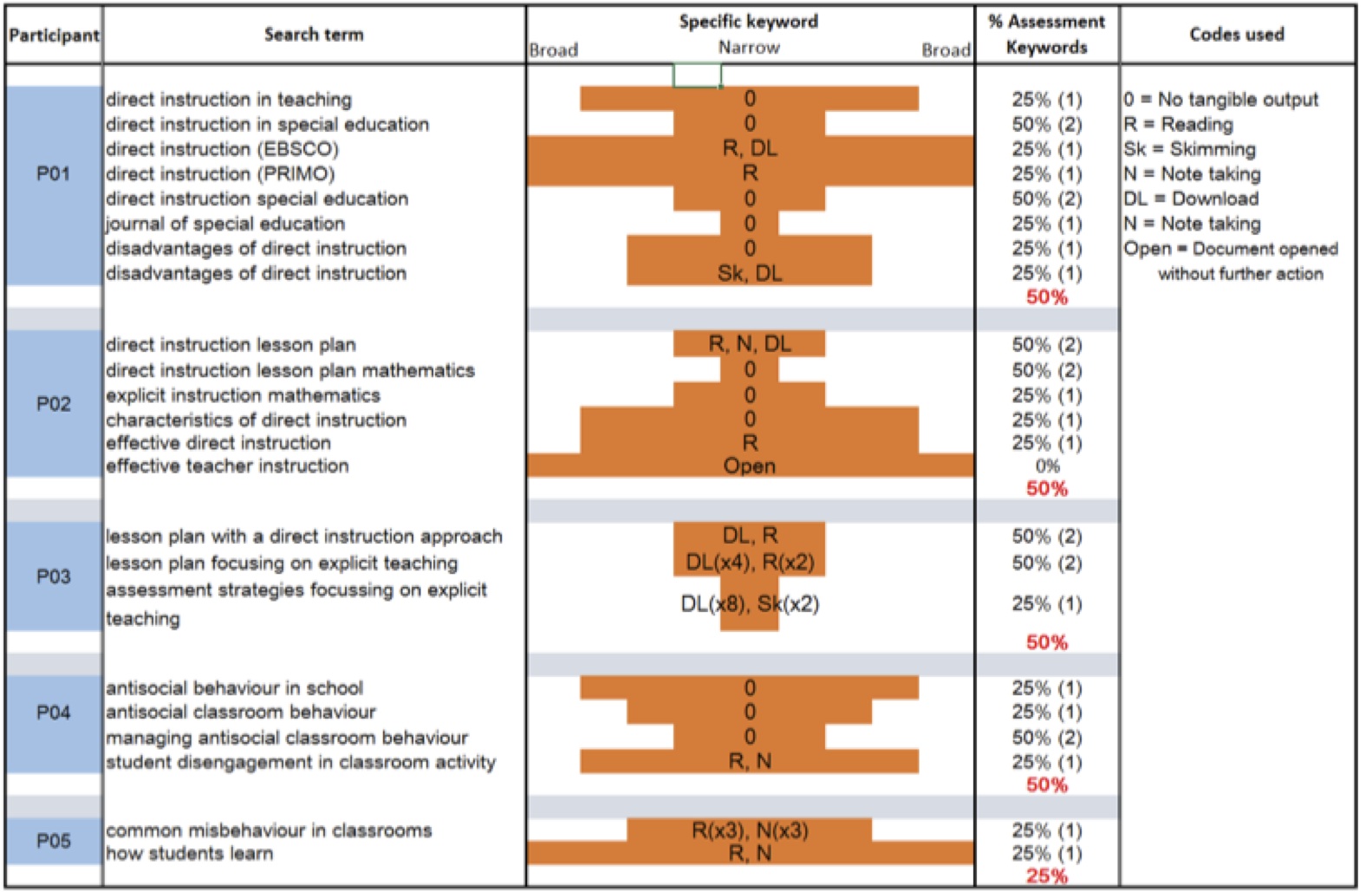

Search term use and refinement behaviour is captured in Figure 1. P01 started with a broad search, which proved ineffectual, as indicated by zero downloads. The search was then narrowed but still did not result in an article download. The third and fourth attempts, made in library databases with broad terms, discovered some relevant resources, which were read [R] and downloaded [DL]. Three more unproductive attempts followed before the participant found relevant material using a search term more closely related to the topic. P02 and P04 also made a few unsuccessful searches that led to no reading or downloads. Only P03 appeared to form a focused and relevant search term from the outset and use it to find and read useful materials.

Figure 1: Search term formation and refinement by participants

During the interviews, three participants stated that they created a mental model of what needed to be searched, based on the assessment and related learning materials. They refined their search terms once they had reviewed the search results and read some of the literature identified. For these participants, the purpose of the assessment was also not immediately clear, so they engaged in some initial browsing to gain clarity. This aspect of mental modelling is discussed in more detail in the next section.

3. Selection and review of search results

Eye-tracking data on participants’ search behaviour while using Google Scholar revealed that participants read higher-placed titles and their associated blurbs more than lower-placed titles (note: four of the five participants used Google or Google Scholar). This pattern was typical when participants began their search. Once they delved further for information, they also looked at, and read, the lower-placed results on the page.

Once participants reached the abstract of an article, only one read it carefully before downloading the full text. Typically the first half of an abstract was read, while the rest was mostly skimmed, scanned or even skipped, especially if the reader decided to abandon the article after reading the abstract. Students placed considerable value on abstracts during their information searches, and one participant mentioned reading the abstract to determine the worth of an article. Overall, the abstract was the most closely read section of an article.

The behaviour of three participants indicated that they created mental connections between the discovered information, the task and their prior learning before formulating the next search string or keywords. Although this approach may not be the same for everyone, some similarity was observed among these three individuals each time they began a new search. For example, P04 described their mental search approach as, ‘I kind of just followed the trail really and I don’t really think in a very logical sequence. I tend to just be really messy, but in my mind it’s all making connections to prior learning’, and ‘[after doing some research] I started to get more clarity around what the purpose of the assessment is’. P05 stated, ‘I just went through the five things [in the article], broke them down and then related it back [to the search].’ A common element in the approach of all three participants was that they evaluated the search blurbs on the first page of results before deciding whether to click on a link for further information.

4. Reading, evaluation, and analysis of information resources

Reading and evaluation behaviour

On average, each participant was observed engaging in deep reading only once. Deep reading was typically indicated by eye movements showing that a person read the text slowly and then perhaps re-read it, as if trying to make sense of what was written. Otherwise, participants mostly skimmed and scanned as they moved quickly through texts, pausing only at words such as ‘summarising’ or ‘concluding’, or at acronyms. P03 described this behaviour as, ‘Briefly [I] read them [articles] online until I come across some key points that are going to be beneficial’. P05 stated that, ‘Probably I would try and read the question and then go through and do the reading …. possibly pick your key points out of the reading and then do it that way.’

After the abstract, the participants who continued reading an article (typically four of them) quickly scanned the contents list and the introductory sections, such as the literature review. Actual reading occurred only occasionally and in short spells. The last parts of these early sections were usually skipped. The middle sections of an article, such as the methods and results, were usually read at the beginning, but most of their content was skipped. Later parts of an article, such as the discussion section, were mostly skipped entirely. At most, a reader would read a little text at the beginning of a section, or some random text in the middle if it caught their eye while scanning the document. Finally, three participants skimmed the references, and, as stated before, the search queries of two participants were influenced by references found in resources.

For the two participants who read text in detail, the eye-tracking results indicated that their favourite reading places were bulleted points, italicised parts, or blocks of text where they stopped skimming and quickly read. Reading usually followed a non-sequential pattern. Titles and headings were mostly read, or at least skimmed, and two participants read the top headings and the first line following them. Of the illustrations, tables attracted less attention than figures. Two participants skipped tables and three skimmed them, whereas four participants skimmed figures when they encountered them.

Analysis of discovered information

Note-taking was observed for three participants: two took notes electronically while searching and browsing, and the third took notes on paper. Preferences for paper or electronic note-taking were similarly split, and the options available in this research did not suit everyone. For example, P02’s favourite note-taking device was a Kindle. P01, who did not take any notes during the session, stated that, ‘if it's for an assessment task I usually start by like putting the title of the journal, the title of the book and then I take some notes and usually put the records under it, just do that in one Word document’. The observed notes mostly consisted of simply jotting down the headings or titles associated with an article. Only one person displayed elements of synthesising information while developing notes.

Discussion and conclusions

The findings section above documents the information search procedures adopted by participants in this research project. Participants started by reading the assessment task and chose an appropriate or favourite search platform. They then created search terms and modified them based on further reading and search results, and finally analysed the discovered information. Even with all the modern tools available to students, the information discovery and manipulation process still largely follows the six stages of Kuhlthau’s (1993) model, which is now more than two decades old: initiation, selection, exploration, formation, collection and presentation.

The findings of this study first focused on the information tools that participants used to start their information search process. Only a limited range of information tools was frequently used by the participants, namely Google Scholar, the library catalogue and Google. Use of the advanced search features offered by these tools was also very limited. This supports previous research suggesting a need to scaffold information search skills for university students (Smith and Qayyum, 2015). With such scaffolding in place, students should be better able to choose information tools carefully, read the online assessment task more closely and identify relevant keywords. They should then be able to use those keywords to form effective search terms and, finally, to conduct their searches using the advanced search features offered by the information tools.

If the information search was successful, participants usually downloaded or opened an online document and read it. Reading online documents with good understanding is another key part of the required scaffolding. As this research indicates, students often scanned the reading material quickly. They stopped to read only when they detected a perceived keyword or key point, or where they expected key text to be, e.g. in titles, headings or bulleted lists. Such novice reading skills reinforce the need to train students in practices that develop information literacy skills and online reading capabilities. Students need training both in the discrete skills of reading (decoding, sight-word recognition, reading fluency and accuracy) and in the top-down cognitive processes involved in comprehending texts (Roman, Thompson, Ernst and Hakuta, 2016, p. 14). The responsibility does not rest with students alone. This research also supports the literature indicating that instructors need to structure online readings to improve students' in-depth reading and study practices, and so motivate students to sustain those practices online (Ji, Michaels and Waterman, 2014). Student support structures should therefore include training that helps students become better readers. They should also include a rethink and redesign of assessments and online texts by teachers, writers and publishers, to accommodate the keyword-hunting style of reading that students adopt.

Exploring keyword formation in more depth, the findings indicated that two participants used the search results to refine and modify their search terms, while another two referred back to the learning materials before modifying their search terms. The latter appeared to be the better strategy, as these participants were observed to find useful information quickly. By contrast, one participant revised their search terms five times without success. This practice should be discouraged through improved search skills training, perhaps in workshops run by the institution's librarians. Thompson (2013) reports similar searching trends from a survey of 388 first-year university students and recommends that students be given explicit instruction in forming search terms and evaluating the information they find. Therefore, any scaffolding of students' information skills should include training students to refer to appropriate learning materials before forming search strategies. That way, all the keywords relevant to the search will already be in the student's view, ready to be translated into actual search queries.

A final part of assessment scaffolding would be to encourage students to take notes, electronically or on paper, as they read online. The shift in reading habits from paper to screen may have sidelined index-card note-taking, but the need for notes remains as strong as ever. Moreover, note-taking should involve analysing the discovered information on the go rather than just jotting down words from headings or perceived keywords. P04 followed the latter practice and had little success in forming good keywords for subsequent searches. P05 took better notes than the other participants, including some synthesis of the discovered information, and as a result had much more success in forming good search keywords. Therefore, instructors should encourage effective note-taking, especially in electronic environments, preferably supported by note-taking training exercises.

To conclude, the foremost observation of this study is that the support structures and systems currently used for online assessment tasks in distance learning environments need improvement. Specifically, task descriptions and online reading materials should be better structured to scaffold assessments and achieve better searching, reading and learning outcomes. Searching and reading strategies can easily be discussed in a traditional classroom setting, but this is usually not possible in distance settings. Training must therefore be carried out separately, or cues should be built into the tasks themselves to scaffold the assessment. Specific guidance in the task should help students select better search terms, so that they engage with the online content and stay connected with it for effective information discovery. A good task scaffold should also reduce behaviours such as relying on trial and error to come up with better search terms, or quickly scanning online documents without any significant outcome.

Given that the findings from this research suggest possible benefits of scaffolding online reading and assessment tasks, the researchers plan an additional phase of the study exploring an intervention in the online assessment process. This intervention will require students to use keyword visualisation software to analyse texts, and the project will examine its impact on their searching and reading practices.

Acknowledgements

This research project was funded by a grant from the Faculty of Arts and Education at Charles Sturt University. We acknowledge and thank Simon Welsh for his support and work done during data analysis. We also acknowledge and thank Natasha Hard who worked as a research assistant during the write-up phase of this project. Finally, we thank the anonymous reviewers for their detailed and constructive comments.

About the authors

Muhammad Asim Qayyum is a Lecturer in the School of Information Studies at Charles Sturt University, Australia. Much of Asim's teaching is in the areas of knowledge management and information architecture, while his research focuses on two topics: the use of technology to improve student learning, and the role of wisdom in knowledge work. Asim can be contacted at aqayyum@csu.edu.au

David Smith is Head of the School of Education in the Faculty of Arts and Education at Charles Sturt University, Australia. David has developed mobile apps and has expertise in online learning design. His interests include the integration of technology in learning and the effective implementation of eLearning strategies in the education and training sector. He can be contacted at davismith@csu.edu.au

References

- Bastien, J. M. C. (2010). Usability testing: a review of some methodological and technical aspects of the method. International Journal of Medical Informatics, 79(4), e18-e23.

- Coiro, J. (2011). Talking about reading as thinking: modeling the hidden complexities of online reading comprehension. Theory Into Practice, 50(2), 107-115.

- Cothran, T. (2011). Google Scholar acceptance and use among graduate students: a quantitative study. Library & Information Science Research, 33(4), 293-301.

- De Rosa, C., Cantrell, J., Hawk, J., & Wilson, A. (2006). College students’ perceptions of libraries and information resources: a report to the OCLC membership. Dublin, OH: OCLC Online Computer Library Center. Retrieved from http://www.oclc.org/content/dam/oclc/reports/pdfs/studentperceptions.pdf (Archived by WebCite® at http://www.webcitation.org/6ulJ9YpUf).

- Georgas, H. (2014). Google vs. the library (part II): student search patterns and behaviors when using Google and a federated search tool. portal: Libraries and the Academy, 14(4), 503-532.

- George, A. L., & Bennett, A. (2005). Case studies and theory development in the social sciences. Cambridge, MA: MIT Press.

- Goodyear, P., & Ellis, R. A. (2008). University students’ approaches to learning: rethinking the place of technology. Distance Education, 29(2), 141-152.

- Greene, J. A., Seung, B. Y., & Copeland, D. Z. (2014). Measuring critical components of digital literacy and their relationships with learning. Computers & Education, 76, 55-69.

- Holman, L. (2011). Millennial students' mental models of search: implications for academic librarians and database developers. The Journal of Academic Librarianship, 37(1), 19-27.

- Ji, S. W., Michaels, S., & Waterman, D. (2014). Print vs. electronic readings in college courses: cost-efficiency and perceived learning. The Internet and Higher Education, 21, 17-24.

- Jones, C., Ramanau, R., Cross, S., & Healing, G. (2010). Net generation or digital natives: is there a distinct new generation entering university? Computers & Education, 54(3), 722-732.

- Judd, T., & Kennedy, G. (2010). A five-year study of on-campus internet use by undergraduate biomedical students. Computers & Education, 55(4), 1564-1571.

- Kennedy, G. E., Judd, T. S., Churchward, A., Gray, K., & Krause, K.-L. (2008). First year students’ experiences with technology: are they really digital natives? Australasian Journal of Educational Technology, 24(1), 108-122.

- Kirkwood, A. (2008). Getting it from the Web: why and how online resources are used by independent undergraduate learners. Journal of Computer Assisted Learning, 24(5), 372-382.

- Konnikova, M. (2014, July 16). Being a better online reader. The New Yorker. Retrieved from http://www.newyorker.com/science/maria-konnikova/being-a-better-online-reader (Archived by WebCite® at http://www.webcitation.org/6ulJIOqe6).

- Kuhlthau, C. C. (1993). A principle of uncertainty for information seeking. Journal of Documentation, 49(4), 339-355.

- Lai, C., Wang, Q., & Lei, J. (2012). What factors predict undergraduate students' use of technology for learning? A case from Hong Kong. Computers & Education, 59(2), 569-579.

- Lau, E. P., & Goh, D. H. L. (2006). In search of query patterns: a case study of a university OPAC. Information Processing & Management, 42(5), 1316-1329.

- Liu, Z. (2005). Reading behaviour in the digital environment. Journal of Documentation, 61(6), 700-712.

- Margaryan, A., Littlejohn, A., & Vojt, G. (2011). Are digital natives a myth or reality? University students’ use of digital technologies. Computers & Education, 56(2), 429-440.

- Ng, W. (2012). Can we teach digital natives digital literacy? Computers & Education, 59(3), 1065-1078.

- Nielsen, J. (2012a). How many test users in a usability study? Retrieved from http://www.nngroup.com/articles/how-many-test-users/ (Archived by WebCite® at http://www.webcitation.org/6ulJOAOAW).

- Nielsen, J. (2012b). Mobile usability. Berkeley, CA: New Riders.

- Prabha, C., Connaway, L. S., Olszewski, L., & Jenkins, L. R. (2007). What is enough? Satisficing information needs. Journal of Documentation, 63(1), 53-74.

- Prensky, M. (2001). Digital natives, digital immigrants, part 1. On the Horizon, 9(5), 1-6.

- Puntambekar, S., & Hübscher, R. (2005). Tools for scaffolding students in a complex learning environment: what have we gained and what have we missed? Educational Psychologist, 40(1), 1-12.

- Qayyum, M. A., & Smith, D. (2015). Learning from student experiences for online assessment tasks. Information Research, 20(2), paper 674. Retrieved from http://InformationR.net/ir/20-2/paper674.html (Archived by WebCite® at http://www.webcitation.org/6ZGFnGeN5)

- Roman, D., Thompson, K., Ernst, L., & Hakuta, K. (2016). WordSift: a free web-based vocabulary tool designed to help science teachers in integrating interactive literacy activities. Science Activities: Classroom Projects and Curriculum Ideas, 53(1), 13-23.

- Runeson, P., & Höst, M. (2009). Guidelines for conducting and reporting case study research in software engineering. Empirical Software Engineering, 14(2), 131-164.

- Smith, D. J., & Qayyum, M. A. (2015). Using technology to enhance the student assessment experience. World Academy of Science, Engineering and Technology International Journal of Social, Education, Economics and Management Engineering, 9(1), 340-343. Retrieved from https://waset.org/Publication/using-technology-to-enhance-the-student-assessment-experience/10000611

- Stoop, J., Kreutzer, P., & Kircz, J. G. (2013). Reading and learning from screens versus print: a study in changing habits. New Library World, 114(9/10), 371-383.

- Strauss, A., & Corbin, J. (1998). Basics of qualitative research: techniques and procedures for developing grounded theory. Thousand Oaks, CA: Sage Publications.

- Subrahmanyam, K., Michikyan, M., Clemmons, C., Carrillo, R., Uhls, Y. T., & Greenfield, P. M. (2013). Learning from paper, learning from screens: impact of screen reading and multitasking conditions on reading and writing among college students. International Journal of Cyber Behavior, Psychology and Learning (IJCBPL), 3(4), 1-27.

- Thompson, P. (2013). The digital natives as learners: technology use patterns and approaches to learning. Computers & Education, 65, 12-33.

- Tullis, T., Fleischman, S., McNulty, M., Cianchette, C., & Bergel, M. (2002). An empirical comparison of lab and remote usability testing of Web sites. Paper presented at the Usability Professionals Association Conference, Orlando, FL.

- Weinreich, H., Obendorf, H., Herder, E., & Mayer, M. (2008). Not quite the average: an empirical study of Web use. ACM Transactions on the Web (TWEB), 2(1), 5.

- Williams, P. (1999). The net generation: the experiences, attitudes and behaviour of children using the Internet for their own purposes. Aslib Proceedings, 51(9), 315-322.