A social diffusion model of misinformation and disinformation for understanding human information behaviour

Natascha A. Karlova and Karen E. Fisher

The Information School, University of Washington, Seattle, WA 98195

Introduction

In Japan after the March 2011 earthquake, radiation leaked from the Fukushima nuclear power station. The Japanese government declared they are working on a cleanup effort, but people question whether this is true (Nagata 2011), and are uncertain about the safety of returning to their homes (Fackler 2011).

In spring 2012 European economies are struggling, and German Chancellor Merkel is making political moves to bring other countries in line with German fiscal policies. Consequently, there are also growing concerns about Germany’s power in the European Union (Ehlers et al. 2011), and people are wondering about the implications of strong German influence on Eurozone economic policies (Eleftherotypia 2011).

These examples demonstrate possible consequences of inaccurate and deceptive information: suspicion, fear, worry, anger, and decisions resulting from these consequences. As gossip and rumours abound, it is difficult to distinguish among information, misinformation, and disinformation. People enjoy sharing information, especially when it is ‘news’. Although they may not believe such information themselves, they take pleasure in disseminating it through their social networks. In this way, misinformation (inaccurate information) and disinformation (deceptive information) easily diffuse, over time, across social groups. Social media, such as Twitter and Facebook, have made dissemination and diffusion easier and faster. High-impact topics, for example, health, politics, finances, and technology trends, are prime sources of misinformation and disinformation in wide-ranging contexts, for example, business, government, and everyday life.

Despite the plethora of inaccurate and misleading information in the media and in online environments, traditional models of information behaviour seem to suggest a normative conception of information as consistently accurate, true, complete, and current, and they neglect to consider whether information might be misinformation (inaccurate information) or disinformation (deceptive information). To better understand the natures of misinforming and disinforming as forms of information behaviour, we build from such normative models and propose our own model of misinformation and disinformation. Our model illustrates how people create and use misinformation and disinformation. In our discussion, we argue that misinformation and disinformation need to be included in considerations of information behaviour, specifically elements of information literacy, because inaccuracies and deceptions permeate much of the world’s information.

The purpose of our paper is thus to: 1) demonstrate how misinformation and disinformation are most usefully viewed as forms of information; 2) illustrate the social diffusion process by which misinforming and disinforming function as types of information behaviour; and 3) elucidate how misinforming and disinforming can be modeled to account for vivid examples in different domains.

Extending information

Information scientists have long debated the nature of information: what it is, where it comes from, the kinds of actions it affords humans, etc. Misinformation and disinformation tend to be limited and understudied areas in efforts to understand the nature of information (Rubin 2010; Zhou and Zhang 2007). From its earliest stages, information science has sought to define information, beginning with Shannon and Weaver’s (1949) idea that information can be quantified as bits of a signal transmitted between one sender and one receiver. This model does not clarify understanding misinformation and disinformation because they may carry multiple, often simultaneous levels of bits and signals (as opposed to one signal), and because describing misinformation and disinformation as merely ‘noise’ ignores their informativeness (discussed below). Later, Taylor (1962) argued for the need to study 'the conscious within-brain description of the [information] need' (p. 391). Belkin and Robertson (1976) notably advocated for a view of information as 'that which is capable of transforming structure' (p. 198) of information inside a user’s mind. Dervin and Nilan (1986), in a landmark ARIST paper, contended that information ought to be viewed 'as something constructed by human beings' (p. 16).

Tuominen and Savolainen (1997) articulated a social constructionist view of the nature of information as a 'communicative construct which is produced in a social context'. They focused on discursive action as the means by which people construct information. A constructionist view of information is useful when discussing misinformation and disinformation because it emphasizes social context and conversations among people as ways of determining what information is and what can be informative. Misinforming and disinforming are information activities that may occur in discourse between people, and so, through this conversational act, misinformation and disinformation can be information people may use to construct some reality. In this way, misinformation and disinformation are extensions of information.

Misinformation

Unfortunately, misinformation does not seem to earn the attention it deserves. While the Oxford English Dictionary defines misinformation as 'wrong or misleading information', few authors have discussed the topic in detail. Authors commonly cite the OED definition without further analysis or discussion (e.g., Bednar and Welch 2008; Stahl 2006). Fox’s (1983) pioneering work on misinformation clearly delineated the relationship between information and misinformation. Fox (1983) stated that 'information need not be true'; that is, there is no reason that information must be true, so misinformation may be false. He wrote that 'misinformation is a species of information' and, thus, drew the relationship clearly: misinformation, albeit false, is still information and, therefore, can still be informative.

In an article about the nature of information, Losee (1997) stated that misinformation may be simply information that is incomplete. Zhou and Zhang (2007) added to this discussion with additional types of misinformation, including concealment, ambivalence, distortion, and falsification (because they do not disambiguate between misinformation and disinformation). However, incomplete and even irrelevant information may still be true, accurate, current, and informative, and may therefore meet many of the same qualifications commonly accepted for information. Karlova and Lee (2011) added that misinformation may also be inaccurate, uncertain (perhaps by presenting more than one possibility or choice), vague (unclear), or ambiguous (open to multiple interpretations). Information that is incomplete may also be a form of deception, which frequently qualifies as disinformation.

Disinformation

The Oxford English Dictionary describes disinformation as, 'deliberately false information' and states that the term, disinformation, comes from a Russian term, dezinformacija, coined in 1949. Given the political and cultural milieu in the Soviet Union at that time, the strong association between disinformation and negative, malicious intent probably developed as a result of Stalinist information control policies. Since the term disinformation has been created relatively recently, perhaps it is not surprising that not much work has explored the concept. Authors typically treat disinformation as a kind of misinformation (Losee 1997; Zhou et al. 2004). Fallis (2009), however, analysed disinformation to uncover sets of conditions under which disinformation may occur. He concluded that, 'while disinformation will typically be inaccurate, it does not have to be inaccurate. It just has to be misleading. So, disinformation is actually not a proper subset of inaccurate information [misinformation]' (p. 6). Fallis argued that disinformation can be misleading, in the context of a situation. His analysis of disinformation builds further support for a subjective, constructionist view of information, as articulated by Hjørland (2007).

Although disinformation may share properties with information and misinformation (e.g., truth, accuracy, completeness, currency), disinformation is deliberately deceptive information. The intentions behind such deception are unknowable, but may include socially-motivated, benevolent reasons (e.g., lying about a surprise party, adhering to cultural values, demonstrating community membership, etc.) and personally-motivated, antagonistic reasons (e.g., manipulating a competitor’s stock price, controlling a populace, ruining someone’s reputation, etc.). Since misinformation may be false, and since disinformation may be true, misinformation and disinformation must be distinct, yet equal, sub-categories of information.

| | Information | Misinformation | Disinformation |
|---|---|---|---|
| True | Y | Y/N | Y/N |
| Complete | Y/N | Y/N | Y/N |
| Current | Y | Y/N | Y/N |
| Informative | Y | Y | Y |
| Deceptive | N | N | Y |

Table 1: Features of information, misinformation, and disinformation
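
The feature table above can also be read as a small lookup structure. The following is a minimal sketch, not part of the original analysis: the names `Features`, `FEATURE_TABLE`, and `consistent_categories` are hypothetical, and encoding 'Y/N' as `None` (meaning 'either value is possible') is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Features:
    # True = 'Y', False = 'N', None = 'Y/N' (either value is possible).
    true: Optional[bool]
    complete: Optional[bool]
    current: Optional[bool]
    informative: Optional[bool]
    deceptive: Optional[bool]


# Hypothetical encoding of the rows and columns of the table.
FEATURE_TABLE: Dict[str, Features] = {
    "information": Features(True, None, True, True, False),
    "misinformation": Features(None, None, None, True, False),
    "disinformation": Features(None, None, None, True, True),
}


def consistent_categories(observed: Dict[str, bool]) -> List[str]:
    """Return the categories whose table entries do not contradict `observed`."""
    matches = []
    for category, feats in FEATURE_TABLE.items():
        if all(
            getattr(feats, name) is None or getattr(feats, name) == value
            for name, value in observed.items()
        ):
            matches.append(category)
    return matches


false_but_sincere = consistent_categories({"true": False, "deceptive": False})
true_but_deceptive = consistent_categories({"true": True, "deceptive": True})
true_and_sincere = consistent_categories({"true": True, "deceptive": False})
```

Under this encoding, a true statement made without intent to deceive remains consistent with both the information and the misinformation columns, which mirrors the point that truth alone does not separate the categories.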

Informativeness of misinformation and disinformation

How can it be that we can be informed by misinformation and disinformation? Buckland (1991) wrote that, '[b]eing “informative” is situational' (double quote marks in original). In this sense, informativeness depends on the meaning of the informative thing (e.g., sentence, photo, etc.). Different situations imbue different meanings on different things, and these meanings may depend on the knowledge of the receiver. Buckland’s idea illustrates why misinformation can be difficult to define and to identify: what is misinformation in one situation might not be in another because the meanings might be different. The act of disinforming may be weakly situation-dependent compared to misinforming because the intent of the speaker is a constant, even if the speaker does not act on that intent. A deceiver will intend to deceive, regardless of the situation, but someone who simply misinforms may not intend to do so.

However, the success, or failure, of the deceiver may be strongly situation-dependent if some aspect of the world changes, unbeknownst to the deceiver, between the time that he speaks and the time that the receiver acts upon the disinformation. For example, Jack wishes to deceive Sarah (for unknown reasons) and tells her that the movie starts at 15:30, even though he knows that it starts at 15:00. However, Jack is unaware that the movie theater projector is broken and the movie start is delayed by 30 minutes. When Sarah arrives in time for a 15:30 showing, she may not realize that Jack made either a false or an inaccurate statement. This case illustrates two important aspects of disinformation: the deceiver may fail to disinform, despite intent to do so, and the informativeness of (dis)information may depend on the situation.

In his influential article, Buckland (1991) advocated the view that, depending on the situation, information is a thing, a process, and knowledge because he focused on wanting to understand informativeness. Misinformation and disinformation may also be things, processes, or knowledge, and therefore informative, by implying or revealing information. The speaker of misinformation may reveal information (perhaps accidentally) or may imply information or a state of the world. Misinformation tends to be accidental, but the informativeness of it may depend on the relationship between the speaker and the receiver. Disinformation could possibly be more informative than misinformation, perhaps because any reveal or implication may be deliberate.

Consider an instance in which a speaker provides partially distorted information to the receiver (e.g., 'The new phone comes out next year' when, in fact, the new phone comes out this year). In this case, the receiver is partially informed about the fact that a new phone is coming out. Disinformation may reveal the malicious intent of the speaker. If the receiver happens to know that the new phone comes out this year, she might suspect that the speaker is intending to deceive her. Here, the receiver is informed about the potential intent of the speaker, which is external to the message actually being delivered.

Additionally, disinformation (as well as misinformation) may reveal the ignorance of the speaker. Disinformation may imply partial disclosure or a false state of the world. For example, imagine that Alice is an expert on giraffes and Erik, perhaps unaware of the extent of her expertise, confidently tries to convince her that giraffes are officially listed as an endangered species. From this exchange, Alice might: 1) suspect that Erik is trying to deceive her and start questioning his intent and/or 2) believe that Erik is simply misinformed about the state of the world (both of these responses are equally possible). These hypothetical examples suggest that perhaps misinformation and disinformation provide different levels of informativeness, depending on the situation.

Model

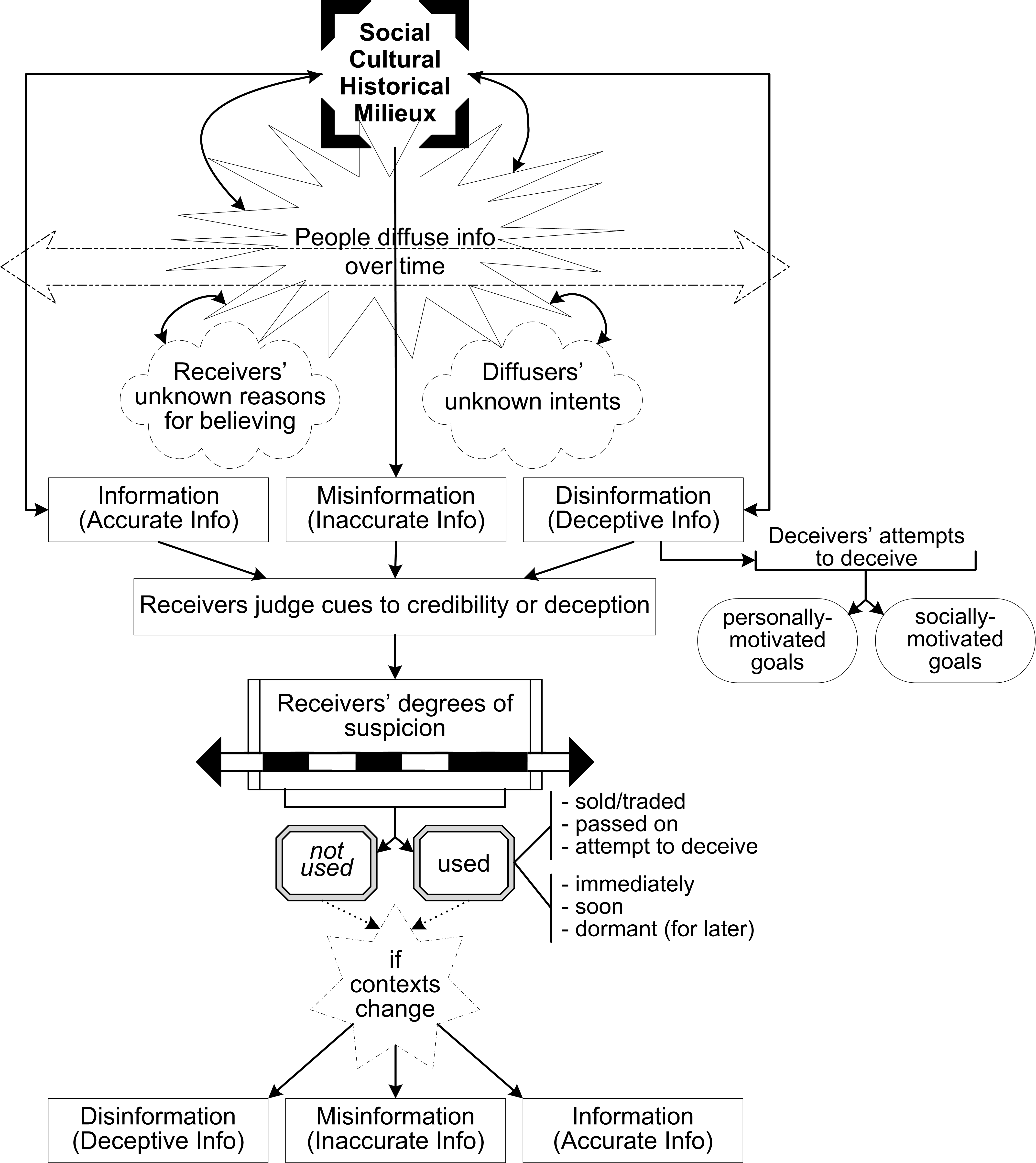

The field of information behaviour has a strong tradition of model-building to help explain ideas (e.g., Fisher et al. 2005), and we harness this tradition to introduce a social diffusion model of misinformation and disinformation. In order to accurately describe misinforming and disinforming as information behaviour, the model (Figure 1) depicts information, misinformation, and disinformation as products of social processes, illustrating how information, misinformation, and disinformation are formed, disseminated, judged, and used in terms of key elements, beginning with milieux.

Figure 1: Social diffusion model of information, misinformation, and disinformation

Milieux

Information does not form in a vacuum. Our model seeks inclusivity and context-awareness. Social, cultural, and historical aspects may influence how information, misinformation, disinformation, cues to credibility, and cues to deception are perceived and used. For example, the misinformation and disinformation diffusing throughout Europe about Germany’s rising economic influence may stem from Germany’s history. This example illustrates how information can be perceived as either misinformation or disinformation, depending on the social, cultural, and historical contexts. In this model, information, misinformation, and disinformation are socially-, culturally-, and historically-mediated.

Diffusion

Given these elements, personal and professional social networks involving positive, negative and latent ties of varying strengths, are leveraged to diffuse information, misinformation, and disinformation over time. Even if they may not believe such information themselves, people (and governments and businesses) share information, and they may not recognize it as inaccurate or deceptive. Naturally, as information diffuses, cues to inaccuracy or deception may change, disappear, or emerge. Diffusion may be rapid, as in an emergency situation (e.g., an earthquake) or a political mobilization (e.g., #TahrirSquare, #ows); it may adopt a leisurely pace (perhaps because it is low-impact or inconsequential); or it may take a much longer time to diffuse, perhaps due to variabilities such as relevance, value, etc. Information, misinformation, and disinformation also diffuse across geographies, as they travel through social groups across the globe. Social media technologies, such as Twitter and Facebook, have made the diffusion of information, misinformation, and disinformation easier and faster.

Unknowns

Information, misinformation, and disinformation are diffused by people, governments, and businesses. The intents behind such diffusion, however, are unknown because they cannot be known. Human intentionality is typically vague and mercurial; it is difficult to know - with any level of certainty - the precise intent of another human being at any given moment. Of course, the presence of intent in communication cannot be denied. But even if asked about their intents, people may be unwilling to express their true intents, unable to recall their original intents, or unable to articulate them. The diffusion of inaccurate and deceptive information may be motivated by benevolent or antagonistic intents, but the nature or degree of the intent cannot be determined solely by behaviour or discourse. Information, misinformation, and disinformation may be diffused without being believed by the speaker. Even when a statement of belief is expressed, the intents behind that statement are unclear. A speaker may diffuse such information as an expression of identity or of relationship among the community, or as a result of perceived social pressure. Some information, misinformation, and disinformation may be believed sometimes by some people, governments, and businesses. But the reasons for belief are as unknowable as the nature of human intentionality.

Deception

After the receivers’ and diffusers’ unknowns in the model depiction, the process usually produces information, misinformation, and/or disinformation. In the production of disinformation, deceivers attempt to deceive. They can only attempt because even when intent to deceive is present, deception does not guarantee success in the accomplishment of goals, regardless of whether they are personally-motivated or socially-motivated. Rubin (2010) cited Walczyk et al. (2008), who argued that deception allows the accomplishment of goals both malevolent (such as suggesting that a co-worker has been embezzling money) and benevolent (such as lying about a surprise party for a friend). People often disinform in the service of socially acceptable expectations, such as the performance of community membership, adherence to cultural values, avoidance of an argument, etc. In these cases, it seems inappropriate to describe people’s motivations as antagonistic, yet neither do they seem obviously benevolent.

Such a variety of goals illustrates why deception is so complex, and why the nature of intent is often unknowable. For example, if deception is occasionally socially acceptable, then the idea of intent as either antagonistic or benevolent becomes a false dichotomy, which calls into question whether these are the appropriate views on the topic of intent. Therefore, it may be best to view cues to deception as context-dependent or relationship-dependent, such that there might be different sets of cues for different contexts or relationships.

Judgement

Regardless of whether diffusers are attempting to deceive, receivers make judgements about their believability using cues to credibility and cues to deception. Deceivers use cues to credibility to achieve deception. For example, phishing e-mails (Phishing 2013) purport to be from legitimate companies (e.g., eBay, PayPal, Facebook, Twitter, etc.) in order to obtain personal information. These e-mails often use a believable domain name (e.g., custserv@paypal.com), the company’s logo and font, and the company’s physical mailing address location as cues to credibility. As a defense against such deception, receivers may rely on cues to deception. For example, phishing e-mails may include information in the header of the e-mail that may indicate its origination, egregiously incorrect spelling and grammar, and external hyperlinks that, when a mouse cursor rolls over them, reveal a suspicious or bogus URL (e.g., hottgirlz.com). But, as described earlier, much of the interpretation of misinformation and disinformation can be influenced by social, historical, and cultural factors.

Thus while cues to credibility may be used by deceivers to deceive and cues to deception may be used by receivers to defend against deception, neither set may be successful in deception or defense. Much depends on the degree to which receivers may suspect misinformation or disinformation, or the degree to which certain aspects of messages strike them as suspicious, bogus, or benign. Significantly, this section of the model represents the convergence of the information literacy activities occurring constantly and simultaneously throughout the entire course of the model.

Use

As receivers use their information literacy skills to make judgements about information, misinformation, and disinformation, this information is used by people, governments, and businesses to make decisions and take action. When recognized as such, information, misinformation, and disinformation can be valuable to people, governments, and businesses. Correcting inaccurate information can present opportunities for meaningful engagement, public awareness and education, and commercial information service provision. People can use disinformation to harness influence over others (e.g., insinuating knowledge of personal information). Governments can use disinformation to exercise control over a populace. Businesses can use disinformation to maintain or repair their own reputation or to damage the reputation of a competitor. These examples suggest only a few of the ways that disinformation can be used. Misinformation and disinformation, if recognized, can also be sold or traded, diffused out through social groups, used to attempt to deceive, etc. Misinformation and disinformation may be used immediately in a situation, soon after they are received, or they may be kept dormant for later use or verification.

Changes in context may influence how or whether misinformation or disinformation is used. Because the world can change so rapidly, the information that receivers may have judged as misinformation or disinformation can quickly become information, misinformation, or disinformation. For example, after the earthquake in Japan in March 2011, a Twitter user tweeted (Twitter... 2011) that his friend needed to be rescued, and asked others to retweet the message. The friend was rescued the following day, but people continued to retweet. This example illustrates how true, accurate information can become misinformation due to a change in context.

Discussion: implications for information literacy

Information, misinformation, and disinformation develop in social, cultural, and historical milieux. From this, they develop over time and are diffused by diffusers. The intents and beliefs of diffusers and receivers are unknown, however. Diffusers may attempt deception, and, in response, receivers will exercise judgement by looking for cues to credibility and to deception. Finally, both diffusers and receivers may use information, misinformation, and disinformation. This process highlights the need for critical analysis of diffusion and information sharing, and of cues to credibility and their usage. These are elements of information literacy, activities in which people engage as they ferret out the nuances of misinformation and disinformation. In this section we discuss the implications of our proposed model for information literacy.

Since its 1974 coining by Zurkowski, information literacy has been a core service of libraries with definitions and competencies adopted by organizations worldwide (AASL/AECT 1998; ACRL 2008; Garner 2006), several guides (Lau 2006; Sayers 2006) and models, e.g., radical change (Dresang 2005), Big6 (Lowe and Eisenberg 2005), seven faces (Bruce 1997), seven pillars (SCONUL 2011), the empowering eight (Wijetunge and Alahakoon 2005), and the information search process (Kuhlthau 2005) for basing services in K-12 (primary and secondary) schools, colleges and the workplace. At its essence, information literacy refers to being able 'to identify, locate, evaluate, and effectively use information' (Garner 2006).

Despite observations on the growing importance of context (corporeal and social sources) in understanding and promoting information literacy (see Courtright 2008 for a good review), the prevailing paradigm focuses on individual users engaged in learning about tools and problem-solving processes on their own behalf, sometimes for life-long learning. This orientation reflects Tuominen, Savolainen and Talja’s observation that: 'Information Literacy thus far has been more of a practical and strategic concept used by librarians and information specialists rather than the focus of empirical research' (2005: 330). Regarding public libraries, Lloyd and Williamson (2008: 7) concluded, 'information literacy research is still in its infancy, with very little research pertaining to community perspectives of information literacy being reported' adding, 'in community and cross-cultural settings, IL [information literacy] may also take on a different shape that cannot be accommodated by library-driven frameworks and standards' (p. 8). Harding (2008) and Walter (2007) also lament the lack of direction for public library participation in information literacy delivery, citing a higher onus of expectation and responsibility.

These calls in the literature for a wider perspective in approaching information literacy, understanding community perspectives, and engaging greater empirical understanding support our observation that conceptual and empirical investigations must include misinformation and disinformation to reflect the complexities of modern life. Two specific areas in which misinformation and disinformation may be regarded within information literacy include diffusion and sharing, and cues to credibility, discussed as follows.

Diffusion and sharing

As discussed, people enjoy sharing information, or are naturally inclined to do so, especially when it piques their interest, or if they think a friend would benefit (Coward and Fisher 2010; Shibutani 1955). In this way, people diffuse information through networks over time. Not all networks, however, are connected. Burt (1992, 2004) uses the term 'structural holes' to describe disconnections, the empty space, between networks. To fill these holes and link sets of networks, information brokers serve a crucial role in the diffusion of information. Because information brokers connect sets of networks, they are enormously powerful, and may easily diffuse misinformation and disinformation with limited consequence to their reputation within a network. Further, members of disparate networks may be unable to verify information received from their network’s broker because that broker may be the sole contact between networks. Again, the ambiguity of human intentionality clouds the motivations behind information brokers diffusing a piece of information between otherwise disconnected networks. As information diffuses through networks, it can reach a saturation point, such that most or all of the people in the network are aware of the information, misinformation, or disinformation. They may not, however, recognize misinformation or disinformation as such. In her work on the diffusion of employment-related information among low-income workers, Chatman (1986: 384) found that, 'information has limited utility when diffused'. Here, Chatman described a complete saturation of information in a (relatively) small network with few connections to other networks. In terms of misinformation and disinformation, however, diffusion saturation may not reduce utility. For example, misinformation may be incomplete or inaccurate, and may become useful, even after complete saturation, when members of a network find other information to complete or correct the misinformation. When disinformation, for example, has diffused throughout a network, an outsider may use that disinformation to deceive some members of that network.
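
The role of a broker spanning a structural hole can be illustrated with a toy simulation. This is a minimal sketch under strong assumptions that are not part of the model itself: every person passes a message to all of their contacts in the next round, the two three-person networks and the single `broker` are invented for illustration, and the function names `undirected` and `diffuse` are hypothetical.

```python
from collections import deque
from typing import Dict, Iterable, List, Set, Tuple


def undirected(edges: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Build an adjacency map in which every tie runs in both directions."""
    graph: Dict[str, Set[str]] = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def diffuse(graph: Dict[str, Set[str]], seed: str) -> Dict[str, int]:
    """Return, for each person reached, the round in which the message arrives."""
    reached = {seed: 0}
    queue = deque([seed])
    while queue:
        person = queue.popleft()
        for contact in graph.get(person, ()):
            if contact not in reached:
                reached[contact] = reached[person] + 1
                queue.append(contact)
    return reached


# Two small, internally connected networks (hypothetical members).
network_a: List[Tuple[str, str]] = [("a1", "a2"), ("a2", "a3"), ("a1", "a3")]
network_b: List[Tuple[str, str]] = [("b1", "b2"), ("b2", "b3"), ("b1", "b3")]
# The broker is the sole contact between the two networks.
broker_ties: List[Tuple[str, str]] = [("a3", "broker"), ("broker", "b1")]

with_broker = diffuse(undirected(network_a + network_b + broker_ties), "a1")
without_broker = diffuse(undirected(network_a + network_b), "a1")
```

With the broker present, a message seeded at `a1` saturates both networks; without the broker, it never leaves the first network. Because every path into the second network passes through `broker`, its members have no independent route by which to verify what the broker tells them.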

Cues to credibility

In networks, misinformation and disinformation may spread easily because cues to deception and cues to credibility may shift in their meaning, relevance, and context. A cue to credibility (or deception) in one network may function as a cue to deception (or credibility) in another network. For example, wearing a business suit among business executives may provide credibility in that network, but may cue deception among artists. Information users look for cues to credibility when making judgements about information. In these situations, cues to credibility are necessary tools, both for users and for creators of information. Cues to credibility communicate legitimacy and trustworthiness to an audience. Deceivers, however, also rely on cues to credibility - often for the same goals as non-deceivers (e.g., trust, believability, etc.). For example, deceptive political advertising may feature actors dressed as firefighters and police officers because these people have influence and respect in the community. The advertisement, in this case, leverages the community’s esteem for these people as a cue to credibility. Because cues to credibility can be used in deceptive ways, their utility becomes questionable. Perhaps common cues to credibility have become too easily malleable. For example, ordinary consumers may not know whether an item on eBay is authentic or fake when sellers use common cues to credibility (e.g., official logos, photos, etc.). Deceivers also often reveal, or leave behind, cues to deception.

Cues to deception can include physical cues (e.g., dilated pupils, elevated heart rate, etc.; DePaulo et al. 2003), verbal cues (often influenced by the type of relationship; Buller and Burgoon 1996), and textual cues (e.g., excessive quantity, reduced complexity; Zhou et al. 2004). Online environments (e.g., Facebook, Twitter, World of Warcraft, etc.) may offer additional or alternate sets of cues; future research may help uncover such sets. While cues to credibility can be used by both deceivers and non-deceivers to influence receivers of information, we argue that cues to deception may be more useful to ordinary consumers because cues to deception generally are not used by deceivers to convince others. Cues to deception can be perceived by information receivers and used as a defense against such deception, and to make judgements about the likelihood of deception. Nonetheless, because consumers may use a combination of cues to credibility and cues to deception to form judgements about information, information literacy efforts should include ways of recognizing misinformation and disinformation.

Conclusions

Misinforming and disinforming are forms of information behaviour, specifically regarding information literacy. Misinformation and disinformation extend the concept of information by their informativeness. A chart (Table 1) summarizes the features of information, misinformation, and disinformation. A model (Figure 1) illustrates how information, misinformation, and disinformation evolve through social diffusion processes. In this model, information, misinformation, and disinformation are generated in various cultural, historical, and social milieux, and through processes of social diffusion, people come to make judgements (and deceivers may seek to deceive) influenced by their degree of suspicion, and either use or do not use information, misinformation, and disinformation. Because misinforming and disinforming are types of information behaviour, their links to information literacy are numerous, including diffusion and sharing and cues to credibility.

In this paper, we are not trying to determine or describe how people make judgements about information or about cues to deception or credibility. We are, however, suggesting that these topics demand further attention from information science. If information science is to understand the nature of trustworthiness, credibility, cognitive authority, and related topics, then misinformation, disinformation, and cues to deception must be included in the research agenda. We anticipate that this model will open additional avenues for further research.

Additionally, the truth or falsity of information cannot be determined ‘objectively’. We hope, however, that information literacy efforts are sufficiently effective, such that individuals and teams can make situational decisions about the degree of truth or falsity of information. That is, regardless of whether truth or falsity can or cannot be determined ‘objectively’, people still need information and make decisions about it based on their subjective determinations of truth or falsity.

Misinformation and disinformation can have serious consequences for governments, people, businesses, information professionals, and user experience designers, as well as other groups. Misinformation is problematic largely because it can create confusion and mistrust among receivers, and can make information difficult to use. For example, receivers may feel uncertain about the information, and therefore uncertain about whether they can take action or make a decision. If receivers recognize the errors, they may seek another information source, repeat their previous work, or compensate in some other way. For librarians, information architects, and other information professionals, misinformation in metadata may cause web pages, for example, to be incorrectly indexed and thus absent from appropriate search results. User experience designers should understand that when confusing or even conflicting information is presented to users, it can ‘break’ the user experience by disrupting the flow of use. Misinformation can cause credibility problems as well. When governments or companies provide erroneous information, for example, receivers may question whether the government or company is a legitimate and authoritative source. Receivers may also begin to suspect that information that appears to be inaccurate is actually misleading, that is, disinformation. Misinformation is, however, not always easily detected. An exploration of how people determine and use cues to misinformation can illuminate methods of detection. But the difficulty in detection is only one aspect of misinformation.

Misinformation offers opportunities for users to leverage their experiences to improve available information. For example, when e-government initiatives use crowd-sourcing, misinformation can be corrected in datasets, bus schedules, city council meeting notes, voters’ guides, and other information produced and disseminated by governments at all levels. When the public is invited to improve or correct misinformation about a product, company, or service, such as errors in books or users’ manuals, misinformation can offer opportunities for engagement and create lasting and meaningful experiences for users and consumers. Because misinformation may result from accidental errors, experts, such as medical doctors, scientists, and other professionals, can seize an opportunity to educate information users. Because misinformation can be difficult to detect, governments, companies, and professionals can harness misinformation as opportunities for crowd-sourcing corrections, for meaningful engagement, and for education.

Disinformation can have significant consequences for individuals, governments, and companies. When individuals believe deceptive information, it can influence their actions and decisions. For example, when her accountant lies to Kelly by telling her she owes more in taxes than she actually does, Kelly may decide to seek out a second opinion, or give her accountant additional money. In this example, her accountant disinforms Kelly by leading her to believe something inaccurate about her situation. Governments may also be susceptible to disinformation. For example, a gang running drugs may try to deceive law enforcement about their location by discussing a false location over the phone (knowing it has been tapped). In this case, the gang disinforms law enforcement by leading them to believe a false situation. Disinformation can cause negative effects for businesses as well. A company’s reputation may be damaged, perhaps due to a competitor, market speculators, or industry-wide struggles. For example, a rumor about a possible bank failure may cause a run on the bank, as happened in December 2011 in Latvia (Swedbank... 2011). In a state of information uncertainty, people queued for hours to withdraw their funds because they did not trust the bank.

Disinformation also provides business opportunities. Online reputation management firms, such as Metal Rabbit Media and Reputation.com, rely on disinformation to serve their clients. In this business, people try to control the information about them available online, particularly via search engines such as Google. Some people find the task sufficiently daunting to hire professional firms to manage their online reputation (Bilton 2011). In the service of their clients, these companies can provide websites, portfolios, Twitter streams, blogs, Flickr accounts, Facebook pages, etc. The extent of the content depends on the level of service for which the client pays. Reputation management firms can also leverage search engine optimization techniques to ensure that their content appears towards the top of a search result list. These firms’ services exemplify disinformation because the information they provide is often true, accurate, and current - yet deceptive. It is intended to show the client in a different light. By studying highly nuanced cues to disinformation, these firms can improve their services and leave smaller, less noticeable cues. The field of marketing also offers numerous opportunities to harness misinformation and disinformation. Guerrilla, undercover, and viral marketing may use deceptive techniques (e.g., evasion, exclusion, vagueness) to market products and services to often unsuspecting consumers. Coolhunting is a type of marketing dependent on rumor tracking, and is strongly subject to misinformation and disinformation because the aesthetic of cool often requires secrecy and because rumors may be unreliable information sources.

In future work, we are adopting a naturalistic approach to observe and capture the richness and dynamism of misinforming and disinforming as forms of information behaviour and literacy in a real-life setting, such as 3D, immersive virtual worlds (e.g., Second Life, World of Warcraft, Star Wars: The Old Republic). These environments present the challenges of computer-mediated communication, such as a lack of physical cues, but may offer opportunities for users to use other cues to disambiguate between misinformation and disinformation, and thus serve as excellent candidates for the exploration of the concepts discussed in this paper. While the work on textual cues in e-mail exchanges (Zhou et al. 2004) begins to address the lack of physical or verbal cues, work in 3D, immersive worlds may provide additional or alternate sets of cues. These new sets may prove useful as immersive environments become increasingly common in classrooms and boardrooms. For example, as players develop their skills, there is some evidence to suggest that they develop “avatar literacy.” That is, they can “read” an avatar, visually, and learn a great deal of information. Teamwork, however, plays a key role in many online games. Players self-organize into distributed, often asynchronous teams. Recognizing the influence of team dynamics, a few questions arise: How might such teams collaboratively distinguish cues to misinformation from cues to disinformation? How might a team agree, to some extent, on what constitutes a “cue”? How might they respond to or use such cues? Much of the misinformation, disinformation, and cues literature has focused on individual deceivers or pairs of conversants. By focusing on teams, we can better understand the core elements of misinformation and disinformation: relationships and context.

About the authors

Natascha Karlova is a Ph.D. Candidate in Information Science at the University of Washington Information School in Seattle, WA, USA. For her dissertation research, she is studying how teams of online game players - as a group - identify cues to misinformation (mistaken information) and disinformation (deceptive information). She can be contacted at nkarlova@uw.edu

Karen Fisher, Ph.D. is a Professor at the University of Washington Information School in Seattle, WA, USA. You can find out more about her research here: http://ibec.ischool.uw.edu/ and contact her at fisher@uw.edu