Content validation for level of use of feature rich systems: a Delphi study of electronic medical records systems

Norman Shaw

Ted Rogers School of Management, Ryerson University, 350 Victoria Street, Toronto, ON, M5B 2K3, Canada

Sharm Manwani

Henley Business School, University of Reading, Greenlands, Henley-on-Thames, Oxfordshire, RG9 3AU, UK

Introduction

Computers link companies and individuals together for many social and business transactions (Carr 2003) with worldwide spending on IT in 2011 estimated at $3.5 trillion (Gordon et al. 2011). However, because the growth of feature rich computer systems has outpaced individuals' abilities to take advantage of built-in functionality (Hsieh and Wang 2007), there has not been a corresponding increase in productivity (Turedi and Zhu 2012; Tambe et al. 2011; Brynjolfsson 1993).

To gain a deeper understanding of factors that influence use, theories of acceptance (Davis 1989; Venkatesh and Davis 2000) and post adoption (Bhattacherjee et al. 2008) have been proposed. Their dependent variable is system use, which, in many studies, is measured in terms of some combination of time and frequency (Venkatesh et al. 2003; Legris et al. 2003). The implication is that those who spend more time on the system make better use of it. But for feature rich systems, time on the system is an inadequate measure. Less time may be spent by more experienced users who achieve their objectives by utilising more advanced features (Jasperson et al. 2005). There is a need, therefore, to reconceptualise the dependent variable when investigating feature rich systems.
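To make the contrast concrete, the following Python sketch compares a lean time-based measure with a simple feature-based one for two hypothetical users. The user names, minutes and feature labels are illustrative assumptions, not data from this study, and counting distinct features is only one possible richer measure.

```python
from dataclasses import dataclass, field


@dataclass
class UserLog:
    """Hypothetical weekly usage record for one user (illustrative only)."""
    name: str
    minutes_per_week: float
    features_used: set[str] = field(default_factory=set)


def lean_measure(user: UserLog) -> float:
    """Omnibus 'time on system' measure."""
    return user.minutes_per_week


def feature_measure(user: UserLog) -> int:
    """One possible richer measure: number of distinct features deployed."""
    return len(user.features_used)


novice = UserLog("novice", 600, {"notes"})
expert = UserLog("expert", 420, {"notes", "reminders", "templates"})

# The lean measure ranks the novice higher; the feature measure reverses the ranking.
print(lean_measure(novice) > lean_measure(expert))        # True
print(feature_measure(expert) > feature_measure(novice))  # True
```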

In this paper, we adopt Burton-Jones and Straub's suggestion (2006) of replacing the lean measure of time with a richer measure which takes into account how the user deploys the features of the IT system under study. We define the dependent variable as level of use (Zmud and Apple 1992). In their stage model, Cooper and Zmud (1990) describe how the initial level of use is the adoption of basic features. Once these features become routine, users have the confidence to move to the infusion stage where they deploy more advanced capabilities. Levels of use have been described as initial use, extended use or exploratory use (Saeed and Abdinnour-Helm 2008) or alternatively as shallow, routine or extended (Hsieh and Wang 2007). We adopt the terminology of basic, intermediate and advanced. We then propose that, when investigating feature rich systems, the dependent variable should be level of use, specified as a formative construct and measured as a function of core features deployed (Griffith 1999).

Measurement of the construct requires an instrument and the first step in instrument development is content validity (Cronbach and Meehl 1955; Straub 1989). The content is validated from the researcher's experience and a review of the literature (Straub 1989; Lin et al. 2008). In addition, expert opinions can be gathered via the Delphi process (Delbecq et al. 1975), which seeks consensus through a number of rounds conducted asynchronously. The indicators for level of use are context sensitive and we apply the methodology in the context of the post adoption use of electronic medical records (EMR) by primary care physicians in Ontario, Canada. EMRs are feature rich systems. They have basic features such as recording notes, intermediate features such as setting reminders and advanced functions such as creating templates (Blumenthal and Tavenner 2010). Their adoption and increased usage are recognised by practitioners and governments as a means of delivering health care more efficiently (Schoen et al. 2009).

This paper is organised as follows: the next section is a review of the literature about the dependent variable, system use; the third section describes the context of the study with an additional review of the literature relevant to the context; the fourth section outlines the research methodology and how it was applied to EMR use; the fifth section provides more detail about the consensus seeking process; the sixth section summarises the results from the empirical study; finally, we discuss the results and their limitations and offer suggestions for future research.

Literature review

Reconceptualising the dependent variable

In the technology acceptance model, perceived usefulness and perceived ease of use are the key constructs mediating an individual's intention to use a system (Davis 1989). Because the model uses just two predicting variables, it is easily generalised and has been applied in many different fields of study, such as e-mail communication (Szajna 1996), telemedicine (Hu et al. 1999), systems development tools (Polancic et al. 2010), internet banking (Lai et al. 2010) and health care (Holden and Karsh 2010). The focus of these and other studies is the adoption and initial acceptance of the system as a whole (King and He 2006; Turner et al. 2010), where the dependent variable is defined as system use, commonly measured as time on the system. This is not sufficient for research on feature rich systems, which requires a feature-level view in which post adoptive behaviour is defined as the 'myriad feature decisions' made by individuals to accomplish their regular activities (Jasperson et al. 2005).

After the system has been adopted, individuals make decisions at the feature level. Immediately after acceptance, they select certain features for initial adoption, and once these are in use, they decide whether to continue using them. According to the technology acceptance model, individuals will adopt features if they deem them to be useful and easy to use. They will be influenced by their past experience of using the system. Have they found the features easy to use? Has past feature adoption met expectations of perceived usefulness? By evaluating usage at a feature level, rather than a system level, the model and its extensions may be applied to explain why some users progress to a more advanced stage of system use. The few studies (Kim and Malhotra 2005; Lippert 2007) that have attempted this have been limited by their definition of the dependent variable.

Many studies using the model have measured the dependent variable in terms of frequency and duration (Adams et al. 1992; Agarwal and Prasad 1997; Igbaria and Iivari 1995; Lai et al. 2010; Venkatesh and Bala 2008; Venkatesh et al. 2002). Such measurements assume that more time on the system represents greater acceptance and more information system success (Seddon 1997). But Burton-Jones and Straub (2006) suggested that such an omnibus measure, which captures only one dimension such as time on the system, is 'lean' and is not rich enough to capture feature usage by individuals.

Features are the tools designed by the software vendors that enable individuals to complete tasks (Harrison and Datta 2007). Users commence by learning the new capabilities offered by the system (Agarwal et al. 1997). With repetition, they become familiar with the standard features and their deployment becomes routine (Gefen 2003; Limayem and Cheung 2008). As users become more comfortable with the system, some of them may advance to the next stage where they explore additional capabilities (Burton-Jones and Straub 2003; Straub and Burton-Jones 2007; Burton-Jones and Straub 2006) and create functionality 'that goes beyond typical usage leading to better results and returns' (Hsieh and Wang 2007: 217). These features can be divided into core versus tangential (Griffith 1999), where the former are the features that define the 'spirit' of the system (DeSanctis and Poole 1994) and the latter are non-critical features whose use is optional (Griffith 1999). Practitioners want systems to deliver the productivity that they promise, but in many cases the core features are not fully utilised (Hsieh and Wang 2007).

Reconceptualising the dependent variable as core feature usage will enable theories of post adoption use to explore why some users are more advanced in their deployment of core features than others.

The dependent variable: level of use

Recognising that duration or frequency do not capture feature usage, Venkatesh et al. (2008) suggested that the concept of intensity should be added. They specified it as a simple reflective variable in answer to 'how do you consider the extent of your current system use?' (Venkatesh et al. 2008). Appropriation of the technology is a richer measure: appropriation refers to the way that users evaluate, adopt, adapt and integrate a technology into their everyday practices (Chin et al. 1997; Carroll et al. 2002; Beaudry and Pinsonneault 2005). In the first stage of appropriation, the basic features of the system are used to replace existing tasks. The second stage is when these features become routine due to experience with the system and resulting organisational structural changes. In the third stage, users learn new processes in a recursive and reflexive manner such that the use of the system affects the surrounding structure (Carroll et al. 2003). IT systems aim to deliver benefits according to the features built in by the system designers, but the benefits delivered depend upon the appropriation by the user (Orlikowski 1992). The degree to which features are adopted according to the designer's intent is a measure of their appropriation. Scales for measuring appropriation have been developed (Chin et al. 1997) where users are asked to assess how their use of features compares with the intentions of the system designer. However, this is a reflective measure with no external reference to the actual features within the system.

More objective measures of system use are possible when the capabilities of the system are taken into account. Zmud and Apple (1992) investigated the discrete 'levels of use' of scanners in supermarkets, where infusion of the scanners was marked by deeper and more comprehensive usage. The infusion level of scanners was scored on a scale of 1 to 3, where 3 represented the use of the most advanced capabilities of inventory management. Other studies have grouped feature usage into levels, where there is a gradation from simple use by a novice user to more extended use by an experienced user. See Table 1: Levels of Post-Adoption Use.

| Authors | Levels of post-adoption use | Study | Information technology artefact |
|---|---|---|---|
| Cooper & Zmud (1990) | 1. Acceptance 2. Routinization 3. Infusion | Cross-sectional field survey with 62 participating companies | MRP (Material Requirements Planning) |
| Zmud & Apple (1992) | 1. Inter-department 2. Intra-department 3. External organisations | Quantitative study of use of electronic scanners in US retail chains from 1983 to 1984 | Electronic scanners in supermarkets |
| Saga & Zmud (1994) | 1. Acceptance 2. Routinization 3. Infusion | Review of literature on post-adoption use | Different systems |
| Auer (1998) | 1. Pre-novice 2. Routine 3. Advanced | Case study of 50 participants in a large Finnish company | Spreadsheet |
| Nambisan & Wang (1999) | 1. Information access 2. Work collaboration 3. Core business transactions | Review of literature on use of web technology | Web technology adoptions |
| Eder & Igbaria (2001) | 1. Low 2. Medium 3. High | Cross-sectional field survey with 422 respondents | Intranet |
| Carroll, Howard, Peck & Murphy (2002) | 1. Non-appropriation: decision to adopt/non-adopt 2. Dis-appropriation: exploration and selecting features to use 3. Appropriation: using selected features routinely | Qualitative study of perceptions and use of mobile phones by 16 to 22 year olds | Mobile phones |
| Jasperson, Carter & Zmud (2005) | 1. Feature adoption 2. Routine use 3. Extension | Development of research model from literature | General use of information systems in organisations |
| Hsieh & Wang (2007) | 1. Simple and shallow use 2. Routine: no longer 'new' 3. Extended: more functions | Questionnaire sent to seventy-nine users of enterprise resource planning in three firms in China | ERP |
| Saeed & Abdinnour-Helm (2008) | 1. Initial use 2. Extended use 3. Exploratory use | Questionnaire survey of 1,032 students at a university in the US Midwest | Student information system |

Although there is no common terminology, level of use represents the gradation from early shallow use of a few features to experienced deeper use of many features (Schwarz and Chin 2007). To assist future research into post adoption usage, we propose that the construct, level of use, should be specified as formative and defined as a composite value of the features that the individual has deployed. Our assumption is that the choice of which features to adopt is voluntary. Even when an organisation mandates system usage, it is not feasible to mandate all the features that should be used and how they should be extended. The mandate refers to the basic use of the system, which still allows individuals to select voluntarily the additional features they will adopt and extend (Bagayogo et al. 2010; Wang and Butler 2006; Brown et al. 2002).

Level of use as a formative construct

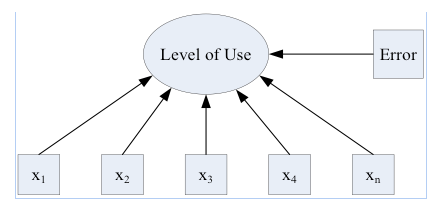

Since mis-specification can lead to incorrect theoretical validation, it is important to show that this rich construct 'level of use' is formative rather than reflective (Jarvis et al. 2003; Bollen 2007; Petter et al. 2007). As a first criterion, we look at the direction of causality. Because we have defined level of use as a composite of a number of indicators, the flow is from the indicator to the construct. Any change to the value of an indicator will change the value of the construct: for example, in Figure 1, if x1 to xn represent features, the value associated with level of use will depend upon which features are being used. This direction of causality is consistent with a formative construct (Jarvis et al. 2003).

Source: Diamantopoulos & Winklhofer (2001)

Another criterion asks if the indicators are interchangeable. Again, by definition, level of use is a composite of the feature usage. If any of these features are dropped, the value and the meaning of the construct will change (MacKenzie et al. 2005). An individual who is using features x1 and x2 has a different level of use than an individual who is only using x1. The indicators are not interchangeable which is consistent with a formative construct (Jarvis et al. 2003).
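The two criteria discussed so far (causality flowing from indicators to construct, and indicators that are not interchangeable) can be illustrated with a minimal Python sketch of a formative composite. The indicator names x1 to x5 follow Figure 1; the equal weights are an assumption made for illustration, since in practice formative weights would be estimated.

```python
# Hypothetical binary indicators x1..x5 (1 = feature used, 0 = not used).
# Equal weights are an illustrative assumption, not estimated values.
WEIGHTS = {"x1": 1.0, "x2": 1.0, "x3": 1.0, "x4": 1.0, "x5": 1.0}


def level_of_use(indicators: dict[str, int], weights: dict[str, float] = WEIGHTS) -> float:
    """Formative composite: the construct is computed from its indicators,
    so changing any indicator changes the construct's value."""
    return sum(w * indicators.get(name, 0) for name, w in weights.items())


user_a = {"x1": 1, "x2": 1}  # uses features x1 and x2
user_b = {"x1": 1}           # uses feature x1 only

print(level_of_use(user_a), level_of_use(user_b))  # 2.0 1.0

# Dropping indicator x2 changes the meaning of the construct:
# the two users can no longer be told apart.
reduced = {name: w for name, w in WEIGHTS.items() if name != "x2"}
print(level_of_use(user_a, reduced) == level_of_use(user_b, reduced))  # True
```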

A third criterion looks at the covariance of the indicators. For reflective constructs, the indicators will covary, but for the construct 'level of use', each indicator is an independent measure of the use of a specific feature and therefore any covariance with any other feature should be investigated further to determine if one of the indicators is superfluous. If, for example, x1 and x5 do covary significantly, then one of them could be dropped. Again, this is consistent with a formative construct.
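The covariance check described above could be run as a simple screening step. The Python sketch below computes pairwise Pearson correlations between binary feature-usage indicators across a handful of hypothetical physicians and flags pairs whose absolute correlation meets a threshold. The data and the 0.8 cut-off are assumptions; a formal significance test or a variance inflation factor analysis would be reasonable alternatives.

```python
from itertools import combinations
from math import isnan, sqrt


def pearson(a: list[int], b: list[int]) -> float:
    """Pearson correlation; NaN when either indicator is constant."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return float("nan")
    return cov / sqrt(var_a * var_b)


def redundant_pairs(data: dict[str, list[int]], threshold: float = 0.8):
    """Flag indicator pairs whose |r| meets the (assumed) threshold."""
    flagged = []
    for (name_a, col_a), (name_b, col_b) in combinations(data.items(), 2):
        r = pearson(col_a, col_b)
        if not isnan(r) and abs(r) >= threshold:
            flagged.append((name_a, name_b, round(r, 2)))
    return flagged


# Hypothetical usage data: one entry per physician (1 = feature used).
usage = {
    "x1": [1, 1, 1, 1, 0, 0],
    "x2": [1, 0, 1, 0, 1, 0],
    "x5": [1, 1, 1, 1, 0, 0],
}
print(redundant_pairs(usage))  # [('x1', 'x5', 1.0)]
```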

Any theory testing depends upon measurement of the latent constructs within the model, and the correct methodological sequence is to validate measurement instruments before testing the selected structural model (Straub 1989). Through their indicators, constructs must be shown to measure reliably what is intended. Convergent and discriminant statistical tests are not relevant for formative constructs, and therefore content validity becomes the essential first step in developing an instrument for a formative construct (Straub et al. 2004). Context is important because, for the dependent variable level of use, the indicators are the deployment of core features that are available within the specific IT system under study. We next describe the empirical setting of our study.

The study

The dependent variable has been defined as level of use. As a formative construct, it can be represented as an index, which is the composite of features that have been adopted by the user. To validate the content of this construct, the system under study must be feature rich, with a gradation of features from basic to advanced. The Electronic Medical Record (EMR) is such a system. Its basic component is the recording of patient notes. Once physicians have routinised this, they could add features such as setting reminders for a patient follow-up. Subsequently, because system designers have included the ability to extend features through customisation, some physicians may design their own templates, which, for example, guide them through a specialised patient examination. Furthermore, increased understanding of electronic medical records will assist in the delivery of efficient healthcare, which is a priority for governments (Schoen et al. 2009; Obama 2009; Flaherty 2009).
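As a minimal sketch of such an index, assuming the three example features from this paragraph and an assumed rule that a physician's level is the highest tier in which at least one feature is deployed, the Python below returns both per-tier counts and a basic, intermediate or advanced label. The feature identifiers and the classification rule are hypothetical; they are not the instrument that the Delphi panel produced.

```python
from enum import IntEnum


class Level(IntEnum):
    NONE = 0
    BASIC = 1
    INTERMEDIATE = 2
    ADVANCED = 3


# Hypothetical tiers based on the examples in the text (not the validated instrument).
EMR_FEATURE_TIER = {
    "record_patient_notes": Level.BASIC,
    "set_followup_reminder": Level.INTERMEDIATE,
    "design_custom_template": Level.ADVANCED,
}


def tier_counts(features_used: set[str]) -> dict[str, int]:
    """Index components: number of deployed features in each tier."""
    counts = {lvl.name: 0 for lvl in Level if lvl is not Level.NONE}
    for feature in features_used:
        counts[EMR_FEATURE_TIER[feature].name] += 1  # KeyError for unlisted features
    return counts


def classify(features_used: set[str]) -> Level:
    """Assumed rule: the highest tier in which any feature is deployed."""
    return max((EMR_FEATURE_TIER[f] for f in features_used), default=Level.NONE)


physician = {"record_patient_notes", "set_followup_reminder"}
print(tier_counts(physician))    # {'BASIC': 1, 'INTERMEDIATE': 1, 'ADVANCED': 0}
print(classify(physician).name)  # INTERMEDIATE
```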

In a journey through the health system, a patient may see a primary care provider, be referred to a specialist or receive treatment in a hospital. In all scenarios, physicians maintain information about their patients, recording details from each visit, such as lab results, medicines prescribed, hospital discharge notices and specialist letters. Historically, this record has been maintained in paper form in a physical folder in the doctor's office. Time is spent filing and retrieving. Data is being added and information is being accumulated, but knowledge is more difficult to extract.

Recognising that increasing efficiency and cost savings are advantages of implementing computer systems (Hillestad et al. 2005), many countries in the OECD are encouraging physicians to use EMRs (Protti 2007; Protti et al. 2009; Kondro 2007). By moving from paper to a computer system, doctors have easier access to a single source of secure patient data and the provision of healthcare becomes more efficient and safer (Flegel 2008; Ortega Egea et al. 2011). Patient history can be quickly reviewed, prescription refills can be provided at the touch of a button and patients themselves are able to follow their own key personal statistics, such as cholesterol level, through a graphical chart. In some countries, the medical record is shared across multiple providers, but in Canada, the record is only shared within the confines of the physician's practice. In this study, we adopt Protti's definition (2007) of an electronic medical record as a system in which the clinical data for an individual are location-specific, maintained by a single physician's practice and not directly accessible to other health care providers (ISO 2005).

In Canada, each province has the mandate to deliver healthcare to its population and, in 2010 for the Province of Ontario, the number of general practitioners who had adopted electronic medical records was approximately 42% (National Physician Survey 2010a). However, adoption is too broad a measure and we need to analyse these numbers further. For example, of those physicians who are using electronic medical records, all have an electronic interface to labs, while 68% are using reminder systems (National Physician Survey 2010b). Are some of these physicians at a more advanced level of use?

Factors such as prior experience and technical support (Terry et al. 2012; Shaw and Manwani 2011) influence level of use, which is the dependent variable for theories that seek to explain the differences in physicians' electronic medical records system usage. In our study, we have specified level of use as a formative construct that requires an instrument for its measurement. The first step in developing an instrument is content validation of the construct so that researchers are confident that level of use is being measured in a consistent meaningful manner (Petter et al. 2007; MacKenzie et al. 2011). Our research methodology is described in the next section.

Research methodology

An instrument must measure 'a sample of the universe in which the investigator is interested' (Cronbach and Meehl 1955: 282). There is a 'virtually infinite' variety of possible content and we limited the scope by first conducting a literature review of electronic medical records functionality. Because usage of such records is dependent upon the geographical area that defines the governance of the regulatory medical association, the results of the literature review were supplemented by an analysis of regulatory standards, and further refined with the help of professional experts (Straub 1989; Lin et al. 2008).

Literature review of electronic medical records functionality

The International Organization for Standardization (ISO) defines the primary purpose of an Electronic Medical Record (EMR) as the provision of 'a documented record of care that supports present and future care by the same or other clinicians' (ISO 2005: 15). Schoen et al. (2009) compared the use of EMRs in eleven countries. They defined the core features as: the electronic ordering of tests, electronic access to patients' test results, electronic prescribing of medication, electronic alerts for drug interaction and the electronic entry of clinical notes. From a review of the literature as well as interviews with experts, Jha et al. (2008) classified the core functions into four groups: electronic capture of clinician's notes, management of test results, computerised physician order entry and decision support.

The definition of core differs according to the standards and permissions of a particular geographical area governed by a regulatory association. These various standards are another source of data (Hayrinen et al. 2008; Jha et al. 2008). The US Department of Health and Human Services (HHS) has initiated the meaningful use programme, under which doctors receive incentive payments if they implement an electronic medical records system within a specific period of time and demonstrate meaningful use (Blumenthal and Tavenner 2010; Hsiao et al. 2011; Decker et al. 2012). With input from the public and the medical profession, the incentive programme has been divided into two tracks. The first track lists fifteen core objectives which, once accomplished, indicate that the physician has captured patient data electronically and is starting to use these data to manage patients. To give doctors some extra latitude in feature adoption, the second track is a menu of ten additional items from which doctors choose any five to implement in the period 2011-2012. See Table 2.
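The eligibility rule in this paragraph (all fifteen core objectives plus any five of the ten menu objectives) reduces to a set check. The Python below is a simplified sketch that assumes objectives are identified by their numbers in Table 2; it deliberately ignores other programme rules, such as exclusions and measure thresholds.

```python
CORE_IDS = frozenset(range(1, 16))  # core objectives 1-15 in Table 2
MENU_IDS = frozenset(range(1, 11))  # menu objectives 1-10 in Table 2
MENU_REQUIRED = 5


def meets_stage_one(core_met: set[int], menu_met: set[int]) -> bool:
    """Simplified check: every core objective plus at least five menu objectives.

    Ignores exclusions, measure thresholds and any other programme rules.
    """
    if not menu_met <= MENU_IDS:
        raise ValueError("unknown menu objective number")
    return CORE_IDS <= core_met and len(menu_met) >= MENU_REQUIRED


print(meets_stage_one(set(range(1, 16)), {1, 2, 3, 4, 5}))  # True
print(meets_stage_one(set(range(1, 15)), {1, 2, 3, 4, 5}))  # False: core 15 missing
print(meets_stage_one(set(range(1, 16)), {1, 2, 3, 4}))     # False: only four menu items
```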

| No. | Eligible professional core objectives | No. | Eligible professional menu objectives |
|---|---|---|---|
| 1 | Use computerized physician order entry for medication orders | 1 | Implement drug formulary checks |
| 2 | Implement drug-drug and drug-allergy interaction checks | 2 | Incorporate clinical laboratory test results into electronic health records system as structured data |
| 3 | Maintain an up-to-date problem list of current and active diagnoses | 3 | Generate lists of patients by specific conditions |
| 4 | Generate and transmit permissible prescriptions electronically | 4 | Send patient reminders |
| 5 | Maintain active medication list | 5 | Provide patients with timely access to their health information |
| 6 | Maintain active medication allergy list | 6 | Identify patient-specific education resources |
| 7 | Record demographic data | 7 | Medication reconciliation when receiving patient from another setting |
| 8 | Record and chart changes in specific vital signs | 8 | Provide summary care record when patient transitions to another setting |
| 9 | Record smoking status | 9 | Submit electronic immunisation data to registries or information systems |
| 10 | Report clinical quality measures to the Centers for Medicare and Medicaid Services | 10 | Submit electronic surveillance data to public health agencies |
| 11 | Implement one clinical decision support rule | | |
| 12 | Provide patients with an electronic copy of their health information | | |
| 13 | Provide clinical summaries for patients for each office visit | | |
| 14 | Exchange key clinical information | | |
| 15 | Protect electronic health information | | |

In Canada, each province is responsible for medical services to its population. In the province of Ontario, the provincial government together with the Ontario Medical Association has set up a subsidiary organisation, OntarioMD, whose role is to accelerate the diffusion and adoption of electronic medical records (OntarioMD 2009b). OntarioMD educates doctors about the benefits of electronic medical records and publishes specifications that software vendors must meet to be certified (OntarioMD 2009a). These requirements are summarised in Table 3.

| Functional specifications | Selected features |
|---|---|
| Demographic information | Maintains patient non-medical information |
| Electronic medical record | Maintains medical and surgical data, history, allergies, adverse reaction data |
| Encounter documentation | Provides forms and templates for common encounters as well as free text. Links all notes for chronological access. |
| Medication management | Creates and maintains prescription records. Prints prescriptions. Performs checks of drug-to-drug interaction. |
| Laboratory test management | Alerts the physician to new incoming laboratory reports. Presents results in a table format. |
| External document management | Supports referral letters. Imports external documents and links them to the record. |
| Cumulative patient profile | Displays cumulative patient profile: problem list, family history, allergies, medication summary, risk factors, medical alerts. |
| Reporting, query and communications requirements | Generates patient recall list for preventive care programs. Provides ad hoc queries and reports. Ability to search and report on all text fields. |
| Work queue requirements | Supports the creation of new ad hoc tasks. Ability to assign tasks to others. |
| Scheduling requirements | Patient scheduling. Integrated with billing. |
| Billing requirements | Satisfies the mandate of Ontario. |
| System access requirements | Security of access and privacy of data. |

Similar to the US meaningful use programme, OntarioMD also administers a funding programme to encourage physicians to adopt electronic medical records. Physicians receive payment once they declare that they are using their electronic medical records for the lesser of 2/3 or 600 of their active rostered patients. They must be using them for certain tasks: making patient appointments, entering encounter notes, entering problem lists, making new prescriptions/renewals, generating automated alerts and reminders and receiving electronic laboratory results directly into their electronic medical records (OntarioMD 2009c).
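The payment condition can be expressed as two checks: enough patients on the record and all six listed tasks in use. The Python sketch below assumes that 'the lesser of 2/3 or 600' means min(ceil(2n/3), 600) for n active rostered patients; the rounding up and the task identifiers are assumptions rather than OntarioMD's published wording.

```python
# Task identifiers are hypothetical labels for the six tasks listed in the text.
REQUIRED_TASKS = frozenset({
    "patient_appointments",
    "encounter_notes",
    "problem_lists",
    "prescriptions_and_renewals",
    "alerts_and_reminders",
    "electronic_lab_results",
})
PATIENT_CAP = 600


def required_patients(rostered: int) -> int:
    """Lesser of two-thirds of rostered patients (rounded up: an assumption) or 600."""
    two_thirds_rounded_up = (2 * rostered + 2) // 3  # integer ceil(2n/3)
    return min(two_thirds_rounded_up, PATIENT_CAP)


def eligible_for_payment(rostered: int, patients_on_emr: int, tasks_in_use: set[str]) -> bool:
    """Both conditions must hold: patient coverage and all required tasks."""
    return patients_on_emr >= required_patients(rostered) and REQUIRED_TASKS <= tasks_in_use


print(required_patients(600))   # 400
print(required_patients(1500))  # 600
```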

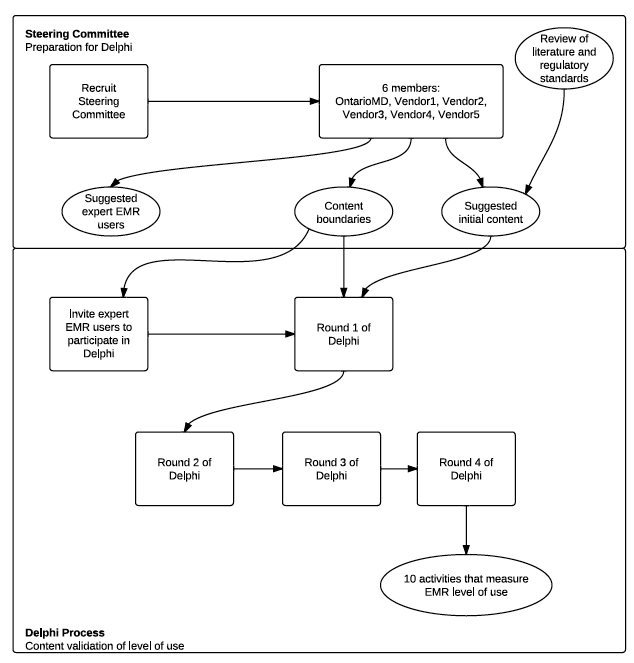

Research into the literature, including the review of standards, provides a list of meaningful features that would indicate that the electronic medical records are being used for core functionality. This list needs to be analysed further, as our definition of the dependent variable is the physician's level of use to improve patient care. When constructing the index to measure level of use, the unit of analysis is the physician, and the indicators should measure core features that improve patient care and are used by the physician rather than by the clinic as a whole. For example, tasks such as scheduling and billing may make the clinic run efficiently, but they are not included in the index because they are not representative of the physician's use of core functionality. By employing the Delphi technique, experts who are familiar with electronic medical records can assist in this next step of analysis (Zmud and Apple 1992; Saeed and Abdinnour-Helm 2008; Nakatsu and Iacovou 2009). The research steps are shown in Figure 2.
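The screening step that removes clinic-level functions can be sketched as a filter on the unit of analysis. In the Python below, the candidate list and the 'physician' or 'clinic' tags are hypothetical; in the study this judgement was made with the help of the Delphi experts rather than by a fixed rule.

```python
# Hypothetical candidate features, tagged with who performs them.
CANDIDATES = [
    ("encounter_notes", "physician"),
    ("prescriptions", "physician"),
    ("alerts_and_reminders", "physician"),
    ("scheduling", "clinic"),
    ("billing", "clinic"),
]


def index_indicators(candidates: list[tuple[str, str]]) -> list[str]:
    """Keep only features used by the physician, the unit of analysis."""
    return [feature for feature, performed_by in candidates if performed_by == "physician"]


print(index_indicators(CANDIDATES))
# ['encounter_notes', 'prescriptions', 'alerts_and_reminders']
```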

The Delphi process

To refine the list of features to be included as indicators of level of use, our methodology was a consensus seeking process with a panel that has expertise within the defined empirical context of primary care physicians who have adopted an electronic medical records system in Ontario. Simply bringing a group of experts together physically has the disadvantage that social motivation allows opinions to be swayed by dominant personalities, novel ideas are suppressed and individuals often take stances to defend their ideas (Delbecq et al. 1975). These shortcomings are overcome by the Delphi technique, in which participants do not meet. Instead, a coordinator communicates separately with each member, whose identity remains anonymous to the group (Delbecq et al. 1975), and the individual responses are consolidated and presented back for further analysis and consensus building. More ideas are generated than in an unstructured group meeting, and the technique is independent of place and time because opinions are gathered from each participant asynchronously (Van de Ven and Delbecq 1974). The Delphi method has been used in information systems research in general (Singh et al. 2009; Doke and Swanson 1995; Duggan 2003; Harrison and Datta 2007; Okoli and Pawlowski 2004), and in medical informatics in particular (van Steenkiste et al. 2002; Sibbald et al. 2009; Poon et al. 2006). Jha et al. (2009) used a similar methodology in the context of Electronic Health Records in US hospitals. In our study, we are seeking the independent opinions of expert EMR users and, through the process, we guide them to a consensus (Iden and Langeland 2010; DesRoches et al. 2008; Addison 2003; van Steenkiste et al. 2002). The result is a list of core features that can be used as indicators to measure the formative construct, level of use.

The coordinator plays a central role, ensuring the anonymity of participants, and moving the process forward in rounds which progressively converge the opinions of the group without the opportunity for personalities to shape the final outcome (Dennis 1996). To gain a wide cross section of ideas, the panel should consist of between six and twenty members, after which there is saturation where no new ideas are generated (Murphy et al. 1998; Schmidt 1997; Duboff 2007; Novakowski and Wellar 2008). Expert opinions are gathered via a questionnaire. Given the prevalence of e-mail today, it is the preferred medium of communication (Brill et al. 2006). Typically three rounds are required to reach consensus (Delbecq et al. 1975; Jones and Hunter 1995; Sibbald et al. 2009; Schmidt 1997).
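One round of this process can be sketched as follows: the coordinator pools each panellist's ratings, discards identities, returns the distribution of ratings for each feature, and carries forward the features on which the panel has not yet converged. The Python below is a sketch under stated assumptions: the 75% agreement threshold and the rating labels are hypothetical, because this section does not specify the consensus criterion used.

```python
from collections import Counter

CONSENSUS_SHARE = 0.75  # hypothetical agreement threshold


def summarise_round(ratings: dict[str, dict[str, str]]):
    """ratings[panellist_id][feature] is 'basic', 'intermediate' or 'advanced'.

    Returns anonymised feedback per feature and the features still lacking consensus.
    """
    by_feature: dict[str, list[str]] = {}
    for answers in ratings.values():  # panellist identities are dropped here
        for feature, level in answers.items():
            by_feature.setdefault(feature, []).append(level)

    feedback, unresolved = {}, []
    for feature, levels in by_feature.items():
        tally = Counter(levels)
        modal_level, count = tally.most_common(1)[0]
        share = count / len(levels)
        feedback[feature] = {"distribution": dict(tally), "modal": modal_level, "share": share}
        if share < CONSENSUS_SHARE:
            unresolved.append(feature)  # re-circulated in the next round
    return feedback, unresolved


ratings = {
    "p1": {"templates": "advanced", "reminders": "intermediate"},
    "p2": {"templates": "advanced", "reminders": "intermediate"},
    "p3": {"templates": "advanced", "reminders": "basic"},
    "p4": {"templates": "advanced", "reminders": "advanced"},
}
feedback, unresolved = summarise_round(ratings)
print(feedback["templates"]["share"], unresolved)  # 1.0 ['reminders']
```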

Conducting the Delphi process

Steering committee: formation and role

Initially, a steering committee was formed (van Steenkiste et al. 2002). The first member of this steering committee was a representative of OntarioMD, which is the organisation that promotes the diffusion of e-health programs to primary care physicians in the Province of Ontario in Canada (OntarioMD 2009b) and certifies electronic medical records software. Four of the certified software vendors together hold 90% of the market share for electronic medical records systems, and senior representatives from these vendors were invited to join the Steering Committee. The composition of the Steering Committee is shown in Table 4.

| Organisation | Representative |
|---|---|
| OntarioMD | Vice President |
| EMR Software Vendor 1 | President |
| EMR Software Vendor 2 | Vice President |
| EMR Software Vendor 3 | Vice President |
| EMR Software Vendor 4 | President |

With the aid of the results of the literature review plus their own experience, the Steering Committee helped prepare the starting questionnaire for Round 1 of the Delphi process. Given that the study took place in Ontario and OntarioMD had published specifications for electronic medical records system functionality, the Steering Committee was able to ensure that the universe of indicators they had selected measured capabilities that existed across all the major certified software offerings and that members of the Delphi panel would be familiar with these features.

Having advised on the functions to be included, the Steering Committee assisted with the recruiting of the panel of experts. The committee invited physicians whom they considered to be expert users to participate in the research. All experts were familiar with at least one certified electronic medical records system. Once the physicians agreed to participate, they were in direct and confidential contact with the researchers. Twelve physicians agreed to participate throughout the study, which is within the range suggested as satisfactory by Murphy et al. (1998). Each panel expert was asked to fill out a questionnaire that collected personal, professional and clinical information. The panel consisted of 10 men and 2 women; on average, the experts had been using an electronic medical records system for 8.3 years, and nine of them had been practising medicine for 15 years or more. See Table 5.

| Age range (years) | Count | Years of practice | Count |
|---|---|---|---|
| 34-40 | 1 | 6-10 | 2 |
| 41-44 | 2 | 11-15 | 1 |
| 45-54 | 6 | 15-20 | 5 |
| 55-64 | 3 | 21-25 | 2 |
| 65+ | 0 | 25+ | 2 |
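The panel figures quoted above can be checked directly against Table 5. In this small Python sketch the bands are transcribed from the table; keying the years-of-practice bands by their lower bound is our own assumption, needed because the published bands overlap at 15 and 25 years, and it treats the 11-15 band as under 15 years.

```python
# Table 5, transcribed. Years-of-practice bands are keyed by their lower bound
# (an assumption: the published bands overlap at 15 and 25 years).
AGE_BANDS = {"34-40": 1, "41-44": 2, "45-54": 6, "55-64": 3, "65+": 0}
PRACTICE_BANDS = {6: 2, 11: 1, 15: 5, 21: 2, 25: 2}


def panel_size(bands: dict) -> int:
    """Total number of panellists counted across a set of bands."""
    return sum(bands.values())


def practising_at_least(bands: dict[int, int], years: int) -> int:
    """Panellists whose years-of-practice band starts at or above `years`."""
    return sum(count for lower, count in bands.items() if lower >= years)


print(panel_size(AGE_BANDS))                    # 12
print(panel_size(PRACTICE_BANDS))               # 12
print(practising_at_least(PRACTICE_BANDS, 15))  # 9
```

Both columns account for all twelve panellists, and the three bands starting at 15 years or later hold the nine experienced practitioners mentioned in the text.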

As part of this questionnaire, each expert was asked to list 'at least three features of your electronic medical records system that you use which you feel classify you as an advanced user'. Examples they gave included customising templates, building flow sheets and using the system to write referral letters with macros that automatically filled in patient demographics. These responses confirmed that the physicians had progressed beyond the routine stage and were deploying advanced capabilities for their own benefit. They therefore qualified as advanced users under the definition of an advanced user as one who extends the system (Jasperson et al. 2005; Hsieh and Zmud 2006). They were then engaged in the consensus-seeking rounds of the Delphi process.

Round one: brainstorming

The purpose of the first round is to solicit opinions from the panel within the scope defined by the Steering Committee (Schmidt et al. 2001). Once the panel of experts had been identified and we had explained their role, they were asked:

If you were to meet other physicians and discuss with them how they use their electronic medical records system, you would be able to judge whether their use can be classified as basic, intermediate or advanced. You would probably ask them questions about which functions they use and how they use those functions. From their answers, you would be able to make your judgment. The objective of this Round of the process is for you to classify the type of replies that you would expect.

Rather than using the terminology of low, medium and high (Eder and Igbaria 2001) or pre-novice, routine and advanced (Auer 1998), we described the levels of use as basic, intermediate and advanced so that the panellists would understand, without further explanation, that their task was to differentiate between levels of use. Before the seed question was finalised, a draft was sent to the Steering Committee, who commented on the clarity of the instructions. The panellists were then sent a semi-structured questionnaire listing the core features suggested by the Steering Committee and were asked, for each feature, what actions would help them identify whether the user was basic, intermediate or advanced.

The semi-structured questionnaire was sent as an attachment to an e-mail explaining that the objective of the research was to determine how physicians could be supported to gain full value from their electronic medical records system and so improve the delivery of healthcare. To ensure confidentiality, e-mails were sent to the panellists as blind copies. When the responses were returned, each panellist's confidentiality was further protected by copying the responses into a master table in which the sole identification of the originator was a coded reference number known only to the researcher.
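The master-table step can be sketched in Python. This is a minimal illustration, not the authors' tooling: the function name `pseudonymise`, the `P01`-style codes and the e-mail-keyed input format are all hypothetical. Codes are assigned in random order so that they reveal nothing about who replied first, and the key linking codes to addresses is returned separately so that it can be stored apart from the master table.

```python
import secrets


def pseudonymise(responses: dict[str, dict]) -> tuple[list[dict], dict[str, str]]:
    """Copy each panellist's answers into a master table identified only by a code.

    `responses` maps a (hypothetical) panellist e-mail address to that
    panellist's answers. Returns the master table and a separate key that
    maps each code back to an address; only the researcher holds the key.
    """
    emails = list(responses)
    # Shuffle so codes reveal neither reply order nor alphabetical order.
    secrets.SystemRandom().shuffle(emails)
    master_table: list[dict] = []
    key: dict[str, str] = {}
    for n, email in enumerate(emails, start=1):
        code = f"P{n:02d}"  # hypothetical coding scheme: P01, P02, ...
        key[code] = email
        master_table.append({"code": code, **responses[email]})
    return master_table, key


table, key = pseudonymise({
    "dr.a@example.org": {"Clinical note writing": "Uses structured templates"},
    "dr.b@example.org": {"Clinical note writing": "Types free-text notes"},
})
print(sorted(row["code"] for row in table))  # ['P01', 'P02']
```

Keeping the key out of the master table means the consolidated responses can be circulated for later rounds without exposing who wrote what.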

Round two: narrowing down the responses

In the semi-structured questionnaire used in round one, each question received multiple suggestions from each of the expert panellists, and in many cases the descriptions were very similar. To prepare the questionnaire for round two, the responses were consolidated before further feedback was solicited. One of the major objectives of implementing an electronic medical records system is to improve patient care (Tevaarwerk 2008; Flegel 2008), so in round two the panel of experts was asked to rate the importance of each item on a six-point Likert scale. Specifically, the experts were asked:

...to determine which items contribute the most to the improvement of patient care. Please review the items and indicate their contribution, using the scale from 1 to 6 where 1 is "very little improvement to patient care" and 6 is "very significant improvement to patient care".

The importance score for each item was calculated as the mean of all panellists' responses for that item. The functions with a mean score of 5 or higher were carried forward to round three. In the opinion of the expert users, these were the core functions that enabled the physician to improve the delivery of healthcare.
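The round-two scoring rule is simple arithmetic: average each item's 1 to 6 ratings and keep the items whose mean is at least 5. The sketch below assumes that ratings are held per item as lists of integers; the function names are our own, and the item names and ratings in the example are invented for illustration and are not the panel's data.

```python
from statistics import fmean

THRESHOLD = 5.0  # items averaging 5 or higher were carried into round three


def importance_scores(ratings: dict[str, list[int]]) -> dict[str, float]:
    """Mean of the panel's 1-6 Likert ratings for each item."""
    for item, scores in ratings.items():
        if not scores or any(not 1 <= s <= 6 for s in scores):
            raise ValueError(f"{item}: ratings must lie between 1 and 6")
    return {item: fmean(scores) for item, scores in ratings.items()}


def retained_items(ratings: dict[str, list[int]], threshold: float = THRESHOLD) -> list[str]:
    """Items whose mean rating meets or exceeds the threshold, in input order."""
    scores = importance_scores(ratings)
    return [item for item, score in scores.items() if score >= threshold]


# Invented ratings from twelve hypothetical panellists, for illustration only.
example = {
    "Item A": [6, 6, 5, 6, 5, 6, 6, 5, 6, 6, 5, 6],
    "Item B": [4, 5, 3, 4, 5, 4, 4, 3, 5, 4, 4, 4],
}
print(retained_items(example))  # ['Item A']
```

Item A averages about 5.67 and is retained; Item B averages about 4.08 and is dropped. Using `>=` keeps an item that averages exactly 5.0, matching the "5 or higher" rule.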

Round three: confirmation

In round three, the list of important features was sent back to the panel for confirmation. Panellists were asked whether the wording was clear and whether any features overlapped. Responses from the experts were consolidated to provide input for round four.

Final round: consensus seeking

The Delphi process continues until consensus is reached (Murphy et al. 1998). To this end, the participants were given feedback in successive rounds so that they could confirm their understanding and move towards consensus (Schmidt 1997). Consensus on the list of tasks that would serve as indicators of level of use was reached at the end of round four.

Results

In the setup process, the Steering Committee defined three functional areas and identified a total of sixteen functions within them. See Table 6.

| No. | Function | Area |
|---|---|---|
| 1 | Clinical note writing | Clinical |
| 2 | Cumulative patient profile | Clinical |
| 3 | Flowsheet (chart or graph of vital statistics over time) | Clinical |
| 4 | Reports | Clinical |
| 5 | Drug interactions | Clinical |
| 6 | Specialist reports | Clinical |
| 7 | Use of electronic charts | Clinical |
| 8 | Referral letters | Clinical |
| 9 | Test result management | Clinical |
| 10 | Clinical decision support | Clinical |
| 11 | Custom forms for specialised tests | Computerised physician order entry |
| 12 | Ordering lab tests | Computerised physician order entry |
| 13 | Prescriptions - new or renewal | Computerised physician order entry |
| 14 | Prescriptions - new or renewal | Practice management |
| 15 | Reminders | Practice management |
| 16 | Statistical summaries | Practice management |

This list was used as the input for the semi-structured questionnaire that was sent out to the panel of experts at the start of the Delphi process. Responses to round one from each panellist described how they would recognise the level of use for each of the functions listed. See Table 7.

| No. | * | Area | Function | Description of advanced use |
|---|---|---|---|---|
| 1 | * | Clinical | Clinical note writing | Uses templates with discrete structured fields to capture data consistently for future display and reporting |
| 2 | * | Clinical | Cumulative patient profile | Maintains and regularly updates all areas of the profile for all patient encounters |
| 3 | * | Clinical | Flow sheet | Builds and manipulates flow sheet views to display desired data in preferred manner; uses flow sheets to monitor ongoing conditions and engage patient during encounter |
| 4 | * | Clinical | Drug interactions | Enters allergies and diagnoses as discrete structured data, so that system is able to warn about drug-drug, drug-allergy and drug-disease interactions |
| 5 | * | Clinical | Use of electronic charts | Uses electronic charts only |
| 6 | * | Clinical | Referral letters | Generates letters using templates within the system; sends electronically wherever feasible |
| 7 | * | Clinical | Handling incoming patient data | Incoming electronic data is entered into discrete structured fields, which can be searched; non-electronic data is scanned and attached to patient record; image is converted to text with optical character recognition where possible |
| 8 | * | Clinical | Test result management | Tracks and receives laboratory results electronically; reviews results and requests patient visit or sets reminders, all within the system |
| 9 | | Clinical | Clinical guidelines | Applies widely-accepted guidelines through reminders, templates and periodic searches of the patient population |
| 10 | | Computerised physician order entry | Custom forms (e.g., labs) | Orders services and tests using the system, automatically transmitting to service providers by HL7 interface or eFax using forms generated automatically by the system after the physician has completed the notes for the patient encounter |
| 11 | * | Computerised physician order entry | Prescriptions | Prescribes directly from system; uses aliases and custom lists of medications |
| 12 | | Practice management | Communication to patient | Uses letter templates for all communications; uses secure e-mail |
| 13 | * | Clinical | Preventative health care | Schedules screenings and preventive intervention based on clinical guidelines; uses searches to recall patients who are overdue |
| 14 | * | Practice management | Reminders | Schedules screenings and preventive intervention based on clinical guidelines; uses searches to recall patients who are overdue |
| 15 | * | Practice management | Statistical reports | Creates reports to evaluate any clinical or administrative area of the practice |

The panellists confirmed that basic use meant very little use of features, intermediate meant moderate use and advanced meant full use, including customisation. They also agreed with the premise of the research that users should aim to reach an advanced level. Their comments are best summarised by one physician, who stated:

The higher the level of computerization and the more we automate the things we do in our practice over and over, the less time spent on doing those things and therefore the more time we can spend talking with our patients. This, in itself, improves patient care. By not having to remember all of the little details about preventative care, allergic reactions, drug interactions, call-backs etc. allows us to really concentrate on the person in front of us.

In round two, panellists were asked to rate each feature according to its importance, using a Likert scale from 1 to 6. Ten features scored an average of five or higher (they are marked by an * in Table 7). In round three, the experts were given the opportunity to comment on the clarity of the feature descriptions. They were also given the option of weighting any features that they considered more indicative of advanced use than others. All the experts were satisfied with a common weighting of one, as they felt that all tasks were equally significant and that level of use could be measured by responses to these ten indicators. Comments from round three were consolidated, and the feature descriptions, with minor modifications, were fed back for round four. With the feedback from round four, consensus was reached on ten specific tasks that could be asked about in a self-reported questionnaire. The tasks are listed in Table 8.

| No. | Task |
|---|---|
| 1 | Prescriptions written in the office are written using the electronic medical records system |
| 2 | Medications are selected from custom lists within the system |
| 3 | Allergies are entered in a structured field that allows the system to determine related drug allergies |
| 4 | Patient population is searched in order to apply widely-accepted guidelines |
| 5 | Flow sheet views are built and manipulated to display desired data in a preferred manner |
| 6 | Patient reminders are used |
| 7 | Templates (i.e., stamps or custom forms) are used |
| 8 | Data in the cumulative patient profile are maintained up to date |
| 9 | Non-electronic data (e.g., letters from consultants) are scanned and attached to the patient record |
| 10 | Incoming data (e.g., from laboratory reports) are entered into discrete structured fields (i.e., they can be tracked and graphed) |
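Because the panel settled on a common weight of one, a respondent's level of use on the ten Table 8 indicators reduces to a weighted sum. The sketch below assumes, hypothetically, that each task is answered yes or no, since no response format is fixed here; the short task keys and the function name `level_of_use` are our own abbreviations of the Table 8 wording, not part of the instrument.

```python
# Short keys for the ten Table 8 tasks (our own abbreviations, in table order).
TASKS = (
    "prescriptions_written_in_emr",
    "medications_from_custom_lists",
    "allergies_in_structured_field",
    "population_searched_for_guidelines",
    "flow_sheet_views_built",
    "patient_reminders_used",
    "templates_used",
    "cpp_kept_up_to_date",
    "paper_data_scanned_and_attached",
    "incoming_data_in_structured_fields",
)
# The panel agreed on a common weight of one for every task.
WEIGHTS = {task: 1 for task in TASKS}


def level_of_use(responses: dict[str, bool], weights: dict[str, int] = WEIGHTS) -> int:
    """Weighted count of the Table 8 tasks a physician reports performing.

    Assumes hypothetical yes/no answers; with unit weights the score is
    simply the number of tasks performed (0 to 10).
    """
    missing = set(weights) - set(responses)
    if missing:
        raise ValueError(f"missing responses for: {sorted(missing)}")
    return sum(weight for task, weight in weights.items() if responses[task])


answers = {task: True for task in TASKS}
answers["flow_sheet_views_built"] = False
answers["population_searched_for_guidelines"] = False
print(level_of_use(answers))  # 8
```

Keeping the weights explicit, rather than simply counting, mirrors the round-three decision: the panel was offered differential weights and chose equal ones, so a later study that revisits the weighting would only need to change `WEIGHTS`. No cut-offs separating basic, intermediate and advanced use are defined in this section, so the sketch stops at the raw score.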

Discussion and conclusions

A common measure of use in prior research is the holistic measure of time spent on the system. We have critiqued this measure in relation to feature rich systems. As the system becomes more embedded, some users move to a more advanced level and extend the system by modifying features. Practitioners seek to design interventions that can help basic users become more advanced, where 'advanced' refers to increased deployment of available core features. For feature rich systems, we have shown that the holistic measure, system use, is inadequate and should be replaced by 'level of use', specified as a formative construct.

We have described a methodology for the content validation of the construct, starting with a review of the literature and then using the Delphi technique to ensure that the content fits the specific context. Selected experts were asked to classify level of use into three levels using the simple terminology of basic, intermediate and advanced, which both researchers and practitioners can readily understand. We have found that the Delphi process is a suitable and novel methodology for validating content. Because the dependent variable has been specified as formative, context is important: the indicators define the construct, so they must capture the features that matter in the setting being studied. We empirically tested the methodology by validating the content of the construct that measures the post-adoption level of use of EMRs by primary care physicians in Ontario.

A limitation of this research is that the indicators are difficult to measure objectively. Ideally, computer logs detailing which functions were used would provide data with which to triangulate the conclusions drawn from the Delphi process. However, this is impractical because of the sheer volume of data to be filtered and because privacy considerations limit access to such data in a medical setting. A further limitation concerns the selection of the panel of experts for the Delphi process. Because the experts were recommended by software vendors, a few of them may have had limited knowledge of some of the advanced EMR capabilities. This limitation was mitigated by turning to OntarioMD, which considered the final list of experts to be representative.

The content validation that we have performed in this study is the first step of instrument development. The recommended next steps for future researchers are to develop a scale for level of use and to test its reliability (Petter et al. 2007) using quantitative responses from a survey of physicians. Researchers should follow the suggestion of Diamantopoulos and Winklhofer (2001) and add reflective constructs that are nomologically correlated with the formative construct. The resulting model is then sufficiently identified for standard covariance-based tests to be applied.

Our conclusion is that feature rich systems need to be studied at the feature level. This paper has addressed a key theoretical gap in post adoption research by reconceptualising the dependent variable 'system use' as 'level of use' and specifying it as a rich formative construct that measures core feature usage. Further, our research shows that the combination of a context-specific literature review followed by the consensus-seeking Delphi technique is a suitable method to validate the content of this construct as the first step of instrument development. To demonstrate the methodology, we applied it in the context of electronic medical records systems, which are increasingly important in advancing productivity in healthcare.

Acknowledgements

The authors wish to thank OntarioMD, which played a major role in inviting primary care physicians to participate in the research.

About the authors

Norman Shaw is Assistant Professor at the Ted Rogers School of Management, Ryerson University in Toronto, Canada. His current research interest is the post-adoption use of technology. He can be contacted at norman.shaw@ryerson.ca

Sharm Manwani is Executive Professor of IT Leadership at Henley Business School, University of Reading in Henley-on-Thames, United Kingdom. He teaches and researches business and IT integration covering strategy, architecture and change programmes. He can be contacted at sharm.manwani@henley.ac.uk