Vol. 12 No. 3, April 2007

In high risk, safety critical processes, operators must communicate and negotiate essential information efficiently and effectively to avoid accidents and maintain system safety. Advances in computer technology have greatly improved access to information, but the distributed use of information remains a weak link between the actual information, the meaning given to it and the sense that individuals, and consequently groups of individuals, make of it within the boundaries of their work environment. This distribution of information can result in miscommunication about the state of a system configuration and can serve as a precursor to an accident. By studying safety critical systems with an approach that focuses on information behaviour and the nature of the work, researchers may gain an understanding of why processes occur as they do, and of what motivates the information practice of individual operators and groups of operators in an environment with low tolerance for mistakes.

In this paper, I introduce the basic concepts of several relevant theories as a foundation upon which to research real-world, safety critical activity. As a human factors researcher and an information scientist, I would like the reader to keep in mind that my concern rests not solely with evolving a theory but also with developing practical, measurable applications of the work. This paper represents a step in that direction or, as Liam Bannon (1990) eloquently puts it, 'A Pilgrim's Progress'.

To begin the discussion, we must first ask: what is the substance of information behaviour? Is it information that behaves, or is it the users who decide the behaviour of the information? Research in Information Science has endeavoured to classify what is meant by information behaviour. Wilson (2000) describes human information behaviour as the totality of human behaviour in relation to sources and channels of information, including active and passive information seeking and information use. Marchionini (1995) describes information seeking as a process in which humans purposefully engage in order to change their state of knowledge. Ellis (1989; Ellis et al. 1993) modelled the features researchers employ in information seeking behaviour as: starting, chaining, browsing, differentiating, monitoring and extracting. Ellis's work has demonstrated patterns of information behaviour across varied situations and contexts. The substance of information behaviour, then, is that users have an information need and therefore perform an activity that makes use of sources to satisfy it. Information behaviour is cyclical in nature and remains dynamic, responding to the situation at hand.

Estimates suggest that human error contributes to up to 80% of all aviation accidents, through such factors as the captain's authority, crew climate and decision skills (Dismukes et al. 1999). Stick-and-rudder skills, once the basis for conducting a flight, are no longer adequate in the ever-expanding, technologically driven and increasingly distributed world of aviation. Pilot duties include assessing and integrating information to form a cohesive representation of the current and future state of the aircraft, known as situation awareness (Endsley 1999). Commercial pilots are more than mere drivers of aircraft: they must transform data presented to them through a myriad of digitised and auditory interfaces into a meaningful, accurate representation of the real world as it exists at present and as it will exist in the near future, all the while keeping in mind how it existed in the immediate past. The data pilots use to conduct a flight are highly predictable because of flight planning, known aircraft characteristics and standard operating procedures, but remain sufficiently flexible to be adjusted to the needs of the individual flight. That is to say, data for the mission often share common elements or arrangements, but flight data depend on the specific properties of the unique flight (e.g., weather, location, weight and aircraft) at a specific point in time. For example, flight instruments are consistently monitored, but the frequency and pattern of monitoring depend on the state of the flight. An approach for landing requires more frequent monitoring and updating of information, because of the proximity of terrain and obstacles, than does straight-and-level flight at higher altitudes, where terrain avoidance is not a concern.

Historically, the design and information needs of the flight deck have been studied through the lens of individual cognition, performance and perception. Nardi (1996) notes that system design benefits from explicit study of the context in which users work. Bishop et al. (2000) note that, with limited exposure to a new system, designers and users can anticipate only a limited range of problems and use scenarios. Researchers must therefore take advantage of opportunities to study information use within the context of entrenched work conventions. Grounding research in Activity Theory through situated ethnographic study allows researchers to capture the integration and obscuration of events that inform the design and evaluation of decision support tools, and such research is urgently needed in the aviation environment. However, its practice in aviation has been limited by the proprietary nature of airline information and by the capacity of the federal government to find fault with, and take action against, individual pilots. Consequently, aviation studies are often performed in simulators or other laboratory environments. Simulator studies, however, need careful design if they are to reflect the complex interactions and environment that pilots encounter in their work. Laboratory studies that focus on a single display system out of the context of a flight regime, while important, may do a disservice to pilots, who perform their work in an actual distributed environment. Such studies may support the pilot's discrete actions with a technology, but they are separated from the dynamic relations and characteristic activities of the actual aviation environment (cf. Engeström 1987, 1990).

Understanding the limits on cognition and human information processing certainly illuminates how digital flight information should be presented, and these factors must be considered before decision support tools are designed and put into practice. Yet errors may be socially situated, and understanding them as such may allow us to explain why some categories of error persist over time while others appear to be resolved. Cognitive, individual error causation is only part of the picture, and human factors research has devoted much effort to understanding this type of error. Yet it is only one form of activity or orientation within the more comprehensive ecology of information (failure) on the flight deck. As soon as there is disagreement about what constitutes an error and how it was made, the door is open to ongoing debate about how mistakes can be identified and rectified. The focus here is not on what constitutes error, but on how errors may be socially propagated, not only through what the operators in a system do, but also through what they say, and thus through how they share information. As Kling (1999) notes, (social informatics) research must become interdisciplinary to understand and account for the design, uses and consequences of information technologies and their interaction with institutional and cultural contexts. Bishop and Star (1996) note that several overlapping, yet distinct, research communities have studied the social aspects of knowledge structure and communication in computer-based information systems. While these fields have published numerous studies of communication networks and knowledge structurization, there has yet to be an ethnographic study of the nature and use of information among aviation crews in their entirety (birth to death, as it were).

By drawing principles from the (seemingly heterogeneous) work of researchers such as Vygotsky (Vygotsky 1972; Wertsch 1985), Bourdieu (1977), Leont'ev (1974), Suchman (1987), Hutchins (1996) and Hanks (1996), to name but a few, researchers can create an accurate reference point from which to study information activity, including cultural and habitual information patterns, surrounding the distributed information practice of any high risk, safety critical system (e.g., aviation, medical, nuclear, nautical and rescue operations).

There are several schools of thought on the development of Activity Theory, and differing models have been used as a basis in relation to information seeking behaviour (see Wilson 2006). Kaptelinin et al. (1999) describe Activity Theory as concerned with an activity directed at an object that motivates it and gives it a specific direction. Activities may be goal-directed to satisfy an object (goal), and the actions within an activity may be conscious and varied to meet the goal. They note that the automatic operations through which actions are implemented do not necessarily have goals of their own, but they do support users' actions in their current situations. Luria (1974) suggests that research should focus on the influence of complex social practices rather than reducing research to actions toward single elements. He argues that embedded social practice determines an individual's socio-historical consciousness and thus his or her motivation for action. The tools that individuals use toward action then provide a new layer in the system of practice, constructed upon both the previously established meaning associated with a tool and the new reality of how it is presently used.

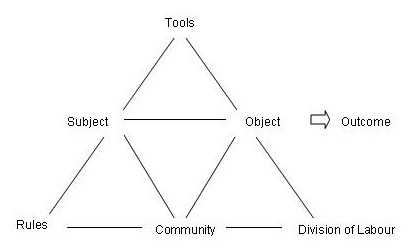

Engeström (1987, 2000) has modelled the structure of an activity system as a mediated relationship (Figure 1), based on Vygotsky's (1972) triangular model, which represents the relationship between a stimulus and a response as either directly connected through lower psychological functions or indirectly related through higher psychological functions. Engeström (1987, 2000) situates these relationships between subject and object, with mediating tools. His model also represents the social community within which the activity occurs, including the rules or norms and the roles or division of labour. These elements are meant to represent the system of activity in terms of the consecutive actions that envelop distributed work practice. According to Wilson (2006), 'The key elements of activity theory, Motivation, Goal, Activity, Tools, Object, Outcome, Rules, Community and Division of labour are all directly applicable to the conduct of information behaviour research'. Given this basis, can we use the elements of activity theory to conduct information behaviour research à propos the problems faced by teams of operators in safety critical systems?

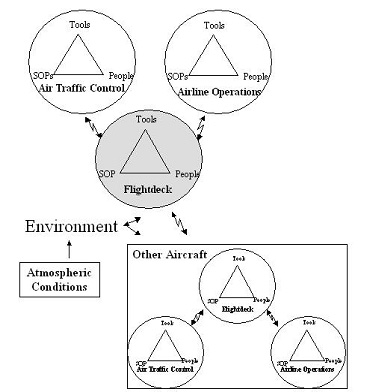

Individuals in an operational team frequently face indeterminate circumstances in which each must use personal judgment to determine meaning and then negotiate this meaning with other team members to arrive at a solution. The flight deck as a socio-technical system envelops the activity-theoretical interdependencies between crew members and technological infrastructure, including interdependencies with air traffic control, airline operations teams and other pilots operating in the system (Figure 2).

Layered within these interdependent systems are communicative media (face-to-face and over the wire) that add a negotiation confound to an already congested system. While we may consider that two pilots sitting next to each other on the flight deck communicate face to face, in reality they communicate through headsets and, most of the time, are not looking at each other when they speak; thus the words they use, and how they incorporate the tools on the flight deck into their work, are of the utmost importance in communicating meaning. Internally and externally, these activity groups negotiate the use of Standard Operating Procedures, tools and human resources. These teams work interdependently to perform their tasks with appropriate flexibility in the context of the distributed work system (cf. Hughes et al. 1992). To think only in terms of the immediate flight crew is to ignore the complex infrastructure devoted to the distributed support system and the direct media through which such support is derived (Bentley et al. 1992).

Hutchins (1995, 1996) notes that information flow in critical operations follows a specific agenda. Among team members, knowledge is sought and obtained in accordance with each member's position and performance of routine procedures. This information must be interpreted situationally. Tools may be designed for a specific purpose, but it is how they are implemented and used across the team that determines their success. According to Hutchins, 'Social organizational factors often produce group properties that differ considerably from the properties of individuals' (Hutchins 1996: xx). Thus, the cognitive properties of a group depend on the character of its social organization rather than on the cognitive properties of the individuals in it. Group information may be more robust than individual information, yet it requires social and organizational devices for continued support of group information retrieval in increasingly complex situations. The efficiency of information retrieval depends on patterns of group size, individual interaction, interaction through time and distribution of knowledge (Hutchins 1996). The same holds on the flight deck: information processed collaboratively by multiple crew members may be more robust than information processed by each individual, but sustaining it requires technological, as well as social and organizational, mediation.

Unfortunately, a damaging social factor in commercial aviation is the institutional flaw of allowing pilots to bid individually for trips, a practice that does not maintain consistency in crew composition over time. The flight deck's social atmosphere must be renegotiated with every change in crew, since interaction patterns do not normally carry over between flight assignments. It therefore remains difficult to negotiate common ground quickly and lay a framework for joint activity (cf. Clark 1996). Interpreting shared information is hampered by this lack of cultural and experiential common ground among flight crews. It is only through the situated activity of the flight that crew members negotiate the meaning and implications of safety critical information for the mission. Moreover, even the tools pilots use to negotiate their interaction can change from flight to flight, as aircraft may have different configurations and different levels of technology. To facilitate interpretation, pilots require clearly defined information with minimal or no distraction, especially during periods of high workload, to achieve effective situation awareness. It has been suggested that external alarms add a layer of situation awareness defence for the crew; yet while attempting to provide decision support, the numerous alarms on the flight deck actually introduce confounds into a system with little tolerance for such complications. At any given time in a flight, the modern aircrew may be confronted with a deluge of aural, visual and tactile alarms. In fact, the phases of flight in which the majority of accidents are known to occur, takeoff and approach-to-landing, during which air crews operate under specific flight guidelines and communication procedures, are also the phases in which most alarms annunciate, disrupting the flow of activity and critical information (Billings 1997).

However, alarms are not foolproof and have been known to give 'false' warnings. Crews are acutely aware of the false alarm rates of warning systems and learn to ignore technologies developed to aid their situation assessment (Billings 1997). As a result of false warnings, aircrews take for granted that alarms will sound at specific junctures in the flight and often do not take the time to heed them. This 'taken for grantedness' (cf. Bourdieu 1977) makes possible the fluidity of everyday aviation operations, without which it would be impossible ever to conduct a flight. Yet ignoring the information contained in the warnings may mean ignoring a true threat. Bentley et al. (1992) describe this organization of work with regard to technology, in the context of air traffic control:

To be usable, [technological artefacts] have to be turned from a machine into an instrument, by being incorporated into the flow of the work and finding a place within the practices and contingencies of the working division of labour. A computer-based system, or any other tool can, on the basis of its material properties, be known about, geared into, made to work, tinkered with, taken advantage of, used in unanticipated ways, modified, circumvented, or plain rejected by those who work with it, implement it, invest in it, or manage it. That is, it is densely woven into the activities of doing the job and in highly particular ways (Bentley et al. 1992: 117).

Technological advances may add to flight efficiency, but they may not aid operators, who learn to rely on these systems and become de-skilled in their performance (Bainbridge 1987). The invisibility of flight processes under advanced automation takes the crew out of the information-seeking loop and tends to defeat, rather than sustain, distributed information seeking practice. Over-reliance on technology may instil a false sense of safety in the flight crew and promote a complacent information-seeking environment, in which the crew uses the technology to provide, rather than support, their collective knowledge. Van House et al. (1998) discuss how changes in technology influence knowledge communities by either undermining or supporting trust. The crew is taught to trust that automated systems work properly and that they need not be aware of each automated change to the state of the aircraft. A flight crew thus necessarily learns to trust automation that operates at an opaque level. As aptly described by Weiner (1988: 138), 'A number of accident and incidents in recent years have been laid at the doorstep of automation, not failures of the equipment so much as human error in its operation and monitoring'. So, in addition to monitoring, detecting and recovering from increased multi-modal effects with decreased mode awareness, flight crews must also maintain an accurate representation of where they are actually located in space and time, share complex information with each other that they may not individually fully understand and, of course, fly the aeroplane and arrive safely and on schedule.

What do flight crews do in cases of uncertainty? Human information processing does not necessarily flow in a comprehensible manner. Consider John Dewey's treatise on reflective thought. Dewey defines reflective thought as: 'Active, persistent and careful consideration of any belief or supposed form of knowledge in the light of the grounds that support it and the further conclusions to which it tends' (Dewey 1910/1991: 6). Reflective thought is a conscious effort to establish belief upon a solid foundation of reasoning, that is, upon factual rather than merely suggested evidence. In our day-to-day activities we encounter facts that suggest other facts, persuading us to believe the latter on the basis of our knowledge of the former. In other words, we suppose other facts that we do not yet know but expect to confirm, based on our past experience. When our activity progresses seamlessly from one interest to another, there is no need for substantial reflective thought. However, when we are forced to weigh alternatives (such as choosing which fork to follow on a road we have never travelled before), we either leave our fate to luck or attempt to decide based on evidence, which involves memory, observation or both. Our reflection is based upon discovering proof that will serve our ends. However, memory, or habit, may be detrimental to reflective thought and may result in a choice that does not fit the present circumstances, because the focus is on the outcome.

Information must be accessible and stable, yet at the same time dynamic, to meet the needs of the crew in safety critical, high risk systems. Pilots use mental models (Johnson-Laird 1983), dynamic internal representations of the real world, to gain situation awareness. Carroll and Olson (1988) note that mental models entail knowledge of the components of a system, knowledge of their interconnection and knowledge of the processes through which they change state. This knowledge is coupled with the user's knowledge of how to configure actions and the user's ability to explain why a set of actions is correct. It is this mental representation of the system state that allows pilots to maintain situation awareness. Mental models allow pilots to interpret information and perceive cues that may indicate a problem requiring immediate attention or action (Orasanu 1993). However, pilots must constantly update their mental model(s) through 'continuous extraction', a term Dominguez (1994) uses to describe the process of constantly ensuring the most up-to-date mental representation of the state of the system. By continually updating their perception of the system state, pilots maintain a cognitive representation of the flight's position. On the modern flight deck, however, the technology itself continually updates the aircraft's position and its transitions between flight states, which may in turn influence the pilot's information updating and mental model of the system state. Using the aircraft systems, a pilot must decide when to look for new information, where to look and why. A pilot may frequently fixate on information or instrumentation without properly revising his or her mental representation of the circumstances. In these situations, pilots may trust the mental model of previous successful flights as a model for the current situation, which imparts a false sense of confidence.

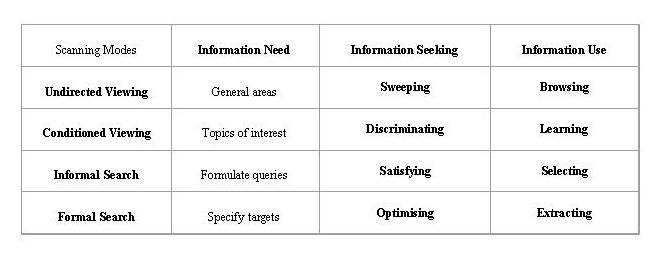

In a study examining how researchers used the World Wide Web, Choo et al. (2000) drew on behavioural models of information seeking to describe the process a user follows to scan the environment and satisfy an information need. They incorporated research rooted in organizational science, originally based on Aguilar's (1967) fieldwork and subsequently expanded by Weick and Daft (1983). This work suggests that parties scan in four distinct modes: undirected viewing, conditioned viewing, informal search and formal search.

In their study, Choo et al. (2000) amplified the information seeking implications of each of the aforementioned modes by elaborating on the direction of the scanning and the amount and kind of effort expended, combining aspects of these models into a multidimensional framework: on one axis, the four scanning modes; on the other, the categories of information seeking behaviour identified by Ellis (see Figure 3 for a representation of their model). Ellis (1989; Ellis et al. 1993) proposed a general categorization of information seeking behaviour as: starting, chaining, browsing, differentiating, monitoring and extracting.

Ellis (1997) later updated this work, adding to his original distinctions.

Ellis notes that when users distinguish between sources, information channels such as discussions or conversations, as well as secondary sources, are normally ranked higher than primary sources (Ellis & Haugan 1997).

Choo et al. (2000) concluded that each mode of information seeking was distinguished by users' recurrent sequences of search tactics. Knowledge workers employ multiple, complementary methods of information behaviour, with differing motivations and tactics, to collect qualitative and quantitative data. These tactics range from undirected viewing, when there is no specific information need, to formal, focused searching for information used in decision making or in forming an action plan.

This study adequately addressed the detailed behaviour of experienced knowledge researchers in a Web-based task satisfying a static information need, in which every Web page and path can be distinguished and accurately tracked. However, it did not lend itself to the study of distributed practice or to adaptation to a dynamic operational environment; nor was it designed to do so. The level of detail that Web tracking provided in the Choo et al. model cannot be achieved in a social environment, and the model does not allow behaviour to be linked to outcomes such as system failure, error and accidents. Still, the framework developed in their research proved a beneficial starting point from which to connect previous work in information behaviour to a model of distributed information behaviour within an activity system.

To build upon the Choo et al. (2000) model, commercial aviation operations serve here as the basis for an information-rich activity system. The flight deck of a modern air carrier employs multiple operators who use advanced tools and distinctive language to perform work whose goal is safe and efficient operation. In aviation, technologies have been developed and placed on the flight deck to mitigate accidents; still, underlying problems in the system infrastructure, which may have necessitated the technology in the first place, persist and have not been adequately addressed. The emphasis has mainly been on cognitively grounded, rather than socially grounded, theories feeding into the development of technological fixes for cognitive or attention-based problems. While certain technological fixes may prove useful as support tools for human operator awareness, they do not appear to mitigate problems with the underlying social activity on the flight deck. By studying accidents through the lens of the social activity on the flight deck, we can begin to understand the information behaviour of the crew and the overall information ecology within and beyond the flight deck.

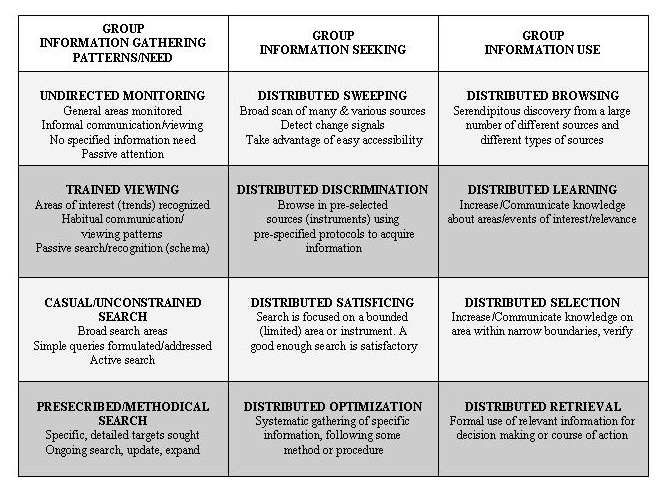

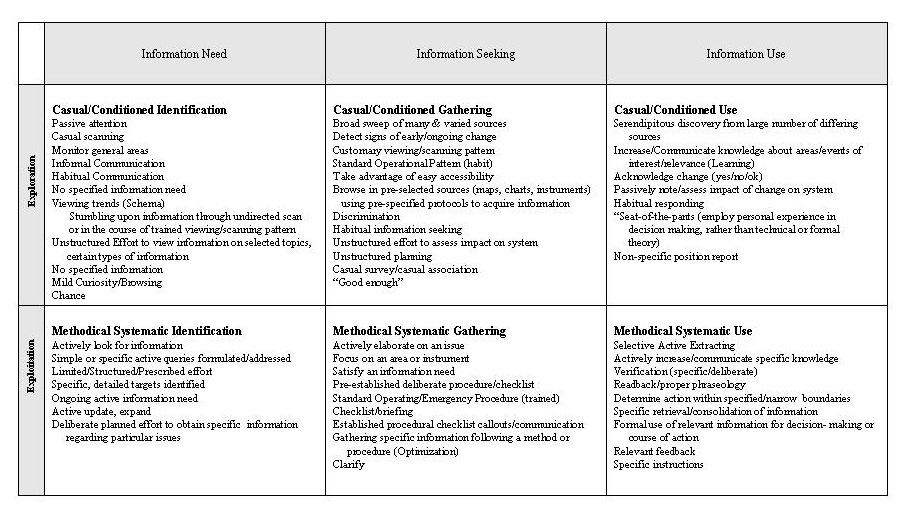

Distributed information behaviour in safety critical operations necessarily differs from that of the World Wide Web users in the Choo et al. (2000) study. Among other factors, operators have limited resources and a limited amount of time in which to perform a search. On the flight deck, pilots frequently must make decisions based on incomplete information and must choose what appears to be the most favourable of the options available. Simon's concept of satisficing addresses the bounded information available on the flight deck at any given time. That is to say, pilots necessarily determine a solution that is satisfactory given time constraints and limits on available information, rather than devoting substantially more time and attention to arriving at an optimal solution (Simon 1956). In other words, crews must either exploit their current information or take the time to explore another path of information, which may or may not prove helpful. To better illustrate the dynamic process of distributed information behaviour, Marchionini's (1995) term extracting, as opposed to retrieving, was added. Extracting resonates more with pilot duties, referring to the use of information by reading, scanning, listening, etc. As information is extracted, it is manipulated and integrated into the domain. Figure 4 represents the Crew Information Behaviour grid as originally redesigned.
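The contrast Simon draws between satisficing and optimizing can be made concrete with a minimal Python sketch. It assumes, purely for illustration, that options (for instance, candidate courses of action) are encountered one at a time in search order, that each carries a numeric value estimate, that the crew holds a fixed aspiration level, and that time pressure caps how many options can be examined (the hypothetical `budget` parameter). The functions `satisfice` and `optimize` and all values are invented for this sketch and are not drawn from the study.

```python
from typing import List, Optional, Tuple

# Hypothetical representation: (label, estimated value), listed in the
# order in which the options would be encountered during a search.
Option = Tuple[str, float]


def satisfice(options: List[Option], aspiration: float,
              budget: int) -> Tuple[Optional[str], int]:
    """Return the first option whose value meets the aspiration level,
    examining at most `budget` options (a stand-in for time pressure).
    If none qualifies in time, fall back to the best option examined.
    Also return how many options were examined."""
    best: Optional[Option] = None
    examined = 0
    for label, value in options[:budget]:
        examined += 1
        if value >= aspiration:
            return label, examined  # good enough: stop searching here
        if best is None or value > best[1]:
            best = (label, value)
    return (best[0] if best is not None else None), examined


def optimize(options: List[Option]) -> Tuple[Optional[str], int]:
    """Examine every option and return the highest-valued one."""
    if not options:
        return None, 0
    label, _ = max(options, key=lambda option: option[1])
    return label, len(options)


if __name__ == "__main__":
    # Illustrative, made-up options and values.
    choices = [("option A", 0.60), ("option B", 0.80), ("option C", 0.95)]
    print(satisfice(choices, aspiration=0.75, budget=3))  # ('option B', 2)
    print(optimize(choices))                              # ('option C', 3)
    print(satisfice(choices, aspiration=0.99, budget=2))  # ('option B', 2)
```

One design choice is worth noting: when no option clears the aspiration level before the budget runs out, the sketch still returns the best option seen rather than nothing, which loosely mirrors the point that on the flight deck some action must eventually be chosen.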

The distributed practice within the grid may be visualized as moving from left to right and up and down as information is negotiated. It begins at the left with identifying a need, moves into seeking information to satisfy the need and eventually arrives at putting the information to use. This is not necessarily a linear process, as sequences may be redefined or refined within needing, seeking and using information, depending on what information is available and on the needs that develop from the search and use of that information. Moving vertically through the grid, moreover, represents the exploration/exploitation continuum: practices at the top of the grid represent the exploration of multiple sources, while those towards the bottom represent the exploitation of fewer, more specific sources. The interaction of the crew may then be analysed for each instance of information behaviour and coded into the grid scheme (von Thaden 2005).
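The coding step described above can be illustrated with a minimal Python sketch that tallies coded crew utterances into a grid. The left-to-right stages (need, seek, use) follow the text; the vertical exploration-to-exploitation axis is represented here by four hypothetical ordinal levels, because the exact row labels of Figure 4 are not reproduced in the text. The class `CodedUtterance`, the level names and any codes assigned to utterances are assumptions for illustration, not data or categories from the study. A second function counts moves between the cells of consecutive utterances, which makes any non-linear movement through the grid visible.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List, Tuple

# Columns: stages of distributed practice, read left to right (from the text).
STAGES = ("need", "seek", "use")
# Rows: hypothetical ordinal levels, from exploration of many sources (top)
# to exploitation of few, specific sources (bottom).
LEVELS = ("explore-wide", "explore-narrow", "exploit-narrow", "exploit-specific")

Cell = Tuple[str, str]  # (level, stage)


@dataclass(frozen=True)
class CodedUtterance:
    speaker: str  # hypothetical role label, e.g. "CA" or "FO"
    text: str
    level: str
    stage: str

    def cell(self) -> Cell:
        """Return the grid cell for this utterance, rejecting unknown codes."""
        if self.level not in LEVELS or self.stage not in STAGES:
            raise ValueError(f"uncodable utterance: {self}")
        return (self.level, self.stage)


def tally(utterances: Iterable[CodedUtterance]) -> Counter:
    """Count how many coded utterances fall into each grid cell."""
    return Counter(u.cell() for u in utterances)


def transitions(utterances: List[CodedUtterance]) -> Counter:
    """Count moves between the cells of consecutive utterances."""
    cells = [u.cell() for u in utterances]
    return Counter(zip(cells, cells[1:]))


def render(grid: Counter) -> str:
    """Format the tallies as a plain-text table (rows are levels)."""
    lines = ["".ljust(18) + "".join(s.rjust(6) for s in STAGES)]
    for level in LEVELS:
        counts = "".join(str(grid[(level, s)]).rjust(6) for s in STAGES)
        lines.append(level.ljust(18) + counts)
    return "\n".join(lines)


if __name__ == "__main__":
    # Invented codes for a short exchange; not data from the study.
    episode = [
        CodedUtterance("FO", "Uh, how does that work?", "explore-wide", "need"),
        CodedUtterance("CA", "I think it was five thousand.", "exploit-narrow", "seek"),
        CodedUtterance("FO", "Should I take care of that?", "explore-wide", "need"),
        CodedUtterance("CA", "Sounds good.", "exploit-specific", "use"),
    ]
    print(render(tally(episode)))
    print(dict(transitions(episode)))
```

Keeping each coded utterance as an ordered record, rather than only incrementing cell counts, is what allows the transition count: the same tallies could arise from a tidy left-to-right sequence or from repeated returns to identifying a need.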

This framework initially appeared to lend itself to understanding the information activity of the commercial air carrier flight crew (von Thaden 2003). The information ecology on the modern flight deck ranges from monitoring and trend-watching behaviour to intense, focused activity driven by a specific information need. Delineating the information behaviours crews perform in the context of the flight deck environment may provide a window through which to view the operational needs of the crew, determine crew performance and allow the development of improved infrastructure and training methods in the real world of commercial aviation operations. Yet the categorizations in the original model proved problematic for this domain. As mentioned previously, Choo et al. (2000) propose that environmental scanning involves undirected viewing, conditioned viewing, informal search or formal search. When a pilot leans back, just looking around, is there a distinction to be made between the categories undirected and informal? While there may be, the distinction between the categories became muddied in the context of social activity.

In aviation operations, time and space continually change. Pilots have a constant need for information that can never be entirely satisfied, because as soon as one information need is satisfied, it changes. What holds true at one point in time and space may not hold true at the next. Using the tools in the environment, which includes engaging in specific discourse, allows pilots to negotiate their information needs constantly: the continual process of identifying essential information, gathering evidence to assure themselves that their diagnosis of the situation is correct and then using the information accordingly. Once information is put to use, pilots' information needs still exist, as pilots must decide whether their understanding of the information remains valid. Pilots must also act upon numerous information needs at one time.

From their first lesson, pilots are trained to scan the environment, look for trends, understand what the trend information conveys, decide what to do about it and chart a course of action. It is an interesting environment because pilots must frequently form decisions and execute actions. There is no flight in which a pilot can decide not to act, because sooner or later the laws of physics will prevail. Given the dynamics and training in aviation operations, it makes sense to study information behaviour as either passive and conditioned or active and formal. Pilots generally put information into practice in two ways. Either they actively engage in a methodical, systematic, highly standardized and defined procedure for making sense of the environment, an almost feed-forward activity (although this methodical process can actively draw on an understanding of past events), or they passively and casually survey the environment or their instruments, a more experiential, seat-of-the-pants endeavour of looking for things that may be out of place or may not feel right. In other words, pilots function informally or formally, looking at or looking for information. These broad distinctions of pilot information behaviour allow a general understanding of their work practice. Although integrating the categorizations from the Choo et al. (2000) model may lose some of the intricate information strategies, the real need is to understand the basis for information behaviour within the activity of the flight: is it solely personal experience or formal methodology? Based on these distinctions, between actively engaging in a feed-forward information process and passively engaging in a habituated one, the Distributed Information Behaviour System was developed to include new categorizations of information practice (Figure 5).

For each action in the Distributed Information Behaviour System, different criteria determine the categorization of the discourse or action. Note that each instance of discourse follows a prior communication from which it draws its context.

In Casual and Conditioned Identification, general areas of interest are passively viewed (scanned) using casual or informal means. No specific information need is communicated, but simple queries may be formulated or addressed on broad search areas. Oral examples of Conditioned Identification on the flight deck include:

'What's this?'

'Should I take care of that?'

'Oh wait, what were you doing?'

'Oh ####... can you get that?'

'Uh, how does that work?'

Casual and Conditioned Gathering may consist either of broadly sweeping varied resources to detect change signals and take advantage of easily accessible information, or of passively fixating on a limited area or instrument. Oral examples of Conditioned Gathering on the flight deck include:

'I think it was five thousand'.

'We'll see the rabbits and all that stuff'.

'We probably want to go right er well maybe left. Most likely that'll be good'.

'Just do the parallel, that'll be good'.

In Casual and Conditioned Use, information may be discovered serendipitously through passively browsing a number of different resources. Conditioned Use may also entail passively or habitually acknowledging a change within narrow boundaries or using personal rather than technical criteria to arrive at a decision. Oral examples of Conditioned Use on the flight deck include:

'Sure'.

'Sounds good'.

'Oh we'll do all that'.

'We'll try it next time around'.

'It's right there'.

Non-specific position report on frequency

In Methodical and Systematic Identification, general areas of interest or trends are actively recognized using practiced viewing patterns (schema). Specific detailed targets are actively sought or simple specific needs are updated and expanded through an ongoing search. Oral examples of Methodical Identification on the flight deck include:

'Do you want to take the controls so I can brief for the approach?'

'We're going to be landing soon so can I have the descent checklist?'

'What's the decision height for ILS one-four?'

Methodical and Systematic Gathering of information involves actively browsing in preselected sources or instruments using prespecified protocols (methods/procedures) to acquire information, such as attending to a checklist. Methodical Gathering also consists of active, ongoing measurement. Oral examples of Methodical Gathering on the flight deck include:

Approach Briefing: 'Field elevation at Springfield is five hundred and ninety seven feet. Two-two-hundred is our safe approach altitude. Final approach course is two-two-one... '.

Checklist callouts: 'Cowl flaps - closed. Carburettor heat - as required. Mixtures enriched'.

Measuring the descent: '...coming down through one hundred feet ... ninety ... eighty... keep it coming... fifty feet... '.

Reading Chart: 'All right then we'll go outbound for a minute and you're four-point-nine away from procedure turn on the DME'.

Methodical and Systematic Use of information entails actively increasing specific knowledge about areas of interest, relevance, or change. Relevant information is used to determine a specific course of action. Methodical Use of information also entails meticulous confirmation (verification) of information. Oral examples of Methodical Use on the flight deck include:

'Debuke Traffic, Homer one-four-two is inbound on the ILS one-five'.

'Be sure that I maintain three-four hundred feet on the altitude, I'm trying to hold this ADF'.

Response: 'Roger, three-four-hundred feet'.

Response: 'Roger, I have control of the airplane'.

At missed approach point: 'I do not have the runway in sight, let's go missed'.

While the coding process is fodder for another discussion, below is a broad overview demonstrating how the various foundations link together in the Distributed Information Behaviour System (note that the transcriptions below are excerpted from actual experimental data testing the application of the Distributed Information Behaviour System framework) (von Thaden 2004). Imagine that you are in the jump seat observing the flight crew on a commercial flight in a relatively small plane such as a Piper Seminole; the chances are that, while on approach-to-land at the airport, you would hear something like this [Captain (CPT), First Officer (FO)]:

CPT Before landing

FO I'll do the before landing checklist, roger... cabin briefing... cabin briefing complete. Shoulder harness... secure. Seat backs... fuel selectors... electric fuel pumps...

CPT On... fuel on...and both pressure.

FO Mixtures rich, or as required.

CPT Roger, mixtures enriched.

FO 3700 cleared to 2000... carb heat is still on. Rec and strobe lights on... pitot heat is on... and I figure in about ten minutes should I give company a call.

CPT Carb and pitot on... lights on... uh we'll actually call him when we are inbound because by the time we get on the ground and taxi we'll uh... .

FO Yeah that's a good call.

FO Ok, we'll call company when inbound.

CPT Bring throttle back to 20 again.

FO Back to 20 roger and uh 1000 feet to go.

CPT 1000 to go. Get a little inside my arc here see what we're doing, a little bit early see how we're doing... less than 3000 and uh let's uh verify DG we got going on here, 1-1-0. 1-1-0. I'm good. You're good. All right.

FO Our altimeters are off or at least ours are different 3-0-1-1

CPT 3-0-1-3

FO It's 3-0-1-1 on the altimeter, ok they're back

CPT Still holding it steady at 9.5 DME

FO Less than 2500, 500 to go

Employing a framework such as the Distributed Information Behaviour System, you would see that this interaction largely represents distributed methodical and systematic information behaviour. Both the captain and the first officer are prepared for their tasks and monitor the processes ahead of the aircraft (situation awareness in practice). The captain calls for the before-landing checklist, the first officer goes through the checklist items and the captain responds specifically to each item (though at times using the accepted, abbreviated technical vernacular). There is continual back-and-forth verification of information between the crew members. This crew engages in the systematic process of performing the before-landing checklist and verifying that the information they are employing does indeed match the information they are gathering. While the crew engages in a small amount of casual and conditioned information behaviour, it contributes positively to the work activity. Using casual and conditioned information behaviour, the crew agrees to call their airline company operations when they are near the airport. They also notice that their separate altimeters do not appear to match, and they casually watch the trend until the altimeters become synchronized again as they roll out of a turn. It would appear that one altimeter lagged a bit more than the other. This crew's actions represent what Engestrom (2000) would refer to as a constant stabile activity system.

Another crew flying exactly the same approach-to-land course might progress something like this [Captain (CPT), First Officer (FO)]:

FO Do we want to do a descent checklist prior?

CPT Descent checklist, altimeter set and check did you already do yours?

FO 3-0-0-5

CPT 3-0-0-5 is correct, cowl flaps closed

FO How's our cylinder head?

CPT Hey, I'm not the pilot on the controls, man. Cylinder head temperature hasn't changed it's been up in the 400 range the entire time so...

FO Ok you're the captain

CPT Well you're... you're the guy flying. I suggest we leave them alone.

FO That is fine by me.

CPT Uh, carburettor heat as required... ok... mixtures enriched I'll wait till we come back in on the procedure turn to do that. Environmentals and review the approach. Ok if you want me to fly I can just keep updating you on stuff

FO Um

CPT Whatever works for you

FO Are we almost... ?

CPT Yeah we are almost there, it's 9.6

CPT Actually it's coming in real close so I'd say start the turn

FO What's our time? What's our... ?

CPT 9.9

FO Ok

CPT If you want to start your turn

FO Or wait a little bit...

CPT Cause we are coming...

FO Are we?

CPT Yeah.

The discourse presented here represents casual, 'seat of the pants' distributed information behaviour from both the captain and the first officer. This crew performs the checklist items in an unstructured manner. While they engage in some systematic information behaviour (they hold the checklist yet seldom reference it, and they are aware they are on approach to the airport), they do not actively follow or share the very specific information they need to exchange at this important juncture of the flight. The crew's casual performance of the descent checklist, their deferral to each other's judgment without systematically verifying information, and their offhand discussion of the information needed for a critical operation leave them open to missing vital safety information and to assuming that checks have been performed. Although their actions and discourse are not entirely unreasonable (I say this because they did indeed land safely, albeit without being stabilised on their approach to land), they represent hazardous (dis)information behaviour. This crew demonstrates what Engestrom (2000) would refer to as deviations from standard script, or disturbances in activity. While this crew may not have had an accident as a result of this unstructured information activity, the type of distributed information behaviour they demonstrated contributed to an accident on a subsequent simulated flight (von Thaden 2005).

In a separate empirical study using the Distributed Information Behaviour System (von Thaden, submitted), distinctions can be made between crews who coordinate a stabile information activity system and those who deviate from standard script, thus disturbing the information activity system. Of the crews who had accidents in the simulated environment, all demonstrated significantly casual/conditioned information behaviour on missions prior to and including their accident flight. Crews whose information behaviour consistently consisted significantly of formal/methodical activity did not fly a mission that resulted in an accident. When quantified, the data gleaned from the Distributed Information Behaviour System not only distinguish formal from casual information behaviour but also show the percentage of instances in which crews are searching for, gathering and using information in the various stages of flight, an important distinction for distributed sensemaking. Using activity theory in concert with an information behaviour framework allows researchers to identify weaknesses in crew performance, to identify differences between crews who err to the point of an accident and those who do not, and to make targeted improvements, either by training crews appropriately or by building more supportive infrastructure, thus, it is hoped, reducing the chances of an accident in the future.
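To make the quantification step more concrete, the following is a minimal Python sketch, assuming each utterance has already been hand-coded with a mode (methodical/systematic or casual/conditioned) and a stage (identification, gathering or use). The labels, the `CodedUtterance` type and the `proportions` and `mode_share` functions are hypothetical illustrations, not the coding instrument used in the studies cited; the sketch only expresses each mode-stage cell as a share of all coded utterances, and the category assignments for the sample lines are assumptions made for demonstration.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical shorthand labels for the two modes and three stages of the
# Distributed Information Behaviour System; not the author's coding scheme.
MODES = ("methodical", "conditioned")
STAGES = ("identification", "gathering", "use")


@dataclass(frozen=True)
class CodedUtterance:
    speaker: str  # e.g. "CPT" or "FO"
    text: str
    mode: str     # one of MODES
    stage: str    # one of STAGES


def proportions(utterances):
    """Return the share of coded utterances falling in each (mode, stage) cell."""
    for u in utterances:
        if u.mode not in MODES or u.stage not in STAGES:
            raise ValueError(f"uncoded or unknown category: {u}")
    counts = Counter((u.mode, u.stage) for u in utterances)
    total = len(utterances)
    # Every cell is reported, including empty ones; an empty input yields zeros.
    return {
        (m, s): (counts[(m, s)] / total if total else 0.0)
        for m in MODES
        for s in STAGES
    }


def mode_share(cells, mode):
    """Sum the three stage cells for one mode (formal vs. casual overall)."""
    return sum(v for (m, _), v in cells.items() if m == mode)


# Illustrative coding of a few lines from the first transcript
# (these category assignments are assumptions, not the study's coding).
sample = [
    CodedUtterance("CPT", "Before landing", "methodical", "identification"),
    CodedUtterance("FO", "Mixtures rich, or as required.", "methodical", "gathering"),
    CodedUtterance("CPT", "Roger, mixtures enriched.", "methodical", "use"),
    CodedUtterance("FO", "Yeah that's a good call.", "conditioned", "use"),
]

cells = proportions(sample)
print(f"methodical: {mode_share(cells, 'methodical'):.0%}")   # methodical: 75%
print(f"conditioned: {mode_share(cells, 'conditioned'):.0%}")  # conditioned: 25%
```

A breakdown by stage of flight would follow the same pattern with an additional phase field on each utterance. Whether differences in these proportions between crews are meaningful would still require appropriate statistical testing, which this sketch does not attempt.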

At issue is how best to ensure efficient and effective use of essential information in high risk, safety critical environments such as aviation operations. Using ethnographic approaches, researchers and practitioners may study activity in safety critical systems: the exact interactions through which the activity is negotiated, and how information is distributed internally and externally to perform the activity successfully.

What constitutes the activity of distributed practice in safety critical, highly dynamic systems? It comprises the people, the tools and the processes they employ in their environment, together with the historical and social contexts in which they perform the work, including their discourse. By understanding the processes that allow for seamless fluidity of operation in high-risk systems, we can form models of supportive infrastructure that scale to the demands of everyday collective activity. To do so, we need to draw on multiple foundations to study information activity.

By analysing the information behaviour of teams who have accidents and those who do not, researchers may be able to ascertain how they make (or fail to make) use of essential, safety critical information in their information environment. This research makes it possible to discern differences in distributed information behaviour, illustrating that teams who err to the point of an accident appear to practise different distributed information behaviours from those who do not. This foundation serves to operationalise team sensemaking by illustrating the social practice of information structuring within the activity of the work environment.

The Distributed Information Behaviour System provides a useful structure for studying the patterning and organization of information distributed over space and time to reach a common goal, by identifying the role information practice plays in the critical activity of negotiating meaning in high reliability, safety critical work. The approach is applicable to other domains as well.

My thanks to Ann Bishop and Michael Twidale at the Graduate School of Library and Information Science, University of Illinois at Urbana-Champaign, for their assistance in the early stages of this work and the referees for this special edition for their helpful and well-considered review of the draft paper.


© the author, 2007. Last updated: 28 March, 2007