The conversation as the answer: collective information seeking and knowledge construction on a social question and answer site

Ander Erickson

Introduction. This paper presents an analysis of the information seeking practices found on a social question and answer site, Ask Metafilter, from the perspective of social constructionism. It has been suggested that the study of epistemic communities is a prerequisite for a better understanding of information seeking; this paper undertakes such a study in the context of a social question and answer site.

Method. The author takes a social constructionist approach through the study of a series of conversations on the Ask Metafilter social question and answer website.

Analysis. This is a conversation analysis of the sets of answers associated with questions on Ask Metafilter.

Results. The author was able to identify important features of collective information seeking and knowledge construction such as the importance of corroboration, elaboration, and productive disagreement.

Conclusion. These findings suggest a new direction for social question and answer research in which the unit of analysis becomes the conversation rather than a single answer. They also have implications for how knowledge is socially constructed more generally.

Information consists of social arguments that take part in ongoing conversations about the meaning of an issue or a phenomenon. (Tuominen, Talja, and Savolainen, 2002)

Introduction

Information seeking has commonly been conceptualized as a mode of behaviour (Savolainen 2007). Investigators observe people as they react to sources encountered through the Internet (Tombros, Ruthven and Jose, 2005), new online learning devices in their workplace (Dimond, Bullock, Lovatt and Stacey, 2016), or engage in self-directed learning online (Nadelson et al. 2015). Studies of this sort, those that describe ‘how information needs, seeking and the relevance criteria of individuals are affected or directed by their current emotional and cognitive states, situations and work tasks' (Talja, Tuominen and Savolainen, 2005, p. 85), have contributed much to our understanding of the way in which individuals carry out information seeking tasks, but they tell us very little about the objects to which those tasks are directed. In particular, a focus on information behaviour elides the practices that inform the construction of knowledge, 'the cultural formation of meanings, representations and classifications' (Talja, et al., 2005, p. 85). Beginning in the 1990s, a countermovement arose in the information science community among those who believe that information seeking is an irreducibly social phenomenon, that discourse is the primary site of knowledge construction (Talja, 1997), and that the most productive understanding of information seeking needs to be grounded in an examination of the ‘epistemic communities' that ratify and produce information (Tuominen, Savolainen and Talja, 2005, p. 85).

These are all tenets of social constructionism, a metatheory that challenges the cognitive view, which sees knowledge as a representation of an outside reality residing within the individual mind, and instead 'sees knowledge as dialogically constructed through discourse and conversation' (McKenzie, 2003, p. 262). A methodological approach informed by this perspective demands that the researcher treat observed information seeking activities as instantiations of cultural practices. These practices are created and carried out by epistemic communities: knowledge-producing groups which have generally been conceptualized as professional and informed by the disciplines (Knorr Cetina, 1999). Fortunately, the Internet has created a venue for more informal and general-purpose communities (Burbules, 2001). The activities of these communities may, therefore, help us understand how information can be constructed collectively and provide us with a glimpse of the other side of information seeking: the creation of the information that is being sought out.

One possible venue for such an investigation is the social question and answer site, a service that includes (1) a means of expressing an information need using natural language, (2) a way for people to respond to those needs, and (3) a community centred upon participation in the service (Shah, Oh and Oh, 2009). This paper is focused on one site, Ask Metafilter, because its membership requirement and prevalent social norms (Silva, Goel and Mousavidin, 2009) reflect the epistemic communities described by Tuominen, et al. (2005). I will argue that taking the social constructionist approach seriously entails one very salient difference between this study and social question and answer research more generally: the complete set of answers to a given question, which can be characterized as a conversation, becomes the unit of analysis rather than the individual answers. The term conversation is intended to reflect both the social constructionist view of knowledge construction and the fact that contributed answers do not simply respond to the initial prompt but often respond to one another. It also suggests that we can learn about how knowledge is constructed by attending to the conversational function (Eggins and Slade, 2005) of individual responses; accordingly, that function is the focus of the analysis reported below.

Review of related research

Information practices

Traditional studies of information seeking tend to foreground the behaviour, emotions, and cognition of the individual seeker. This research can be of great use to the information professional, who is granted insight into the experiences of potential clients and patrons (e.g., Case, Given and Mai, 2016; Jacobs, Amuta, and Jeon, 2017), but apart from insights into the way people assess the credibility and relevance of information sources (Saracevic 2007; Rieh and Danielson, 2007; Metzger and Flanagin, 2015), it provides few tools for understanding the nature of the information itself. While these analyses can play an important role for those involved in developing information searching interfaces, they can lead to a superficial view of the information itself, the consequences of which are evident in pedagogies that teach students how to evaluate information sources. The most common approach is the enumeration of criteria for assessing sources, a pedagogical strategy that has been disparagingly referred to as the checklist method (Meola 2004; Metzger 2007). A student might be asked to 'check to see if the information is current', 'consider whether the views represented are facts or opinions', and 'seek out other sources to validate the information', along with a host of other tasks (Metzger 2007, p. 2080). A number of critiques have been levelled at this method (Fister 2003; Meola 2004; Metzger 2007). One of the most suggestive comes from Burbules, who argues that savvy web users could be 'deceived by "obvious" markers of credibility that — precisely because they are generally reliable indicators of credibility — are easily falsified by clever information-providers with an eye toward deception' (Burbules 2001, p. 1).

An alternative approach, both to this problem in particular and to information seeking more generally, is provided by Tuominen et al. (2005), who suggest that information literacy be defined as a sociotechnical practice. These authors approach information seeking from a sociocultural rather than a cognitive standpoint (Savolainen, 2007). While the study of information behaviour draws upon 'one of the basic categories of behavioural research, that is, need' (Savolainen 2007, p. 114), those interested in information practices argue that 'information seeking and retrieval are dimensions of social practices and that they are instances and dimensions of our participation in the social world in diverse roles, and in diverse communities of sharing' (Talja and Hansen, 2005, p. 125). Accordingly, the sociotechnical practice described by Tuominen, et al. assumes that:

Reading may be seen as essentially a shared activity in the sense that it deals with the evaluation of different and often conflicting versions of reality. Groups and communities read and evaluate texts collaboratively. Interpretation and evaluation in scientific and other knowledge domains is undertaken in specialized ‘communities of practice' or ‘epistemic communities'. (Tuominen, et al. 2005, p. 337)

This implies that the study of information ought to attend to the ‘communities of practice' (Lave and Wenger 1991) through which information is sought and generated. Tuominen, et al. (2005, p. 341) close their article with a call for further research: 'we need to understand the practices of these communities before we can effectively teach [information literacy]'. This study is, in part, a step in that direction.

Social constructionism

What does it mean to say that information seeking is a practice and what does that say about information itself? The constructionist viewpoint, as described by Talja, et al., 'sees language as constitutive for the... formation of meaning' and 'replaces the concept of cognition with conversations' (Talja, et al., 2005, p. 80). This approach is grounded in the idea that people employ historically constructed discourses to apprehend the world and that the apprehension and construction of new knowledge is mediated by these same discourses. Talja et al. note that most constructionist research 'has concentrated on analyzing the field's professional and scientific discourses' but that 'constructivist approaches are more commonly implied in empirical information studies than constructionist approaches' (Talja, et al. 2005, p. 91). Yet Tuominen, et al. have argued that constructionism has the potential to shift 'the focus of research from understanding the needs, situations, and contexts of individual users to the production of knowledge in discourses, that is, within distinct conversational traditions and communities of practice' (Tuominen, et al. 2002, p. 278).

As an example, Tuominen (2004, Methods, para. 4) presents a study in which the discourse of heart surgery patients and their spouses is analysed. In describing his methodological approach, he notes that 'discourse analysis focuses on the fluid and subtle ways speakers and writers use rhetorical and cultural resources to construct variable versions of states-of-things and events'. This negotiation is reflected in the reported interviews through the patients' and spouses' implicit valuation of ideal patient and ideal ill roles. In this study, I suggest that while discourse may be used for this sort of positioning and assignment of values, discursive construction also plays a very practical and pervasive role through its assessment of the information need and of the information object itself. This role of discourse, although always present, has historically been difficult to pin down because of the transient nature of conversations and the static nature of most available information objects. The Internet, however, has afforded the researcher and the information seeker the opportunity to get a closer look at Talja, et al.'s (2005) conversations.

Social question and answer websites

Social question and answer websites are defined by Shah et al. (2009) as services that include (1) a means of expressing an information need using natural language, (2) a way for people to respond to those needs, and (3) a community centred upon participation in the service. The first two requirements encompass many everyday encounters in environments ranging from libraries to schools to homes, while the introduction of the Internet allows the third requirement to be fulfilled. The sites that fall into this category range from the popular Quora and Yahoo! Answers to the much smaller membership-driven site Ask Metafilter.

On the face of it, a space designed for conversation focused on fulfilling an information need would seem ideally placed both to examine and to extend the constructionist approach toward information seeking. This makes it all the more interesting that most studies of social question and answer have eschewed that approach. In Shah, et al.'s (2009) earlier review of social question and answer research, the authors divide the foci of inquiry into content-centred and user-centred. More recently, Srba and Bielikova (2016) conducted a comprehensive survey of the same area and divided the research base into exploratory studies, content and user modelling, and adaptive support. Each of these surveys notes that much existing research is focused on user satisfaction (e.g., Kim and Oh, 2009; Choi and Shah, 2017) and answer quality (e.g., Adamic, Zhang, Bakshy and Ackerman, 2008; Su, Pavlov, Chow and Baker, 2007; Harper, Raban, Rafaeli and Konstan, 2008; Gkotsis, Liakata, Pedrinaci, Stepanyan and Domingue, 2015). Studies that focus on question-answerers rely on classification schemes that attempt to identify the characteristics of those who supply the best answers to a question (Gazan 2006), while others focus on the features of users' encounters with information on these sites (Jian, Zhang, Li, Fan and Yang, 2018). Another study, by Bouguessa, Dumoulin and Wang (2008), attempts to identify authoritative actors through link analysis of a weighted directed graph representing user interactions. In none of these cases is the idea that multiple users could collectively construct information entertained.

This disregard of the social in social question and answer studies may be partially due to the structure of the most popular sites. While Shah et al. (2009) refer to the development of a community as the third part of their definition of social question and answer, large sites such as Yahoo! Answers and Quora do not have a user-base that looks anything like the communities of practice that are central to the social constructionist approach. The latter communities 'are groups of people sharing similar goals and interests; employing common practices; working with the same information objects, tools, and technologies; and expressing themselves in a common language' (Talja and Hansen, 2005, p. 128). Even if we extend this definition to encompass more casual communities that arise outside the bounds of workplace settings, it is hard to see how the more than 200 million users of Yahoo! Answers could ever fit it. Furthermore, the notion of a single right answer is built into an interface that encourages users to vote for the best answer and places the winner above the rest of the responses. This diminishes the possibility of meaningful conversation, since most users are likely to aim at providing a highly valued contribution and know that success means their response will be decontextualized through placement at the top of the page.

We can see an alternative format in the Ask Metafilter website, a question and answer component of the website Metafilter. The community weblog Metafilter has been the subject of occasional scholarly investigation but has received little of the attention paid to social question and answer more generally. This may be partially due to the fact that the website, while serving over ten thousand active users, has a community that is orders of magnitude smaller than those boasted by Yahoo! Answers, Quora, and other social question and answer sites. Ask Metafilter embodies the third part of Shah et al.'s (2009) definition through a smaller user-base that is structured around a community weblog with well-established interactional norms. These norms are encouraged by moderation, discussion amongst members of the community, and a $5 membership fee (Silva, Goel and Mousavidin 2009). An examination of answers to questions in Ask Metafilter revealed that any single response to the information needs expressed by contributors to the forum is incomplete, and sometimes nonsensical, when read without the other responses that surround it. This implies that the entire exchange, or conversation, is the appropriate unit of analysis.

Research questions

This study presents an analysis focusing on the conversational function (Eggins and Slade, 2006) of the responses on the social question and answer site Ask Metafilter. It serves three mutually supportive goals: (1) the social construction of knowledge will be exemplified via the reported discourse, (2) the analysis of this discourse will contribute to the development of tools for talking about collective information seeking, and (3) a characterization of the nature of these interactions will provide a lens for observing collective information seeking in less ideal environments (e.g., larger social question and answer sites).

Accordingly, one of the aims of this study is to demonstrate that the social constructionist perspective can account for features of social question and answer websites that are disregarded by traditional approaches. More specifically, the study addresses the following research questions:

- Do the participants in a social question and answer website engage in collective information seeking and knowledge construction?

- If so, how do participants’ responses support collective information seeking and knowledge construction?

- What can the responses in a social question and answer website tell us about the social construction of knowledge more generally?

To give some immediate force to the questions above, I note that problems arise when one looks closely at the information produced by a social question and answer site with a traditional conception of knowledge in hand. For example, how does one talk about right or wrong answers when the information need involves some ambiguity? It could be argued that the researcher ought to interview the person who originated the question, but this disregards two things. First, it disregards the role that question and answer sites play: a given question and its accompanying answers may be viewed by many more people than those initially involved in the transaction. Second, it disregards the inchoate nature of most information needs: inquirers ‘don’t really know what might be useful for them’ (Belkin 2000, p. 58).

Research method

In what follows, I examine the responses to queries in Ask Metafilter through the lens of conversation analysis (Eggins and Slade, 2005) by assessing each contribution as a move in an ongoing conversation spurred by the initial question. This was done by inductively coding (Miles and Huberman, 1994) each response according to its role in the conversation. The initial coding was descriptive with a focus on the object and apparent purpose of the response. This meant that each turn in the conversation was coded according to whether it was responding to the initial question or another response and also given a code indicating the apparent purpose of the turn (e.g., providing an answer, pointing to another information source, disputing somebody else’s answer). This was followed by pattern coding used to identify ‘inferred themes’ (Miles and Huberman 1994, p. 57) that related the conversational moves to the social construction of knowledge. After revising and condensing the pattern codes, three dominant themes emerged: corroboration, elaboration, and disagreement.

Ask Metafilter

Upon opening Ask Metafilter, one sees the following motto underneath the website’s logo: ‘querying the hive mind’. This nicely sums up the rationale behind social question and answer sites: a group of interested people is more likely to be able to answer a question than a single person working alone. But how does this happen on Ask Metafilter? This question can be answered either in a very straightforward way, through a description of the process, which I outline below, or through an analysis of the nature of the responses that populate the site. The latter analysis is the core of this study.

Figure 1: Screenshot of the Ask Metafilter homepage

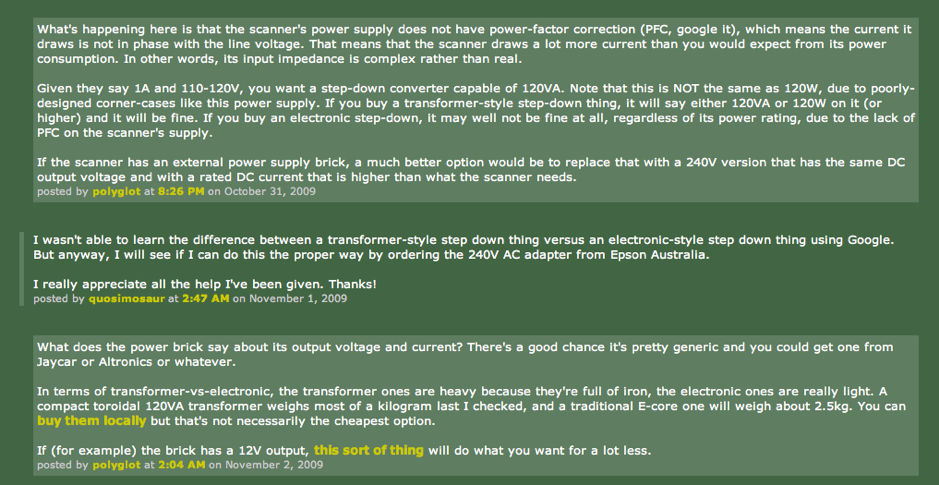

The basic format of an Ask Metafilter question proceeds as follows: a person, hereafter referred to as the poster, decides to ask the community a question and fills out the appropriate form with as much detail as they deem appropriate. Once submitted, this question appears in the top position on the home page, only to be shifted downward once a new question is posted by somebody else; thus, an Ask Metafilter reader encounters a list of questions in reverse chronological order (see Figure 1). The structure of the question and the responses is relatively straightforward. A short version of the question appears on the front page, while a detailed explanation, if necessary, appears when the reader clicks the ‘[more inside]’ link. Members of Metafilter, hereafter referred to as responders, post responses to the question, and these are presented in chronological order on the same page on which the extended version of the question appears. As the reader scrolls through a page of responses, they will encounter some responses that appear within a gray box and others that have a vertical bar to their left (see Figure 2). The former are responses that the poster has marked as ‘best answers’, while the latter are responses written by the poster.

Figure 2: Screenshot of a few responses to a question posted on Ask Metafilter

The sample

I initially examined all of the questions posted on three days: October 29, 30, and 31 of 2009. I chose a period several years in the past to be assured that no new responses would be added; the structure of the forum remains unchanged as of this writing. The entire sample consisted of 222 questions. I then narrowed the sample by distinguishing between informational questions that allowed for multiple responses (e.g., ‘Two young professionals looking for an apartment in Manhattan or Brooklyn’) and those that allowed for only a single answer (e.g., ‘What is the name of the card game in which all the players place a card on their forehead?’). It is easy to argue that conversational and open-ended informational questions demand a type of collective information seeking; the questions that call for a single answer are the real test. If it can be shown that a question that seemingly demands a single best answer is nonetheless usually served by collective information seeking, then the first of my research questions will have been settled in the affirmative.

At this point I was left with 74 questions. The number of responses to these questions ranged from 1 to 32, with a mean of 12. Having isolated my sample, I began to generate a more thorough description of the structure of each set of responses and came to two conclusions. First, the series of responses to a given question was best characterised as a conversation: even when there was no explicit interaction between responses, the vast majority of responses gained appreciable impact by being read against each other. Second, the information seeking and knowledge construction functions of these conversations fell into three central categories: corroboration, elaboration, and disagreement. This suggests that an analysis of social question and answer should use the conversation as the fundamental unit of analysis.

Conversation analysis

Conversation is a turn-taking activity that can be analysed at a number of different levels, including grammar, semantics, and the structure of the discourse (Eggins and Slade, 2005). I will focus on the level of discourse, as this is where meaning is negotiated through a choice of moves (Sinclair and Coulthard, 1975) which combine to make up the entire exchange. Even with the conversational move isolated as the means of describing the course of a conversation, it is important to note that a single turn can fulfil multiple functions, for example, establishing the authority of the speaker or positioning other participants in the conversation. For the purposes of this study, however, I will focus on how each move contributes to a collective response to the original poster’s information need.

Results

Collective information seeking

The collective information seeking captured by the Ask Metafilter conversations, as opposed to the private information seeking that may have led up to them, is always initiated by the posting of a question. Information questions are often highly specified, sometimes including several paragraphs' worth of context. Here is one of the less elaborated examples: ‘I found three salamanders under a trash can sitting on the cement walk next to my house. Is there anything I can do to increase their chances of survival through the winter?’ Once the reader clicks on the ‘[more inside]’ link, they are presented with another nine lines of information. The poster provides additional description and asks supplementary questions such as ‘Should I even be worried about this?’

The subsequent conversations can move in a number of directions. I will provide a more in-depth review of observed conversational moves below, but they can generally be classified as responses to the original poster’s question, responses to those responses, or feedback from the original poster. It is surprisingly rare for a response to be entirely redundant; much more frequent are responses that agree with an earlier response while elaborating on it, or that explicitly corroborate an earlier response. Furthermore, the poster has the freedom to mark any response they wish as a best answer, and this makes the designation ambiguous. A conversation about the liability issues involved in posting false or defamatory material in an obituary included two responses that were in disagreement. Both were marked as best answers by the poster, who gave no indication of which side they took. The problems involved in marking a question as resolved could be treated as a limitation of the system, but it is hard to imagine any system that could designate the true best answers. In fact, I hope to demonstrate that an analysis at that level misses a fundamental point: information needs are best answered by multiple voices which contradict, corroborate, and elaborate upon one another.

Overview of structuring moves

How is it that knowledge is constructed by the ‘hive mind'? I will present a taxonomy of the responses that I encountered in my sample along with examples which fall into the following three broad categories: responses to the original poster’s stated question, responses to responses, and feedback from the original poster.

Responses to the original question

Responses are often simple attempts to satisfy the expressed information need. Conversation 45, in which the poster asks for the name of a song that they heard at a comedy club, provides an example of some of the forms this can take (see Table 1).

| Type of answer | Example |
|---|---|
| Unmodified answer | "'She’s my girl', by The Turtles" |
| Mild hedging | "'Girlfriend’ by Omarion and Bow Wow, maybe?" |
| Major hedging | "It’s probably not, but it sounds like it could be Jet-Are You gonna be My girl?" |

Responses do not have to take the form of a direct attempt to satisfy the poster’s need. As noted throughout the literature, requests for information are often met with responses couched as narratives (Bruner 1991; Gorman 1995). Conversation 6, in which the poster asks whether it is normal to have headaches when wearing contact lenses, provides a few examples of responses that take this approach. Such narratives are sometimes used to warrant a specific recommendation and, at other times, are provided unelaborated, leaving the reader to draw their own conclusions. Here is an example of the former:

When I first got contacts, it took wearing them everyday for about two weeks before I got used to them. I would think that wearing them as seldom as you are, it will be harder to get your eyes adjusted. (Response 15, Conversation 6)

And the latter:

When I had contacts, my doctor told me my astigmatism was minor enough that I could go without the correction in the contacts. But I did tend to get headaches when wearing them for more than a few hours, and I think that was the cause. There was always a minor but weird ‘perspective shift’ when I switched between glasses and contacts. (Response 7, Conversation 6)

Finally, responses may point the poster to a different information source rather than providing a direct answer. The seed for conversation 39 is a question about a black velvet painting that appears in the Stanley Kubrick film The Shining. Nobody was able to identify the painting, but several responders suggested where the answer might be found: TinEye (a reverse image-search web site), Getty Images (where images can be searched by category), and The Stanley Kubrick Archive.

Responses to other responses

This category of response is what makes the conversation a conversation. The most fundamental moves are agreement and disagreement. Conversation 4, in which a poster asks whether leaving cruise control on all the time is bad for their car, provides a number of examples of both (see Table 2).

| Type of response | Example |
|---|---|
| Disagreement with evidence | ‘Contrary to Burhanistan I’ve heard that cruise control is actually BETTER for gas mileage, as the computer is more consistent on gas than your shaky foot is.’ (Response 4) |
| Agreement | ‘Burhanistan is right, I think, both that it’s probably not as safe and that, presumably, if you use cruise control a lot it might wear out faster.’ (Response 8) |
| Agreement with multiple parties | ‘Another vote for better mileage, no damage.’ (Response 10) |
| Tentative disagreement | ‘If Burhanistan is right, I’d like to see the study. I’ve always heard the opposite but I don’t have a study to point to either.’ (Response 11) |

The proliferation of disagreement, i.e., the transformation of a conversation into an argument, can be rather productive in the context of Ask Metafilter. These arguments trace a very different trajectory from many real-time arguments: the nature of the interaction seems to encourage subsequent responses that contain more and more evidence as the conversation progresses. Conversation 4 follows such a course. None of the first 15 responses fills more than a few lines; then, as the argument grows more heated, 6 of the 13 remaining responses run to at least a couple of paragraphs.

Feedback from the poster

The poster can, and often does, contribute to the conversation. The nature of the interaction can take many different forms: a confirmation of a particular response or responses, a follow-up post that tells everybody what was eventually done with the information that was volunteered, a thank you to those who participated sometimes accompanied by an explanation for why certain responses were marked as best answers, or a further elaboration of the information need. This last is often based upon either an explicit request for further information from one of the responders or the discovery that the original question was not specific enough. Feedback of this sort is necessary for queries that involve the poster looking for a song or picture that they recall from the past. So, for conversation 45 in which the poster was looking for a song with the lyric ‘that’s my girl, hey!’, almost half of the responses were the original poster telling people that their guesses were wrong and providing clarifications (e.g., ‘the song was more poppy than rocky’, ‘it wasn’t a comedy song... more of a garage-rock, hipstery song’).

More interesting interactions between the poster and the respondents occur in conversation 53, in which the poster is looking for a way of calculating the force of a crosswind that hit their Mazda Miata. In the course of the ensuing conversation, the poster restates a response and asks whether the restatement is correct, contributes a few additional assumptions to make the mathematics easier, asks about an implication of one of the responses, thanks a respondent for a link, explains some of the thinking that went into the formulation of the question, and even mediates between two respondents who disagree. The poster does all of this in 8 of the 24 responses that the conversation elicited.

Discussion

What do these conversational moves imply for collaborative information seeking and knowledge construction? First and most importantly, there are many ways, most of which cannot be assessed ahead of time, in which extra voices can contribute to an information seeking conversation. Specifically, I will describe a number of patterns of moves that support my claim that any analysis of the fulfillment of information needs through the use of a social question and answer site needs to treat the conversation as a unit of analysis. After describing these patterns, I will discuss connections between these findings and information seeking models more generally.

The fundamental role of corroboration

An individualistic point of view asks that we concentrate our attention on the point of contact between the information seeker and the piece of information that they encounter. We can talk about variables that mediate this confrontation: Does the information seeker believe it? Does the information matter to them? What use do they make of it? But if we turn our attention to the collective activity encountered on Ask Metafilter, a different perspective begins to come into focus. As noted above, responders often begin by simply providing an answer to the question as asked. But even this seemingly straightforward transmission of information from one individual to another sets collective information seeking in motion. An answer set out in a public space is open to challenge and criticism, and, as we have seen above, challenges are often forthcoming. This means that even silence, in such a public forum, communicates something. The silence may speak of general agreement or general disinterest but, either way, it has significance.

But silence is not the norm. The user generally encounters a conversation, and the comments often offer some manner of corroboration, frequently coupled with elaboration. In fact, some form of explicit corroboration occurred in over 75% of the conversations I examined. This has profound implications for traditional approaches to credibility. Kim (2010), for example, in an examination of credibility assessment on social question and answer sites, focuses the inquiry on the factors that influence an individual’s assessment of individual answers. This focus obscures the function served by answers that corroborate earlier replies. Credibility is an ever-evolving construct; new pieces of information necessarily color the information that came before. The conversations captured on Ask Metafilter allow one to see this dynamic first-hand. Subsequent responses sometimes confirm, sometimes repudiate, and sometimes shed new light on earlier responses. This happens throughout conversation 4 (on using cruise control): responders provide corroboration, additional evidence, exceptions, and narratives. There is no single answer in the entire conversation that includes all the pertinent evidence and exceptions accumulated by the group.

Elaboration: answering the question that was not asked

It is well established that information seekers do not necessarily know what they are looking for before they find it (Belkin, 2000). As noted above, this can be either because an information need is not fully formed or because of a mistaken assumption (e.g., that there is a single card game in which players place a card on their foreheads). But a query can be extremely specific and well defined while still allowing for expansion that the poster may not have anticipated. This phenomenon is accounted for by many information seeking models (Wilson, 1999), but these models do not give us any traction for thinking about how exactly an information need is affected by new information. By taking the conversation as a site for knowledge construction, we can see what it means for knowledge to be constructed by multiple parties. In conversation 56, the poster asks whether the ancient Greeks and Romans paid for their seats in the theatre and the Circus, respectively. The conversation is summarised in Table 3. The poster asked a yes-or-no question and received elaborate responses. Not only would any single response be incomplete without the others, but the responses also provide more information than was asked for, and this surplus contributes to the long-term value that the information will have for the community. An even more interesting example, because the poster explicitly took up the proffered information, occurs in conversation 40. The question is simply a request for a shareware program that will allow the poster to manipulate census data in order to track the number of people classified as disabled in a given county. One of the responders helps the poster work out some practical aspects of estimating disability status numbers that the poster had not anticipated. By sharing one information need, along with contextual information, the poster realised that they lacked information they had not even known they were missing.

| Response No. | Nature of response |
|---|---|
| 1 | A book is suggested, and the responder draws on the book to provide details about how plays were financed in ancient Greece. |
| 2 | Another response provides further details about the founding of Greek theatre and suggests another book for more background. |
| 3 | This responder concurs with the second response and adds some information about the Romans. |
| 4 | Another responder asserts that the Romans got into the theatre for free and links to the Wikipedia page on ‘Bread and Circuses’. |
| 5 | This responder concurs with the first response, provides quotes from ancient sources in order to add detail, and links to a PDF of another book. |
| 6 | The author of the previous response adds a point about the Roman system. |
| 7 | The original poster thanks everybody and notes that they will mark all the responses as best answers. |

Productive disagreement

While disagreement was not seen in every conversation, it appeared with some frequency: in almost 60% of the conversations examined. The individualistic view of information seeking attends to an individual’s probable reaction upon encountering controversial claims. This can be a productive exercise (Meho and Tibbo, 2003), and knowledge about this behaviour can inform the development of the user interfaces of information systems (Talja, et al., 2005), but it fails to provide any insight into the effect that disagreement can have on the information itself. Reflecting on the foregoing analysis, I will argue that disagreements contribute to information seeking in a number of ways. Before doing so, though, I want to highlight one form of information seeking that is notable for its absence in this environment. Most social question and answer sites seem to embrace the wisdom of crowds (Shah, et al., 2009): the idea that a large group of people can come to a better decision than the most intelligent individuals among them. This orientation is reflected in sites that highlight a single best answer that is voted on by the public. It should be clear, at this point, that Ask Metafilter does not work this way, and the preceding discussion of corroboration and elaboration should give some indication of why it would be difficult to apply that approach to this situation. The conversations that I witnessed could not be reduced to an average answer; instead, they were full of different contributors who each brought different perspectives to the table.

Disagreement helped facilitate knowledge construction by encouraging different parties to provide more evidence for their claims, by bringing out assumptions that had been implicit in the question, and by suggesting approaches to a problem that could sometimes be made to complement one another. Conversation 73, in which a poster asked for advice on getting their 6-month-old baby to sleep, provided excellent examples of all three of these functions. After some initial responses provided suggestions for training the child to sleep all the way through the night, other responders vehemently disagreed, revealing that the expectation on which the question was premised may have been unreasonable. The ensuing argument led to the provision of a number of anecdotal stories, along with actual research, supporting those who held that it is normal for a baby not to sleep through the night at that age. Finally, a review of the variety of responses reveals that there may be a few things the poster could do to help encourage their baby to sleep through the night without resorting to an actual sleep training regimen. As noted above, the wisdom of this crowd cannot be reduced to any single average response, nor could it be understood by looking only at the behaviour of the information seeker who instigated the conversation.

Conclusion

Information does not exist in a pure state apart from the discourses in which it is founded. This means that the study of information seeking behaviour must be complemented by a study of the practices that give rise to the information in the first place. Unfortunately, this process can be difficult to capture. Social question and answer sites provide a unique opportunity to examine some of the ways in which information can be constructed and sought collectively. I have argued that existing information seeking research on social question and answer has been constrained by its focus on information behaviour rather than collective information practices. The current study attempted to remedy this by focusing on the processes embedded in the conversations as a whole.

I identified three generative processes exhibited by the conversations: corroboration, elaboration, and disagreement. These processes demonstrate what it means for conversations to serve as a site of knowledge construction. Corroboration, or the lack thereof, provides feedback to the information seeker which may either support or undermine the credibility of any given response. Elaboration helps clarify the nature of the knowledge that is the object of the initial query. Disagreement is necessary for the representation of diverse perspectives. These processes show the importance of the conversation as a unit of analysis; they can only arise in an environment that allows multiple voices to be heard. Further examinations of information seeking and the social construction of knowledge may be productively informed by the results of studies such as this.

Implications for further study

There are some immediate implications of this work for the analysis of social question and answer sites. Most previous analysis has taken it as a given that there exists such a thing as a best answer to a question and that research ought to be directed toward an evaluation of the responses independently of one another. The evidence provided here suggests that researchers who want to investigate social question and answer ought to treat the entire conversation as their unit of analysis. This has important consequences for design, because the features that afford a productive conversation may be very different from those that encourage people to vote on a single best answer to a question. A simple example can be seen in Yahoo! Answers’ practice of moving the response with the most votes to the top of the page. This makes sense if the goal is to identify a single best answer, but makes no sense when the conversation is treated as paramount, because the practice will inevitably undermine any attempts at dialogue.

But, as suggested at the outset, these findings can also be employed in research on the social constructionist view of information. The conversations referred to by researchers in the field are usually not literal; the methodological approach tends to rely on interviews with information seekers in order to gain access to their discourse (McKenzie, 2003; Tuominen, 2004). The present study demonstrates that it may be possible, in a networked environment, to gain access to actual multivoiced conversations that are intended to seek out and create information. The moves carried out by the participants in these conversations also give us a starting point for describing the way in which information is constructed, vetted, and used. These results can be applied, in turn, to research on information behaviour. For example, a researcher could observe an individual’s information seeking while paying special attention to the way that encounters with corroboration, elaboration, and disagreement affect the decisions that they make. While the conversations captured by social question and answer sites are only a partial representation of the social construction of knowledge, they provide empirical findings upon which further conjectures may be founded.

About the author

Ander Erickson is an Assistant Professor in the School of Interdisciplinary Arts & Sciences at the University of Washington Tacoma. He received his PhD in Educational Studies from the University of Michigan. He can be contacted at: awerick@uw.edu.

References

- Adamic, L.A., Zhang, J., Bakshy, E. & Ackerman, M.S. (2008). Knowledge sharing and Yahoo Answers: everyone knows something. In Proceedings of the 17th international conference on World Wide Web (pp. 665-674). New York, NY: ACM.

- Belkin, N.J. (2000). Helping people find what they don’t know. Communications of the ACM, 43(8), 58-61.

- Bouguessa, M., Dumoulin, B. & Wang, S. (2008). Identifying authoritative actors in question-answering forums: the case of Yahoo! Answers. In Proceedings of the 14th ACM SIGKDD international conference on Knowledge discovery and data mining (pp. 866-874). New York, NY: ACM.

- Bruce, C. (1997). The seven faces of information literacy. Adelaide, Australia: Auslib Press.

- Bruner, J. (1991). The narrative construction of reality. Critical Inquiry, 18(1), 1-21.

- Burbules, N.C. (2001). Paradoxes of the web: the ethical dimensions of credibility. Library Trends, 49(3), 441-453.

- Case, D.O., Given, L.M. & Mai, J-E. (2016). Looking for information: a survey of research on information seeking, needs, and behavior. Bingley, UK: Emerald Group Publishing.

- Choi, E. & Shah, C. (2017). Asking for more than an answer: what do askers expect in online question and answer services? Journal of Information Science, 43(3), 424-435.

- Dimond, R., Bullock, A., Lovatt, J. & Stacey, M. (2016). Mobile learning devices in the workplace: 'as much a part of the junior doctors’ kit as a stethoscope’? BMC Medical Education, 16(1), Article no. 207. Retrieved from https://doi.org/10.1186/s12909-016-0732-z (Archived by the Internet Archive at https://bit.ly/2O49U2Q)

- Eggins, S. & Slade, D. (2006). Analysing casual conversation. Sheffield, UK: Equinox Publishing Ltd.

- Ehrlich, K. & Cash, D. (1994). Turning information into knowledge: information finding as a collaborative activity. In J.L. Schnase, J. Leggett, R.K. Furuta & E. Metcalfe (Eds.), Proceedings of Digital Libraries ’94 (pp. 119-125). Retrieved from https://pdfs.semanticscholar.org/28c1/aef41fb4a61628faec672f0db83f327f93ed.pdf

- Ellis, D. (1989). A behavioural approach to information retrieval design. Journal of Documentation, 46(3), 318-338.

- Fister, B. (2003). The devil in the details: media representation of "ritual abuse" and evaluation of sources. Studies in Media & Information Literacy Education, 3(2), 1-14.

- Gazan, R. (2004). Creating hybrid knowledge: a role for the professional integrationist. [Unpublished doctoral dissertation]. University of California, Los Angeles, California, USA.

- Gazan, R. (2006). Specialists and synthesists in a question answering community. Proceedings of the American Society for Information Science and Technology, 43(1), 1-10.

- Gkotsis, G., Liakata, M., Pedrinaci, C., Stepanyan, K. & Domingue, J. (2015). ACQUA: automated community-based question answering through the discretisation of shallow linguistic features. The Journal of Web Science, 1(1), 1-15.

- Gorman, P.N. (1995). Information needs of physicians. Journal of the American Society for Information Science, 46(10), 729-736.

- Harper, F.M., Moy, D. & Konstan, J.A. (2009). Facts or friends? Distinguishing informational and conversational questions in social question and answer sites. In Proceedings of the 27th International Conference on Human Factors in Computing Systems (pp. 759-768). New York, NY: ACM.

- Harper, F. M., Raban, D., Rafaeli, S. & Konstan, J.A. (2008). Predictors of answer quality in online Q&A sites. In Proceedings of CHI '08: The 26th annual SIGCHI conference on human factors in computing systems (pp. 865-874). New York, NY: ACM.

- Jacobs, W., Amuta, A. O. & Jeon, K. C. (2017). Health information seeking in the digital age: an analysis of health information seeking behavior among US adults. Cogent Social Sciences, 3(1), Article 1302785. Retrieved from https://www.tandfonline.com/doi/full/10.1080/23311886.2017.1302785

- Jiang, T., Zhang, C., Li, Z., Fan, C. & Yang, J. (2018). Information encountering on social question and answer sites: a diary study of the process. In G. Chowdhury, J. McLeod, V. Gillet & P. Willett (Eds.) (pp. 476-486). Cham, Switzerland: Springer. (Lecture Notes in Computer Science, 10766)

- Kim, S. & Oh, S. (2009). Users’ relevance criteria for evaluating answers in a social question and answer site. Journal of the American Society for Information Science and Technology, 60(4), 716-727.

- Kim, S. (2010). Questioners’ credibility judgments of answers in a social question and answer site. Information Research, 15(2), paper 432. Retrieved from http://InformationR.net/ir/15-2/paper432.html (Archived by WebCite® at https://www.webcitation.org/6GNVXn8w7)

- Knorr Cetina, K. (1999). Epistemic cultures: how the sciences make knowledge. Cambridge, MA: Harvard University Press.

- Lave, J. & Wenger, E. (1991). Situated learning: legitimate peripheral participation. Cambridge: Cambridge University Press.

- Lawton, P. (2005). Capital and stratification within a virtual community: a case study of Metafilter. [Unpublished doctoral dissertation]. University of Lethbridge, Lethbridge, Alberta, Canada.

- Liu, Y., Bian, J. & Agichtein, E. (2008). Predicting information seeker satisfaction in community question answering. In S-H. Myaeng, D.W. Oard, F. Sebastiani, T-S. Chua & M-K. Leong (Eds.), Proceedings of the 31st Annual International ACM SIGIR Conference on Research and Development in Information Retrieval (pp. 483-490). New York, NY: ACM.

- Martzoukou, K. (2005). A review of web information seeking research: considerations of method and foci of interest. Information Research, 10(2), paper 215. Retrieved from http://InformationR.net/ir/10-2/paper215.html

- McKenzie, P.J. (2003). Justifying cognitive authority decisions: discursive strategies of information seekers. The Library Quarterly, 73(3), 261-288.

- Meho, L.I. & Tibbo, H.R. (2003). Modeling the information-seeking behavior of scientists: Ellis’s study revisited. Journal of the American Society for Information Science and Technology, 54(6), 570-587.

- Meola, M. (2004). Chucking the checklist: a contextual approach to teaching undergraduates web-site evaluation. Portal: Libraries and the Academy, 4(3), 331-344.

- Metzger, M.J. (2007). Making sense of credibility on the Web: models for evaluating online information and recommendations for future research. Journal of the American Society for Information Science and Technology, 58(13), 2078-2091.

- Metzger, M.J. & Flanagin, A. J. (2015). Psychological approaches to credibility assessment online. In S. S. Sundar (Ed.), The handbook of the psychology of communication technology (pp. 445-466). Chichester, UK: Wiley & Sons.

- Miles, M. B. & Huberman, A. M. (1994). Qualitative data analysis: an expanded sourcebook. Thousand Oaks, CA: Sage Publications.

- Nadelson, L. S., Cain, R., Cromwell, M., Edgington, J., Furse, J. S., Hofmannova, A., ... & Xie, T. (2015). A world of information at their fingertips: college students' motivations and practices in their self-determined information seeking. International Journal of Higher Education, 5(1), 220.

- Pettigrew, K.E. (1999). Waiting for chiropody: contextual results from an ethnographic study of the information behaviour among attendees at community clinics. Information Processing and Management, 35(6), 801-817.

- Rieh, S.Y. & Danielson, D.R. (2007). Credibility: a multidisciplinary framework. Annual Review of Information Science and Technology, 41, 307-364.

- Saracevic, T. (2007). Relevance: a review of the literature and a framework for thinking on the notion in information science: behavior and effects of relevance. Journal of the American Society for Information Science and Technology, 58(13), 2126-2144.

- Savolainen, R. (2007). Information behavior and information practice: reviewing the ‘umbrella concepts’ of information-seeking studies. The Library Quarterly, 77(2), 109-132.

- Shah, C., Oh, S. & Oh, J. (2009). Research agenda for social question and answer. Library & Information Science Research, 31(4), 205-209.

- Silva, L., Goel, L. & Mousavidin, E. (2009). Exploring the dynamics of blog communities: the case of Metafilter. Information Systems Journal, 19(1), 55-81.

- Sinclair, J. M. & Coulthard, M. (1975). Towards an analysis of discourse: the English used by teachers and pupils. Oxford: Oxford University Press.

- Srba, I. & Bielikova, M. (2016). A comprehensive survey and classification of approaches for community question answering. ACM Transactions on the Web (TWEB), 10(3), Article no. 18.

- Su, Q., Pavlov, D., Chow, J.-H. & Baker, W. C. (2007). Internet-scale collection of human-reviewed data. In WWW ’07: Proceedings of the 16th international conference on World Wide Web (pp. 231-240). New York, NY: ACM Press.

- Talja, S. (1997). Constituting ‘information’ and ‘user’ as research objects. A theory of knowledge formations as an alternative to information man-theory. In P. Vakkari, R. Savolainen & B. Dervin (Eds.) Information Seeking in Context: Proceedings of an International Conference on Research in Information Needs, Seeking and Use in Different Contexts (pp. 67-80). London: Taylor Graham.

- Talja, S. & Hansen, P. (2005). Information sharing. In A. Spink & C. Cole (Eds.) New directions in human information behavior (pp. 113-134). Berlin: Springer.

- Talja, S., Tuominen, K. & Savolainen, R. (2005). ‘Isms’ in information science: constructivism, collectivism and constructionism. Journal of Documentation, 61(1), 79-101.

- Tombros, A., Ruthven, I. & Jose, J.M. (2005). How users assess web pages for information seeking. Journal of the American Society for Information Science and Technology, 56(4), 327-344.

- Tuominen, K. (2004). 'Whoever increases his knowledge merely increases his heartache.’ Moral tensions in heart surgery patients’ and their spouses’ talk about information seeking. Information Research, 10(1), paper 202. Retrieved from http://InformationR.net/ir/10-1/paper202.html (Archived by WebCite® at https://www.webcitation.org/5sn61HqX2)

- Tuominen, K., Savolainen, R. & Talja, S. (2005). Information literacy as a sociotechnical practice. The Library Quarterly, 75(3), 329-345.

- Tuominen, K., Talja, S. & Savolainen, R. (2002). Discourse, cognition, and reality: toward a social constructionist metatheory for library and information science. In H. Bruce, R. Fidel, P. Ingwersen, and P. Vakkari (Eds.), Emerging Frameworks and Methods: CoLIS 4. Proceedings of the Fourth International Conference on Conceptions of Library and Information Science, Seattle, Washington, July 21-25, 2002 (pp. 271-283). Greenwood Village, CO: Libraries Unlimited.

- Wilson, P. (1983). Second-hand knowledge: an inquiry into cognitive authority. Westport, CT: Greenwood Press.

- Wilson, T.D. (1999). Models in information behaviour research. Journal of Documentation, 55(3), 249-270.